How To Use The SEO Spider For Broken Link Building

If you’ve not heard of ‘broken link building’ before, it’s essentially a tactic that involves letting a webmaster know about broken links on their site and suggesting an alternative resource (perhaps your own site or a particular piece of content, alongside any others).

There are a couple of ways that link builders approach this, which include –

- Collecting a big list of ‘prospects’, such as resource pages or pages around a particular content theme or search phrase, then checking these pages for broken links.

- Picking a single site and checking the entirety of it for relevant resource pages and broken links (and potentially creating content that will allow you to suggest your own site).

I don’t want to dig too deep into the entire process; you can read a fantastic guide on Moz by Russ Jones. However, as we get asked this question an awful lot, I wanted to explain how you can use the Screaming Frog SEO Spider tool to help scale the process, in particular for the first method listed above.

1) Switch To List Mode

When you have your list of relevant prospects you wish to check for external broken links, fire up the Screaming Frog SEO Spider & switch the mode from ‘Spider’ to ‘List’.

2) Remove The Crawl Depth

By default in list mode the crawl depth is ‘0’, meaning only the URLs in your list will be crawled.

However, we need to crawl the external URLs from the URLs in the list, so remove the crawl depth under ‘Configuration > Spider > Limits’ by unticking the configuration.

3) Choose To Only Crawl & Store External Links

With the crawl depth removed, the SEO Spider will now crawl the list of URLs and anything it finds linked from them (internal links, external links and resources).

So, next up you need to restrict the SEO Spider to just crawl external links from the list of URLs. You don’t want to waste time crawling internal links, or resources such as images, CSS, JS etc.

So under ‘Configuration > Spider > Crawl’, keep only ‘External Links’ enabled and untick all other resource and page link types.

This will mean only the URLs uploaded and the external links found on them will be stored and crawled.

4) Upload Your URLs & Start The Crawl

Now copy your list of URLs you want to check, click ‘Upload > Paste’ and the SEO Spider will crawl the URLs, reach 100% and come to a stop.

5) View Broken Links & Source Pages

To view the discovered external broken links within the SEO Spider, click on the ‘Response Codes’ tab and ‘Client Error (4XX)’ filter. They will display a ‘404’ status code.

To see which pages from the uploaded URL list contain the broken links, click on a URL in the top window pane and then click on the ‘Inlinks’ tab at the bottom to populate the lower window pane.

As you can see in this example, there is a broken link to the BrightonSEO website (https://www.brightonseo.com/people/oliver-brett/), which is linked to from this page – https://www.screamingfrog.co.uk/2018-a-year-in-review/.

6) Export Them Using ‘Bulk Export > All External Links’

This export will contain all URLs in the list uploaded, as well as their external links and various response codes.

7) Open Up In A Spreadsheet & Filter The Status Code For 4XX

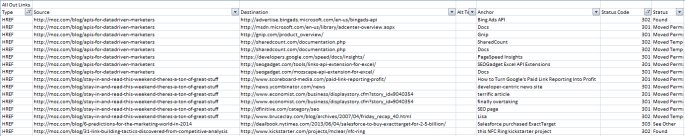

The uploaded seed URLs are the source URLs in column B, while their external links, which we want to check for broken links, are the destination URLs in column C. Filter the ‘status code’ column for 4XX responses and you may see some ‘404’ broken links.
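If you would rather filter the export with a script than in a spreadsheet, here is a minimal Python sketch. It assumes the export is a CSV with columns named ‘Source’, ‘Destination’ and ‘Status Code’; those header names, the `broken_links` helper and the sample rows are illustrative assumptions, so check them against your own export.

```python
import csv
import io


def broken_links(rows, source_col="Source", dest_col="Destination",
                 status_col="Status Code"):
    """Return (source, destination, status) for every row with a 4XX status.

    The column names are assumptions; adjust them to match your export.
    """
    found = []
    for row in rows:
        try:
            status = int(row[status_col])
            source = row[source_col]
            destination = row[dest_col]
        except (KeyError, TypeError, ValueError):
            # Skip rows with missing columns or a non-numeric status code.
            continue
        if 400 <= status < 500:
            found.append((source, destination, status))
    return found


# Made-up sample data standing in for the exported file.
sample = io.StringIO(
    "Source,Destination,Status Code\n"
    "https://example.com/resources/,https://example.org/gone/,404\n"
    "https://example.com/resources/,https://example.net/ok/,200\n"
    "https://example.com/blog/,https://example.org/moved/,301\n"
)
result = broken_links(csv.DictReader(sample))
for source, destination, status in result:
    print(status, destination, "linked from", source)
```

To run it on a real export, replace `sample` with a file opened via `open(path, newline="", encoding="utf-8")`.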

Here’s a quick screenshot of a dozen blog URLs I uploaded from our website and a few well-known search marketing blogs.

So that’s it, you have a list of broken links against their sources for your broken link building. You can stop reading now, but just checking for 4XX errors will mean you miss out on further opportunities to explore.

This is because URLs might not return a 404 correctly, or immediately. Quite often a URL will redirect with a 302 (or a 301 or 303) once or multiple times before reaching a final 404 status. Some URLs will also return no response at all, such as a ‘DNS lookup failed’, if the site no longer exists. So scan through the URLs under ‘Response Codes > No Response’ and check the status codes for further prospecting opportunities.
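To make the three groups concrete, here is a rough Python sketch that sorts status codes into the buckets worth reviewing. It assumes a URL with no response is recorded with a status code of 0; that convention and the `classify` helper are assumptions for illustration, so confirm how your export represents them.

```python
def classify(status_code):
    """Bucket a status code for broken link prospecting.

    Assumes 0 means the URL gave no response (e.g. a DNS lookup failure).
    """
    if status_code == 0:
        return "no_response"   # worth checking by hand
    if 300 <= status_code < 400:
        return "redirect"      # needs following to its final target
    if 400 <= status_code < 500:
        return "client_error"  # a broken link
    return "other"


# Made-up status codes for illustration.
codes = [200, 301, 302, 404, 0, 410, 500]
buckets = {}
for code in codes:
    buckets.setdefault(classify(code), []).append(code)
print(buckets)
```

Only the ‘client_error’ bucket is a confirmed broken link; the ‘redirect’ and ‘no_response’ buckets are the ones that need a further look.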

For 3XX responses, auditing these at scale is a little more involved, but quick and easy with the right process as outlined below.

1) Filter For 3XX Responses In The ‘Destination URL’ Column

Using the same ‘External Links’ spreadsheet, scan the ‘destination URLs’ list for anything unnecessary to crawl. It will undoubtedly contain links to Twitter, Facebook, LinkedIn, login URLs and so on, which all redirect. Run a quick filter on this column and mass delete all the rubbish from the list to save time crawling it.
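If the list is long, this clean-up can also be scripted. The sketch below drops any destination URL whose host is on a blocklist; the `NOISE_DOMAINS` set, the `is_noise` helper and the sample URLs are illustrative assumptions, so extend the set with whatever clutters your own list.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist; add any other domains you don't want to crawl.
NOISE_DOMAINS = {"twitter.com", "facebook.com", "linkedin.com"}


def is_noise(url, noise_domains=NOISE_DOMAINS):
    """True if the URL's host is a blocklisted domain or one of its subdomains."""
    host = (urlsplit(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in noise_domains)


# Made-up destination URLs for illustration.
urls = [
    "https://twitter.com/screamingfrog",
    "https://www.facebook.com/some-page",
    "https://example.org/old-guide/",
    "https://nottwitter.com/page",
]
kept = [u for u in urls if not is_noise(u)]
print(kept)
```

Matching on the host rather than on a substring of the whole URL avoids dropping unrelated pages that merely mention one of these names in their path.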

2) Save This New 3XX List

You’ll need this list later to potentially match back the destination URL which is 3XX’ing to its originating source URL. This is what was left in my list after cleaning up, which we need to audit.

3) Now Audit Those Redirects

Follow the process outlined in the ‘How To Audit Redirects’ guide by saving the ‘destination URLs’ into a new list and crawling them to their final target URL using the ‘always follow redirects’ configuration to discover any broken links.

The ‘All Redirects’ report will provide a complete view of the hops and display the final destination URL.

4) Match The 4XX Errors Discovered Against Your Saved 3XX List Source URLs

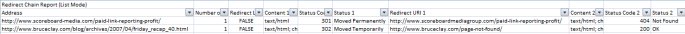

The ‘All Redirects’ report may contain 4XX errors you would have missed if you hadn’t audited the 3XX responses. For example, here are a couple more I discovered using this method –

The above contains a URL which 301s to a 404, and another which is a soft 404 (a 302 to a 200 response). With this report you can match the ‘address’ URLs in column A back to the ‘destination URLs’ and subsequent ‘source URLs’ from your saved 3XX list. In this example, both of the above come from the same blog post.
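The matching step is essentially a lookup from each redirecting address back to the pages that link to it. Here is a minimal Python sketch, assuming you have reduced the saved 3XX list to (source URL, destination URL) pairs and the redirect report to (address, final status code) pairs. The `match_back` helper, both data shapes and the sample rows are assumptions for illustration, not the layout of the actual exports.

```python
from collections import defaultdict


def match_back(saved_3xx, redirect_report):
    """Return (source, address, final_status) for redirects ending in a 4XX."""
    # Index the saved 3XX list by destination URL so lookups are quick.
    sources_by_destination = defaultdict(list)
    for source, destination in saved_3xx:
        sources_by_destination[destination].append(source)

    matches = []
    for address, final_status in redirect_report:
        if 400 <= final_status < 500:
            for source in sources_by_destination.get(address, []):
                matches.append((source, address, final_status))
    return matches


# Made-up rows for illustration.
saved_3xx = [
    ("https://example.com/blog/post/", "http://example.org/old-tool/"),
    ("https://example.com/blog/post/", "http://example.net/guide/"),
]
redirect_report = [
    ("http://example.org/old-tool/", 404),  # redirects to a 404
    ("http://example.net/guide/", 200),     # redirects to a live page
]
matched = match_back(saved_3xx, redirect_report)
print(matched)
```

Note that this only catches redirects ending in a 4XX status. A soft 404 that ends in a 200 response still needs a manual check.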

Hopefully the above process helps make broken link building more efficient. Please just let us know if you have any questions in the comments as usual.

Please Remember!

This post is specifically about using the SEO Spider for broken link building. If you’re just looking to discover broken links on a single website, read our guide on How To Find Broken Links.