Custom JavaScript

Daniel Sturrock

Posted 7 May, 2024 by Daniel Sturrock in Custom JavaScript

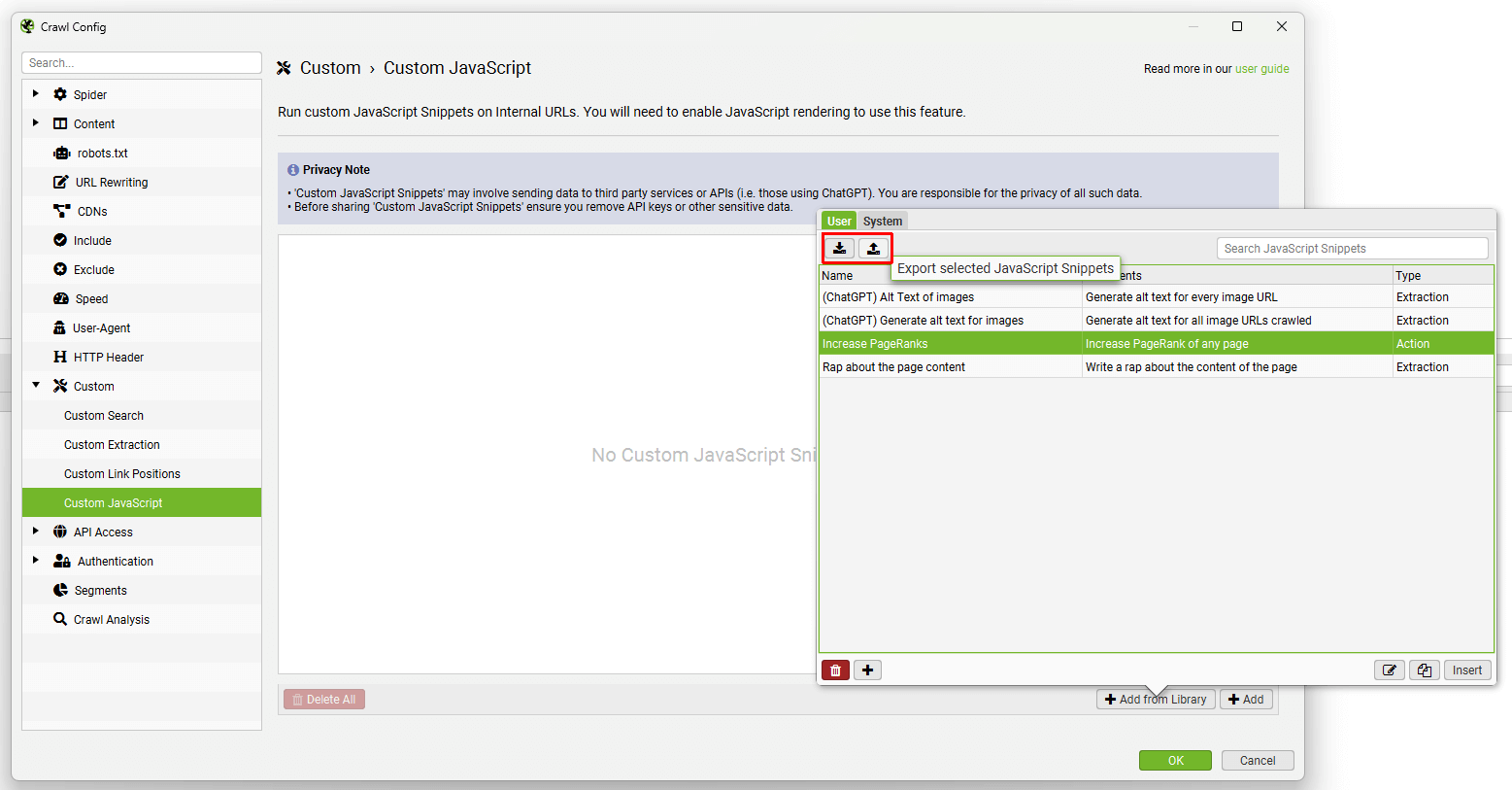

Configuration > Custom > Custom JavaScript

Privacy Note

- ‘Custom JavaScript Snippets’ may involve sending data to third-party services or APIs (e.g. those using ChatGPT). You are responsible for the privacy of all such data.

- Before sharing ‘Custom JavaScript Snippets’, ensure you remove API keys and any other sensitive data.

Introduction

Custom JavaScript allows you to run JavaScript code on each internal 200 OK URL crawled (except for PDFs).

You can extract all sorts of useful information from a web page that may not be available in the SEO Spider. You can also communicate with APIs such as OpenAI’s ChatGPT or local LLMs, and load other JavaScript libraries. Snippets can save URL content to disk and write to text files on disk.

To set up a custom JavaScript snippet, click ‘Config > Custom > Custom JavaScript’. Then click ‘Add’ to set up a new snippet, or ‘Add from Library’ to choose an existing snippet.

The library includes example snippets that show how the feature can be used and can serve as inspiration, such as:

- Sentiment, intent or language analysis of page content.

- Generating alt text for images.

- Triggering mouseover events.

- Scrolling a page (to crawl some infinite scroll setups).

- Extracting embeddings from page content.

- Downloading and saving various content locally (like images).

And much more.

You can adjust our templated snippets by following the comments in them.

You can set a content type filter so that a Custom JavaScript Snippet only executes for certain content types.

The results will be displayed in the Custom JavaScript tab.

There are two types of Snippet: Extraction and Action.

Extraction Snippets

- Extraction type Snippets return a value or a list of values (numbers or strings), which are displayed as columns in the Custom JavaScript tab. Each value in a list is mapped to its own column.

- When an Extraction Snippet executes, the page stops loading all resources and the snippet starts executing. The SEO Spider will not complete the page crawl until the snippet has completed. Long-running Snippets may time out, in which case the page will fail to be crawled.

- Extraction Snippets can also download URLs and write to text files. For example, we have a sample Snippet that downloads all images from a web page, and another that appends all adjectives on a web page to a CSV file.
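To make the column mapping concrete, here is a minimal sketch of the logic an Extraction Snippet might use to return two values at once. The helper `textStats` and its sentence-splitting rule are hypothetical and exist only for illustration. In a real snippet you would pass it the page text and return the list with `seoSpider.data()`, so each number would appear in its own column.

```javascript
// Hypothetical helper: returns [wordCount, sentenceCount] for a block of text.
// Sentences are approximated by splitting on ., ! or ? followed by whitespace
// or the end of the text, which is only a rough heuristic.
function textStats(text) {
  const trimmed = text.trim();
  if (trimmed === "") {
    return [0, 0];
  }
  const words = trimmed.split(/\s+/).length;
  const sentences = trimmed
    .split(/[.!?]+(?:\s+|$)/)
    .filter(part => part.trim() !== "").length;
  return [words, sentences];
}

// Inside a snippet this might be used as:
// return seoSpider.data(textStats(document.body.innerText));
```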

Action Snippets

- Action type Snippets do not return any data; they only perform actions. For example, we have a sample Snippet that scrolls down a web page, allowing lazy-loaded images to be crawled.

- When an Action Snippet executes, the page continues loading resources. However, you must set a timeout value in seconds for the Snippet. When the timer expires, the SEO Spider will complete the page crawl.
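The sketch below shows, under stated assumptions, what a simple scrolling Action Snippet could look like. It is not the library's scroll sample. The function `scrollInSteps` is hypothetical, and the window object is passed in as a parameter only so the logic can be tested outside Chrome. It returns nothing, as Action Snippets do, and the total scrolling time has to fit within the timeout you set.

```javascript
// Hypothetical Action Snippet sketch: scrolls to the bottom of the page a fixed
// number of times, pausing between scrolls so lazy-loaded content can load.
// The window object is passed in so the logic can be exercised outside Chrome.
function scrollInSteps(win, steps, delayMs) {
  let done = 0;
  const step = () => {
    win.scrollTo(0, win.document.body.scrollHeight);
    done += 1;
    if (done < steps) {
      win.setTimeout(step, delayMs);
    }
  };
  step();
}

// Only run when a real browser window is available (i.e. inside the SEO Spider).
// The first scroll is immediate and the other 9 follow at 1000 ms intervals,
// so the Action Snippet timeout should be at least 10 seconds.
if (typeof window !== "undefined") {
  scrollInSteps(window, 10, 1000);
}
```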

Important points to note

- You can run multiple Snippets at once. Please be aware that your crawl speed will be affected by the number and type of Snippets that you run.

- If you have multiple Snippets, all of the Action Snippets are performed before the Extraction Snippets.

- If you have multiple Action Snippets with different timeout values, the SEO Spider will use the maximum timeout value of all of the Action Snippets.

- Extraction Snippets stop the page from loading, so no further requests are made. Action Snippets don’t have this limitation, but you need to set a timeout value.

- Snippets have access to the Chrome Console Utilities API. This allows Snippets to use methods such as getEventListeners() which are not accessible via regular JavaScript on a web page. See the ‘Trigger mouseover events’ sample Snippet for an example of this.
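The ordering and timeout rules above can be summarised as a small model. This is a hypothetical illustration of the documented behaviour, not the SEO Spider's implementation. The `planSnippets` function and the `name`, `type` and `timeoutSecs` fields are invented for the example, and keeping the relative order within each group is an assumption.

```javascript
// Hypothetical model of the documented rules, not the SEO Spider's own code:
// Action Snippets run before Extraction Snippets, and the page waits for the
// longest Action Snippet timeout. Order within each group is kept as given.
function planSnippets(snippets) {
  const actions = snippets.filter(s => s.type === "action");
  const extractions = snippets.filter(s => s.type === "extraction");
  const actionTimeoutSecs = actions.length > 0
    ? Math.max(...actions.map(s => s.timeoutSecs))
    : 0;
  return {
    order: [...actions, ...extractions].map(s => s.name),
    actionTimeoutSecs,
  };
}

const plan = planSnippets([
  { name: "extract headings", type: "extraction" },
  { name: "scroll", type: "action", timeoutSecs: 10 },
  { name: "mouseover", type: "action", timeoutSecs: 5 },
]);
// plan.order: ["scroll", "mouseover", "extract headings"]
// plan.actionTimeoutSecs: 10
```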

Extraction Snippet API Usage

For Extraction Snippets, you interact with the SEO Spider using the seoSpider object, which is an instance of the SEOSpider class documented below. In its most basic form, you use it as follows:

```javascript
// Each return statement below is an alternative; a snippet returns only once.

// The SEO Spider will display '1' in a single column
return seoSpider.data(1);

// The SEO Spider will display each number in a separate column
return seoSpider.data([1, 2, 3]);

// The SEO Spider will display 'item1' in a single column
return seoSpider.data("item1");

// The SEO Spider will display each string in a separate column
return seoSpider.data(["item1", "item2"]);
```

You can also send back data to the SEO Spider from a Promise. The SEO Spider will wait for the Promise to be fulfilled. This allows you to do asynchronous work like fetch requests before returning data to the SEO Spider. For example:

```javascript
let promise = new Promise(resolve => {
  setTimeout(() => resolve("done!"), 1000);
});

// Sends "done!" to the SEO Spider after 1 second
return promise.then(msg => seoSpider.data(msg));
```
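Building on the Promise pattern, a snippet can run several requests in parallel with `Promise.all` and return one value per column. The `fetchStatuses` helper below is a hypothetical sketch. It takes the fetch function as a parameter so it can be tested with a stub, and it turns failed requests into text instead of rejecting the whole batch. Cross-origin requests made from the page may be blocked by CORS, so results for external links are not guaranteed.

```javascript
// Hypothetical helper: requests each URL in parallel and resolves to a list of
// "url status" strings, one per column. Failed requests become
// "url error: message" instead of rejecting the whole batch.
function fetchStatuses(urls, fetchFn) {
  return Promise.all(
    urls.map(url =>
      fetchFn(url, { method: "HEAD" })
        .then(response => `${url} ${response.status}`)
        .catch(error => `${url} error: ${error.message}`)
    )
  );
}

// Inside a snippet, assuming fetch is available in the page:
// const links = Array.from(document.querySelectorAll("a[href^='http']"), a => a.href).slice(0, 10);
// return fetchStatuses(links, (url, init) => fetch(url, init))
//   .then(values => seoSpider.data(values));
```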

Please Note

Note that in all of the above Extraction Snippet examples, including the Promise example, you MUST use the ‘return’ statement to pass the result back. This is because all Snippet code is implicitly wrapped by the SEO Spider in an IIFE (Immediately Invoked Function Expression), which avoids JavaScript global namespace clashes when running snippets. If you don’t return the result, the SEO Spider will not receive any data.

The example below shows how your JavaScript Snippet code is implicitly wrapped in an IIFE. It also shows how the seoSpider instance is created for you just before your code is inserted.

```javascript
(function () {
  // seoSpider object created for use by your snippet
  const seoSpider = new SEOSpider();

  // Your JavaScript Snippet code is inserted here, e.g.:
  return seoSpider.data("data");
})();
```
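The effect of the wrapper can be reproduced outside the SEO Spider. This sketch simulates the IIFE shown above with `new Function()` and a stand-in `SEOSpider` class (both hypothetical; the real class is supplied by the SEO Spider). It shows that a snippet without `return` hands back `undefined`.

```javascript
// Hypothetical stand-in for SEOSpider; the real class is supplied by the SEO
// Spider and is not reproduced here.
class SEOSpider {
  data(value) {
    return { kind: "data", value };
  }
}

// Wraps snippet source the same way as the IIFE shown above, then runs it.
// new Function() is used only to simulate the wrapping outside the SEO Spider.
function runWrapped(snippetSource) {
  const body =
    "return (function () {\n" +
    "  const seoSpider = new SEOSpider();\n" +
    snippetSource + "\n" +
    "})();";
  return new Function("SEOSpider", body)(SEOSpider);
}

const withReturn = runWrapped('return seoSpider.data("data");');
const withoutReturn = runWrapped('seoSpider.data("data");');
// withReturn is { kind: "data", value: "data" }; withoutReturn is undefined
```

Assuming the wrapper matches the one shown above, this also suggests why a snippet should not declare its own `seoSpider` variable with `const`, `let` or `var`. The name is already declared in the same function scope, so the code would fail with a SyntaxError.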

SEOSpider Methods

This class provides methods for sending data back to the SEO Spider. Do not call new on this class; an instance called seoSpider is supplied for you.

data(data)

Pass the supplied data back to the SEO Spider for display in the Custom JavaScript tab. The data parameter can be a string or a number, or a list of strings or numbers. If the data is a list, each item in the list will be shown in a separate column on the Custom JavaScript tab.

Parameters:

- data: the data to display; a string, a number, or a list of strings or numbers.

Example:

```javascript
// Get all H1 and H2 headings from the page
let headings = Array.from(document.querySelectorAll("h1, h2"))
  .map(heading => heading.textContent.trim());

return seoSpider.data(headings);
```
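Because each item in a list is shown in its own column, a page with many headings can produce a very wide tab. Below is a minimal sketch of one option: a hypothetical `toColumnValues` helper that trims, de-duplicates and caps the list before it is passed to `seoSpider.data()`. The cap of 20 in the usage comment is an arbitrary example.

```javascript
// Hypothetical helper: prepares a list for seoSpider.data() by trimming string
// items, dropping empty strings and duplicates, and capping the column count.
function toColumnValues(items, maxColumns) {
  const seen = new Set();
  const result = [];
  for (const item of items) {
    const value = typeof item === "string" ? item.trim() : item;
    if (value === "" || seen.has(value)) {
      continue;
    }
    seen.add(value);
    result.push(value);
    if (result.length === maxColumns) {
      break;
    }
  }
  return result;
}

// Inside a snippet:
// const texts = Array.from(document.querySelectorAll("h1, h2"), h => h.textContent);
// return seoSpider.data(toColumnValues(texts, 20));
```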

error(msg)

Pass back any error messages to the SEO Spider. These messages will appear in a column of the Custom JavaScript tab.

Parameters:

- msg: the error message to display in the Custom JavaScript tab.

Example:

```javascript
// functionThatReturnsPromise() is a placeholder for your own asynchronous code
return functionThatReturnsPromise()
  .then(success => seoSpider.data(success))
  .catch(error => seoSpider.error(error));
```
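Long-running Extraction Snippets may time out and cause the page to fail to crawl. One option is to put your own time limit on slow asynchronous work and report the failure through `seoSpider.error()`. The `withTimeout` helper below is a hypothetical sketch using `Promise.race`. It only stops waiting and does not cancel the underlying request.

```javascript
// Hypothetical helper: rejects if the wrapped promise has not settled within
// ms milliseconds, so a slow request produces an error message instead of
// running until the snippet times out.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((resolve, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Inside a snippet (the URL and the 5000 ms limit are example values):
// return withTimeout(fetch("https://example.com/api"), 5000)
//   .then(response => seoSpider.data(response.status))
//   .catch(error => seoSpider.error(error.message));
```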

saveText(text, saveFilePath, shouldAppend)

Saves the supplied text to saveFilePath.

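Parameters:

- text: the text to save.
- saveFilePath: the path of the file to save the text to.
- shouldAppend: a boolean; set it to true to append the text to the file.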

Example:

```javascript
return seoSpider.saveText('some text', '/Users/john/file.txt', false);
```
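A common use of `saveText` with `shouldAppend` is building a CSV file with one row per crawled page. The sketch below shows a hypothetical `toCsvRow` helper that quotes fields containing commas, quotes or line breaks, following the usual CSV convention. It assumes that passing `true` as `shouldAppend` adds to the end of the file. The output path is an example value.

```javascript
// Hypothetical helper: builds one CSV row, quoting fields that contain commas,
// quotes or line breaks and doubling any embedded quotes.
function toCsvRow(fields) {
  return fields
    .map(field => {
      const text = String(field);
      return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
    })
    .join(",");
}

// Inside a snippet, assuming shouldAppend = true appends to the file:
// const h1 = document.querySelector("h1");
// const row = toCsvRow([location.href, h1 ? h1.textContent.trim() : ""]);
// return seoSpider.saveText(row + "\n", "/Users/john/h1s.csv", true);
```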

saveUrls(urls, saveDirPath)

Downloads the supplied list of URLs and saves each of them to the saveDirPath.

Parameters:

- urls: a list of URLs to download.
- saveDirPath: the directory to save the downloaded files in.

Example:

```javascript
return seoSpider.saveUrls(['https://foo.com/bar/image.jpeg'], '/Users/john/');
```

Note:

Each URL supplied in the ‘urls’ parameter is saved in a directory structure that follows the URL’s scheme, host and path. For example, given the URL from the example above:

'https://foo.com/bar/image.jpeg'

and a ‘saveDirPath’ of:

'/Users/john/'

the file will be saved to:

'/Users/john/https/foo.com/bar/image.jpeg'
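The folder mapping in the note can be expressed as a short function, which may help when predicting where downloaded files will end up. The `expectedSavePath` helper is hypothetical and only mirrors the documented example. How query strings, ports or URLs ending in `/` are actually handled is not documented; this sketch simply drops the query string and port.

```javascript
// Hypothetical helper mirroring the folder structure described above:
// saveDirPath + scheme + host + path. Query strings and ports are dropped,
// which is an assumption, not documented behaviour.
function expectedSavePath(url, saveDirPath) {
  const u = new URL(url);
  const scheme = u.protocol.replace(/:$/, "");
  const dir = saveDirPath.replace(/\/+$/, "");
  return `${dir}/${scheme}/${u.hostname}${u.pathname}`;
}

// expectedSavePath("https://foo.com/bar/image.jpeg", "/Users/john/")
// returns "/Users/john/https/foo.com/bar/image.jpeg"

// Collecting image URLs to pass to saveUrls inside a snippet might look like:
// const srcs = Array.from(document.images, img => img.currentSrc || img.src)
//   .filter(src => src.startsWith("http"));
// return seoSpider.saveUrls(srcs, "/Users/john/");
```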

loadScript(src) → {Promise}

Loads external scripts for use by the Snippet. The script loads asynchronously. You write your code inside the ‘then’ clause as shown in the example below.

Parameters:

- src: the URL of the script to load.

Example:

```javascript
return seoSpider.loadScript("your_script_url")
  .then(() => {
    // The script has now loaded, you can start using it from here
    // ...

    // Return data to the SEO Spider (your_data is a placeholder)
    return seoSpider.data(your_data);
  })
  .catch(error => seoSpider.error(error));
```
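If one external library depends on another, the scripts need to load in sequence. A minimal sketch follows, assuming `seoSpider.loadScript()` behaves as described above (returning a Promise). The `loadScriptsInOrder` helper and the script URLs are hypothetical.

```javascript
// Hypothetical helper: loads scripts one after another, waiting for each to
// finish before starting the next, for libraries that depend on one another.
// `load` is any function returning a Promise, e.g. src => seoSpider.loadScript(src).
function loadScriptsInOrder(load, srcs) {
  return srcs.reduce(
    (chain, src) => chain.then(() => load(src)),
    Promise.resolve()
  );
}

// Inside a snippet (the script URLs are placeholders):
// return loadScriptsInOrder(src => seoSpider.loadScript(src),
//                           ["first_script_url", "second_script_url"])
//   .then(() => seoSpider.data("scripts loaded"))
//   .catch(error => seoSpider.error(error));
```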

Share Your Snippets

You can set up your own snippets, which will be saved in your user library, and then export/import the library as JSON to share with colleagues.

JavaScript snippets can also be saved in your configuration.

Please do not forget to remove any sensitive data, such as API keys, prior to sharing with others.

Debugging Snippets

When using Custom JavaScript, you may encounter issues with preset JavaScript snippets or your own custom JavaScript that require debugging.

Please read our How to Debug Custom JavaScript Snippets tutorial, which walks you through the debugging process and common errors.

Snippet Support

Due to the technical nature of this feature, unfortunately we are not able to provide support for writing and debugging your own custom JavaScript snippets.