How Accurate Are Website Traffic Estimators?

Patrick Langridge

Posted 13 June, 2016 by Patrick Langridge in SEO


If you’ve worked at an agency for any significant amount of time, and particularly if you’ve been involved in forecasting, proposals or client pitches, you’ve likely been asked at least one of (or a combination or amalgamation of) the following questions:

- How much traffic can I expect to receive?

- How long until I see X amount of organic visits?

- What traffic will I receive from X investment?

- What organic opportunity is available within our industry?

- How much traffic do my competitors receive?

Forecasting is notoriously difficult, and done badly it can be misleading or even damaging. A myriad of assumptions, caveats and uncontrollable factors can mean that any prediction is little more than a finger-in-the-air educated estimate. Organic forecasting is difficult enough that writing about and explaining it would give me sleepless nights (Kirsty Hulse wrote a better post than I ever could on the subject), so I decided to focus on questions 4 and 5 of those listed above.

Imagine this very realistic (or even familiar) scenario: a client wants to know how much traffic their competitor receives, what the potential size and opportunity of their vertical is, and how to fulfil that potential by increasing visibility and acquiring more traffic. Before you can accurately and insightfully answer the trickier final point, it would be useful to know what you’re competing against. Assuming you don’t have access to your client’s competitors’ analytics data, it would be useful to get an idea of their organic performance, and ideally to have confidence that the data you’re looking at is at least solid.

There are a number of really great visibility tools we use at Screaming Frog (Searchmetrics and Sistrix to name a couple of favourites), but these tools choose not to speculate on traffic, instead estimating visibility based on ranking position and keyword volume/value (which doesn’t necessarily correlate with traffic). There are also a number of traffic estimator tools which do speculate on traffic, as well as some well-known SEO tools with components in their suites that do the same, which got us wondering a few things:

- How accurate are traffic estimator tools?

- Do they generally under or overestimate traffic?

- Are there types of websites where their estimations are more accurate than others?

- Are there potential reasons behind this under/overestimation?

- Are there potential learnings for search marketers?

The Test

We wanted to put their accuracy to the test, so here’s what we did:

- We took organic visit data for a range of 25 websites we have access to via Google Analytics, for the months of February, March and April 2016 (January can often be an outlier for many websites, so we selected the most recent 3-month period of relative stability). We looked at UK organic traffic only (the 25 sites selected are generally, but not exclusively, UK focused; some target multiple territories or even worldwide), because some of the traffic estimator tools segment traffic by region and don’t always cover every territory. Similarly, not all the tools we used in the test deal especially well with subdomains, so we selected root domains in our analysis.

- While we can’t disclose the websites selected, to ensure as even a test as possible within what is a fairly small sample size, we specifically selected sites that covered a range of verticals, target audiences and purposes (more on that breakdown to come). For the same reason, we also selected sites that covered a range of different traffic levels, from those which receive millions of organic visits each month, to those with just hundreds of visits. We hoped this varied selection might show trends where certain tools are more or less accurate at estimating certain types of websites’ traffic levels.

- We analysed these 25 websites using 3 tools – SimilarWeb, Ahrefs and SEMrush. We recorded organic traffic estimate numbers for each of the 25 websites, focusing on exclusively UK traffic to match up with our GA data.

- We measured actual traffic against estimated traffic for each of the 3 tools. We measured this in a number of different ways –

- Visits difference for each site using each tool.

- Percentage of visits difference for each site using each tool.

- Overall visits difference for each tool.

- Percentage of visits difference for each tool.

- Average percentage difference for each tool.
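The measures listed above can be sketched in a few lines of Python. This is only an illustration of the arithmetic: the site names and visit numbers are hypothetical, not the sites or figures from the test, and the helper name `pct_difference` is made up for this example.

```python
# Hypothetical figures for illustration only; not the data from this test.
actual = {"Site A": 120_000, "Site B": 8_000, "Site C": 1_500_000}
estimated = {"Site A": 90_000, "Site B": 12_000, "Site C": 1_350_000}


def pct_difference(est, act):
    """Signed percentage difference of an estimate against the actual figure."""
    return (est - act) / act * 100


# Visits difference and percentage difference for each site (one tool).
per_site = {
    site: (estimated[site] - actual[site], pct_difference(estimated[site], actual[site]))
    for site in actual
}

# Overall visits difference and percentage difference for the tool.
total_actual = sum(actual.values())
total_estimated = sum(estimated.values())
overall_diff = total_estimated - total_actual
overall_pct = pct_difference(total_estimated, total_actual)

# Average of the per-site percentage differences for the tool.
average_pct = sum(pct for _, pct in per_site.values()) / len(per_site)

print(per_site)
print(overall_diff, round(overall_pct, 1), round(average_pct, 1))
```

Note that the overall percentage and the average percentage can differ a lot, because the overall figure is dominated by the largest sites while the average treats every site equally.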

Predictions

Before sharing the results I’ll share my one real prediction: the tools would almost certainly underestimate organic traffic. This is because these traffic estimator tools have limited indexes and only track a certain number of keywords, so they can’t be expected to estimate traffic completely accurately. Most don’t handle the long tail well as they simply don’t have the keyword bandwidth to do so. Furthermore, the tools, much like standard forecasting CTR modelling, also assume visit numbers from ranking position and keyword volume – they *don’t* consider keyword intent, brand vs non-brand, Google answer boxes, 9-pack & 7-pack results, the Knowledge Graph etc.
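To make the reasoning concrete, here is a minimal sketch of a position-and-volume model of the kind described above. It is not how any of the tools actually work; the CTR values, keywords and volumes are all assumptions invented for illustration.

```python
# Assumed click-through rates by ranking position (illustrative values only).
ASSUMED_CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}

# Hypothetical tracked keywords: (keyword, monthly search volume, ranking position).
tracked_keywords = [
    ("blue widgets", 10_000, 1),
    ("buy blue widgets", 2_000, 3),
    ("widget repair near me", 500, 12),  # position not in the assumed curve
]


def estimate_visits(keywords, ctr_by_position):
    """Sum volume * CTR over tracked keywords; unknown positions contribute 0."""
    total = 0.0
    for _keyword, volume, position in keywords:
        total += volume * ctr_by_position.get(position, 0.0)
    return total


estimated_visits = estimate_visits(tracked_keywords, ASSUMED_CTR_BY_POSITION)
print(estimated_visits)
```

Any keyword the tool does not track simply never enters the sum, which is one reason a model like this would be expected to underestimate sites with a lot of long tail traffic.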

The Data

SEMrush

SimilarWeb

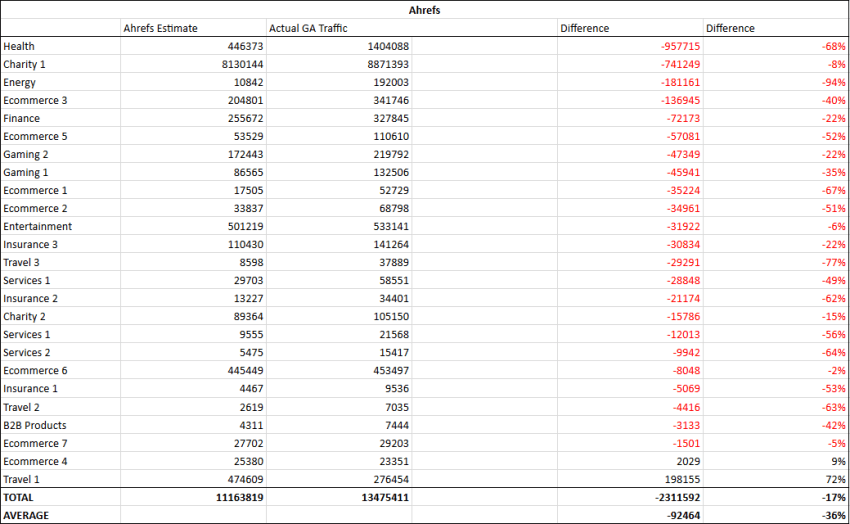

Ahrefs

You can access the data here.

The Results

- Overall, the most accurate tool analysed was SimilarWeb, which on average overestimated organic traffic by 1%. It overestimated total visit numbers by 17%, estimating 15.7m visits for the 25 websites, compared to the 13.4m actual. SimilarWeb was the only tool to generally overestimate traffic. Ahrefs was the next most accurate, underestimating total traffic for all sites by 17% (11.1m estimated visits compared to 13.4m actual visits), and on average underestimating traffic by 36%. SEMrush underestimated total traffic for all sites by 30% (9.4m estimated visits compared to 13.4m actual visits), and on average underestimated traffic by 42%.

- The site with the highest actual traffic (‘Charity 1’, 8.8m actual visits) was estimated very differently by the three tools: SimilarWeb – 12m estimated visits (+36%), Ahrefs – 8.1m estimated visits (-8%), SEMrush – 6.4m estimated visits (-27%). Generally speaking, Ahrefs was the most accurate tool for estimating traffic of high traffic websites.

- The most underestimated websites for each tool were: ‘Charity 1’ by SEMrush (2.4m visits difference), ‘Health’ by SimilarWeb (725k visits difference), and ‘Health’ by Ahrefs (957k visits difference). The largest percentage underestimation was ‘Energy’ by Ahrefs, which underestimated traffic by 94%. The most overestimated websites for each tool were: ‘Travel 1’ by SEMrush (132k visits difference), ‘Charity 1’ by SimilarWeb (3m visits difference), and ‘Travel 1’ by Ahrefs (198k visits difference). The largest percentage overestimation was ‘B2B Products’ by SimilarWeb, which overestimated traffic by 128%.
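As a quick check of the headline totals, the percentages can be reproduced from the rounded figures quoted in the results (13.4m actual visits against each tool’s estimated total). The variable names are just for this sketch.

```python
# Totals quoted in the results above, in millions of visits.
actual_total_m = 13.4
estimated_total_m = {"SimilarWeb": 15.7, "Ahrefs": 11.1, "SEMrush": 9.4}

# Signed percentage difference of each tool's total against the actual total.
total_pct_diff = {
    tool: round((est - actual_total_m) / actual_total_m * 100)
    for tool, est in estimated_total_m.items()
}

print(total_pct_diff)  # {'SimilarWeb': 17, 'Ahrefs': -17, 'SEMrush': -30}
```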

Further Analysis

- All three tools significantly underestimated traffic to ‘Health’, all by at least 500k visits. This site receives a lot of traffic from longer tail, location-specific phrases, most of which are unlikely to have been picked up by the tools. Generally, the most accurately estimated sites were e-commerce, but there were many more of this type of site than any other. The most accurately estimated site across all three tools was ‘Ecommerce 7’, on average just 2% under actual traffic levels.

- ‘Travel 1’ was significantly overestimated by Ahrefs and SEMrush, by 72% and 48% respectively. Anecdotally, the site in question competes well for visibility in very competitive search results that are dominated by big brands, but it is not an established brand itself. This high overestimation suggests that, despite good visibility, the site isn’t getting sufficient clicks from the search results, due in part to a lack of brand presence.

- Generally, sites with lower traffic levels were less accurately estimated. There were three sites with under 10k organic visits (‘Insurance 1’, ‘Travel 2’ and ‘B2B Products’), and almost every estimation by each tool was at least 40% incorrect (either over or under). Only SimilarWeb got close to estimating traffic for ‘Travel 2’, underestimating by 6%.

Final Thoughts

Our test is by no means a thorough mathematical or scientific experiment, merely a quick test to try and gauge the accuracy of such tools. There are a number of ways we could improve or increase our test, including but not limited to:

- Increase the number of traffic estimator tools analysed.

- Increase the number of websites analysed.

- Use more precise analytical data (GA numbers tend to always come with a pinch of salt).

- Use an even number of website types (or analyse just a single vertical and website type).

- Increase the number of territories to US, Europe or worldwide.

Our rather limited test is just the tip of the iceberg, but has hopefully shown that all traffic estimators have strengths and weaknesses, and their level of accuracy can vary quite considerably.

Interesting. It would also be interesting to report the standard deviation between the three sets. This would help to understand the spread of inaccuracy. The data to do that is all in the article, but as the data is in image form, it’s a ball ache to turn it back into data. Any chance of doing that?

Cheers Dixon. Have updated the post to include a link to the data (https://docs.google.com/spreadsheets/d/1GeGsD5p7s57kMIOs8aFwgYNWZxnfRAV3eNs8o7rUy64/edit#gid=0) which was even more of a ball ache to try and embed!

I love this analysis though I do wish you had thrown in one more competitor to SimilarWeb since they are collecting much different data than SEMRush and Ahrefs: Compete.com.

Since SimilarWeb and Compete are direct competitors and run rather expensive API’s it would be nice to know which horse to pick for that real user data.

Thanks Tony! Hadn’t heard of Compete.com but it looks really cool. I’ll investigate!

From the compete.com website:

Thank you from the Compete team

As of December 31, 2016, Compete.com and the Compete PRO platform have been shut down. It has been an honor and pleasure to serve you over the past 15 years and we thank you for your patronage. It’s our sincere hope that our data and services have helped you to grow your business.

Thank you for your support, The Compete team

Hi Dixon, Hi Patrick,

thank you very much for this useful data Patrick!

If my calculations are correct, then the standard deviations are as follows: SEMrush 26%, SimilarWeb 30%, Ahrefs 23%. Is it correct?

Greetings from Germany to the UK and I hope to see you again, soon.

Juan

Thanks Juan, that looks correct to me!

Cheers,

Patrick

Hi Patrick,

My name is Pavel, and I’m Product Owner at SEMrush.

Thank you for your research, it definitely gave us some food for thought. We are constantly working on improving the quality of our product.

But, to be honest, the results you got in this research prove that it’s incorrect to rely on the absolute numbers that all of these tools provide.

The main purposes of such tools are: See trends, compare performance, spy on competitors’ strategies.

So,

– The data should be considered as a snapshot of a constantly changing environment.

– if you compare two competing websites – then their relative performance can be judged very accurately.

Best regards,

Pavel

Hi Pavel,

Thanks for your feedback. Completely agree with you, as mentioned in the post it was my expectation that absolute numbers wouldn’t match, this was just a test to see how close each tool was.

Thanks,

Patrick

Very interesting research!

These are indeed questions we get a lot from current and new SEO clients, and they are quite difficult to answer. This method and the use of these tools make it a bit easier and mostly more reliable to predict traffic opportunity.

Like you mention, this is just the tip of the iceberg and further research would be nice. Though I also hear from experts that SimilarWeb is the best for this purpose.

Cheers,

Arnoud

There is another traffic estimator worth mentioning: http://www.visitorsdetective.com/ I use it on a daily basis.

Patrick, great insight!

So, what would you recommend to use in the end? SimilarWeb, Semrush, Ahrefs?

Is it only for organic traffic? Are there any good tools for all traffic?

Thanks!

Thanks Andrew! We found that SimilarWeb is on average the most accurate, but all had varying degrees of accuracy across the board! Short answer; take them all with a big pinch of salt ;)

Great test Patrick! You made me go and test out all three tools against Google Analytics for the three websites I manage; I also used WordPress stats. The websites are for SMBs that operate in specific niches, so they don’t get tons of traffic. The differences were almost 50% for Ahrefs and SimilarWeb, while SEMrush gave no results (which I attribute to the small amount of traffic for the websites). WordPress stats were the same as GA.

Thanks Theodor, interesting to hear about your experience and comparisons too!

I think it is an interesting analysis which verifies that these tools are largely useless. I do not care whether the average is within 20% accuracy, but whether I can rely on it to estimate one website accurately or not. With any of these tools I have no clue whether it is -50% or +100%, which means it is useless. That I see agencies and other companies out there using these tools for anything is beyond me. What is the value in a number that is completely wrong to anyone but someone who charges money to find it?

Thanks Ulrik! I agree that it’s frustrating that the tools aren’t always super accurate, but to be fair to them, they don’t claim to be exactly accurate in terms of these measurements. They are principally there to show trends and patterns, to give users a rough overview of site performance. I agree though, that in some cases they seem to be way off!

These “metrics” and/or “analytical” oriented sites are useless! None of them are accurate! I tried about 4 different kinds and one said my site was down in traffic, another said it was up in traffic, one said that my traffic was about 22K per month, another said it’s 1.6k, then the other said it’s 5000. I mean, which is it?! The insights from within my Bigcommerce website seem the most consistent with my Google Analytics, so I feel that Google Analytics is my best bet for checking how my most popular landing pages are doing; it even tells me where people click the most. All these other sites are useless. Why do we have so many of these sites now? Probably because anytime we feel the info is false, we look to another that will give us the answer. Don’t get me started on Alexa.

I know I’m a little late here, but thank you for this! I’ve been pulling out my hair trying to figure out the best tool. I’ll have to give SimilarWeb a try. Thanks!

Great article. The last few days I have been trying to find out traffic for different sites using SEMrush and SimilarWeb, and it seems that results from SEMrush are underestimated and those from SimilarWeb are way overestimated. No doubt, it’s all a crapshoot and we just have to roll the dice on which tool we think will benefit us the most.

To me the average value has no real value. Let’s take Similarweb for instance. So by adding up the negatives and positives you end up getting 1%. But in fact overestimating is just as bad as underestimating. I would be much more interested in the delta values. From my calculations Similarweb on average was wrong by 37% (unless I made a typo when putting it in my calculator).

Thanks for that Kim, really useful. Looking forward to your own study on this, do let me know when it goes live.

There’s no need for a new study. I’m not discrediting the work you put behind this either. I’m simply pointing out that the average value alone is not very helpful. If one were to only look at the average values, he would conclude that Similarweb is roughly 40 times more accurate than Semrush, which really isn’t the case.

Based on your numbers the delta values are: Semrush = 48.6% Similarweb = 37.4% Ahrefs = 42.2%.
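The point made in this thread, that a signed average lets overestimates and underestimates cancel out, can be illustrated with a short sketch. The percentage errors below are invented for the example and are not taken from the spreadsheet.

```python
from statistics import mean

# Hypothetical signed percentage errors for one tool across four sites.
signed_pct_errors = [60, -55, 40, -41]

# Signed mean: positive and negative errors cancel each other out.
signed_average = mean(signed_pct_errors)

# Mean absolute percentage error: every miss counts, whatever its direction.
absolute_average = mean(abs(e) for e in signed_pct_errors)

print(signed_average, absolute_average)  # 1 49
```

In this made-up case the tool looks only 1% off on average, even though each individual estimate misses by 40% or more, which is what the absolute figure captures.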

Thank you for putting this data together, as it is interesting to have a reflection on real results and estimated ones. Nonetheless, it is statistical nonsense to calculate an average percentage of error. Important considerations like “is estimated traffic accuracy linked to higher or lower traffic of a site” are missing.

And be aware that, for an individual website, it makes a huge difference whether it is 50% over or under reported. This may make or break a business.

Great article Patrick! One thing that I would improve is the methodology. If SimilarWeb is usually wrong by ~30 – 130%, we can’t say that its accuracy is 1%. I think that once you consult somebody with a background in methodology/statistics, you will see results that are even more interesting.

Thanks Patrick.

Found this article during research for traffic prediction methodology. I would say all these data sources are similar.

I calculated the average error margin based on your data, and here are the results:

SEMRush 48%, SimilarWeb 37% and Ahrefs 42%.

With such a small data sample it is difficult to judge how accurate these metrics are, but you can use GA benchmarks to learn a little more about averages for a vertical and compare this data with external sources.

Great work on the comparison table, thanks.

Interesting, and thanks for sharing, Patrick! But do you know that even GA would not be the best source for knowing the actual traffic? It always reports a higher number of “actual visits” compared to the actual visits recorded at our web server end.

Thanks Martin! Yeah that’s correct, obviously GA will also sample data, so it is never completely accurate.

Hi, Martin,

Forgive the delay in this question and I hope you are still participating in this forum. Just out of curiosity, but how much did GA data differ from your server?

Best regards,

Arthur

Hi Patrick,

Thanks for diving into this topic. It’s worth noting that with SEMrush and Ahrefs it’s not possible to get estimates without giving up some of your personal information or starting a free trial. Similarweb will give 6 months for free but it is nearly impossible to get an account for longer durations (and incredibly expensive if you are lucky enough to be chosen).

Website IQ (https://www.websiteiq.com/) offers 9 months of free website traffic estimates (desktop only) without signing up for anything (really free). The site has 8 years of traffic data that is available with an inexpensive subscription.

Hi Patrick,

the averages maths doesn’t check out, as both overestimates and underestimates are inaccuracies. By simply calculating the average you are cancelling negative inaccuracies with positive inaccuracies. Looking at SimilarWeb, it’s NOT the case that the tool is only 1% inaccurate on average.

For example, SimilarWeb was accurate to 1% only 1 time out of 25, which means it was inaccurate 96% of the time. To compare accuracy, in this case, I’d have thought to use the mode vs. the mean. I’m sure someone else (probably more advanced in statistics!) can offer a better insight.

Thank you for uploading the raw data! Looking at the tables, it shows that all the platforms are talking utter nonsense!

Steve

Thank you for the study, surprisingly there’s not a lot of those out there.

From my experience, those tools are best used to benchmark similar competitors and are not as interesting once you start comparing traffic across audience segments (localization/industries/etc).

I suspected that SEMrush is not that accurate and you brought in great data to confirm it. Thanks a lot for your effort. Would you know why Cloudflare shows more visits than Google Analytics?

Isn’t there any 2019 updated research?

Hi Tony

Nice information, thanks a lot

It’s a good guide for beginners

Hi Patrick. I started typing an answer but then realized that others had already made similar comments… Using the raw data (thanks for the access): SimilarWeb remains the best, with an average variation of 37% (average of individual averages, in absolute terms), against 42% for Ahrefs and 49% for SEMrush. But SimilarWeb has a more balanced behaviour: it overestimated in 40% of the cases (8% for Ahrefs and 12% for SEMrush). And this is not good. Because, as you rightly state, we use these tools to compare trends. When comparing my site with a competitor, it is more likely with SimilarWeb that they will be overestimated when I am underestimated. In other words, the “shape” of the mistake is more consistent with Ahrefs or SEMrush, making comparisons more meaningful.

So net net, Ahrefs is a better tool for comparisons using this logic (lower individual variation, more consistent).

To be even more precise, one needs to compare by how much, in absolute percentage, the variation varies from the average variation. Using this, you get to the same conclusion: the Ahrefs standard deviation is only 16% (SEMrush 20%, SimilarWeb 22%). Argh… I keep using SimilarWeb! Might change horse.
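The two consistency measures discussed in this comment, the share of overestimates and the spread of the absolute errors, can be sketched as follows. The errors are hypothetical, and the use of the population standard deviation is an assumption, since the comment does not say which variant was used.

```python
from statistics import mean, pstdev

# Hypothetical signed percentage errors for one tool across five sites.
signed_pct_errors = [-50, -30, 20, -40, -10]

absolute_errors = [abs(e) for e in signed_pct_errors]

# Average variation in absolute terms.
average_variation = mean(absolute_errors)

# Spread of the absolute errors around that average
# (population standard deviation, an assumption for this sketch).
spread = pstdev(absolute_errors)

# Share of sites that were overestimated, as a percentage.
overestimate_share = sum(1 for e in signed_pct_errors if e > 0) / len(signed_pct_errors) * 100

print(average_variation, round(spread, 1), overestimate_share)
```

A tool with a low spread and a one-sided error direction is wrong in a more predictable way, which is the property that matters when comparing two sites against each other rather than reading absolute numbers.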


My website traffic, for the month of Feb 2023

SimilarWeb – 156K

Semrush – 48K

Actual GA – 24K

My site is hosted on SiteGround, and it shows Unique Visitors – 54047.

Why is there such a huge difference? Is SiteGround sending my website traffic to another unknown site, or are they just eating my site traffic? I have doubts about them now.

If anyone has any information on it, please explain.

Because of the old law of poop in and poop out, with some wildly speculative estimation (sorry, proprietary AI driven machine learning blah blah) added in the middle. There’s only one group who could ever give you sensible traffic estimates sans scripts and machine chatter, and that’s the biggest CDNs. Sadly they have not thought to tie that to their client pool and social profiles etc. as yet, as they’re too busy thinking they’re IT, not marketing.

You have my email and address around the corner my Froggie friends :)