Command line interface

Dan Sharp

Posted 19 September, 2018 by Dan Sharp


You can operate the SEO Spider entirely from the command line.

This includes launching, full configuration, saving, and exporting almost any data and reports. The SEO Spider can also be run headless (without a user interface) using the CLI.

This guide provides a quick overview of how to use the command line on each of the three supported operating systems, and the arguments available.

Windows

macOS

Linux

Command Line Options

Troubleshooting

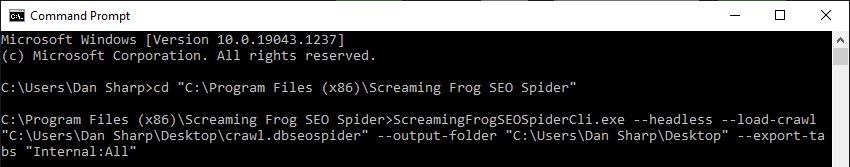

Windows

Open a command prompt (click the Start button, then type ‘cmd’, or search programs and files for ‘Windows Command Prompt’). On 64-bit Windows, move into the default SEO Spider installation directory by entering:

cd "C:\Program Files (x86)\Screaming Frog SEO Spider"

Or, on 32-bit Windows:

cd "C:\Program Files\Screaming Frog SEO Spider"

If you installed the SEO Spider elsewhere, move into that folder instead.

On Windows, there is a separate build of the SEO Spider called ScreamingFrogSEOSpiderCli.exe (rather than the usual ScreamingFrogSEOSpider.exe). It can be run from the Windows command line and behaves like a typical console application. To view all arguments and see all logging output in the console, type:

ScreamingFrogSEOSpiderCli.exe --help

You can also type any of the following to see the full list of arguments available for each export type:

--help export-tabs, --help bulk-export, --help save-report, or --help export-custom-summary

To auto start a crawl:

ScreamingFrogSEOSpiderCli.exe --crawl https://www.example.com

Additional arguments can then be appended, separated by spaces.

For example, the following runs the SEO Spider headless, saves the crawl, writes the output to your desktop, and exports the ‘Internal’ tab (All filter) and the ‘Response Codes’ tab (Client Error (4xx) filter).

ScreamingFrogSEOSpiderCli.exe --crawl https://www.example.com --headless --save-crawl --output-folder "C:\Users\Your Name\Desktop" --export-tabs "Internal:All,Response Codes:Client Error (4xx)"
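If you schedule crawls from a script rather than typing them, passing the arguments as a list avoids hand-quoting paths that contain spaces. The following is a minimal Python sketch, not part of the SEO Spider itself: the helper names build_crawl_args and run_crawl are hypothetical, and the executable path assumes the 64-bit Windows installation directory from the first cd command above.

```python
import subprocess

# Assumption: the default install location used in the first cd command above.
CLI_EXE = r"C:\Program Files (x86)\Screaming Frog SEO Spider\ScreamingFrogSEOSpiderCli.exe"


def build_crawl_args(url, output_folder, export_tabs, exe=CLI_EXE):
    """Return the argument list for a headless crawl that saves and exports tabs."""
    return [
        exe,
        "--crawl", url,
        "--headless",
        "--save-crawl",
        "--output-folder", output_folder,
        # All tab:filter pairs travel as ONE comma separated argument.
        "--export-tabs", ",".join(export_tabs),
    ]


def run_crawl(args):
    """Run the CLI and wait for it to finish.

    check=True raises CalledProcessError on a non-zero exit code; treating a
    non-zero exit as failure is an assumption, not documented behaviour.
    """
    return subprocess.run(args, check=True)
```

For example, run_crawl(build_crawl_args("https://www.example.com", r"C:\Users\Your Name\Desktop", ["Internal:All", "Response Codes:Client Error (4xx)"])) corresponds to the command above. Because each list element reaches the program as one argument, no quotation marks need to be typed.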

To load a saved crawl:

ScreamingFrogSEOSpiderCli.exe --headless --load-crawl "C:\Users\Your Name\Desktop\crawl.dbseospider"

The crawl must be an absolute path to either a .seospider or .dbseospider file.
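A script can check this requirement before launching the SEO Spider. A minimal Python sketch using only the standard library's pathlib; check_crawl_file is a hypothetical helper name, and it validates Windows-style paths only.

```python
from pathlib import PureWindowsPath

# Crawl file types that --load-crawl accepts, per the text above.
CRAWL_SUFFIXES = {".seospider", ".dbseospider"}


def check_crawl_file(path):
    """Raise ValueError unless path is an absolute Windows path to a crawl file."""
    p = PureWindowsPath(path)
    # is_absolute() needs both a drive and a root, so "C:crawl.seospider" fails too.
    if not p.is_absolute():
        raise ValueError(f"--load-crawl needs an absolute path, got {path!r}")
    if p.suffix.lower() not in CRAWL_SUFFIXES:
        raise ValueError(f"expected a .seospider or .dbseospider file, got {path!r}")
    return str(p)
```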

Loading a crawl on its own isn’t useful, so additional arguments are required to export data. For example, the following exports the ‘Internal’ tab to the desktop:

ScreamingFrogSEOSpiderCli.exe --headless --load-crawl "C:\Users\Your Name\Desktop\crawl.dbseospider" --output-folder "C:\Users\Your Name\Desktop" --export-tabs "Internal:All"

See the Command Line Options section below for the full list of arguments you can supply to the SEO Spider.

macOS

Open Terminal, found in the Utilities folder within the Applications folder, or open it directly by searching for ‘Terminal’ in Spotlight.

There are two ways to start the SEO Spider from the command line: the open command or the ScreamingFrogSEOSpiderLauncher script. The open command returns immediately, so you can close the Terminal afterwards. The ScreamingFrogSEOSpiderLauncher script logs to the Terminal until the SEO Spider exits, and closing the Terminal kills the SEO Spider.

To start the UI using the open command:

open "/Applications/Screaming Frog SEO Spider.app"

To start the UI using the ScreamingFrogSEOSpiderLauncher script:

/Applications/Screaming\ Frog\ SEO\ Spider.app/Contents/MacOS/ScreamingFrogSEOSpiderLauncher

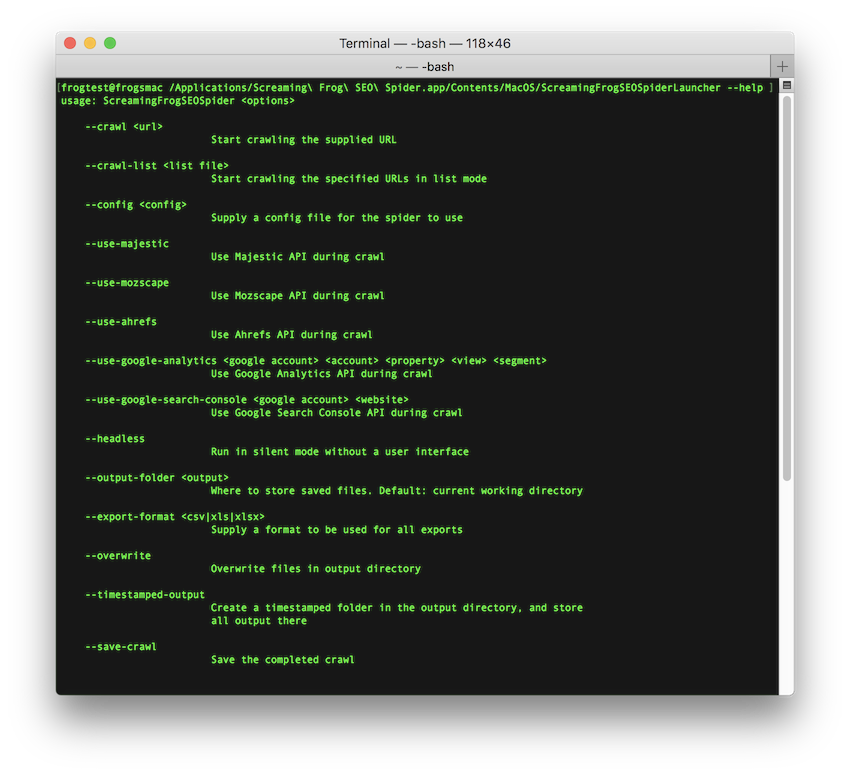

To see a full list of the command line options available:

/Applications/Screaming\ Frog\ SEO\ Spider.app/Contents/MacOS/ScreamingFrogSEOSpiderLauncher --help

The following examples show both ways of launching the SEO Spider.

To open a saved crawl file:

open "/Applications/Screaming Frog SEO Spider.app" --args /tmp/crawl.seospider

/Applications/Screaming\ Frog\ SEO\ Spider.app/Contents/MacOS/ScreamingFrogSEOSpiderLauncher /tmp/crawl.seospider

To load a saved crawl file and export the ‘Internal’ tab to the desktop:

open "/Applications/Screaming Frog SEO Spider.app" --args --headless --load-crawl "/Users/Your Name/Desktop/crawl.dbseospider" --output-folder "/Users/Your Name/Desktop" --export-tabs "Internal:All"

/Applications/Screaming\ Frog\ SEO\ Spider.app/Contents/MacOS/ScreamingFrogSEOSpiderLauncher --headless --load-crawl "/Users/Your Name/Desktop/crawl.dbseospider" --output-folder "/Users/Your Name/Desktop" --export-tabs "Internal:All"

To start the UI and immediately start crawling:

open "/Applications/Screaming Frog SEO Spider.app" --args --crawl https://www.example.com/

/Applications/Screaming\ Frog\ SEO\ Spider.app/Contents/MacOS/ScreamingFrogSEOSpiderLauncher --crawl https://www.example.com/

To start headless, immediately start crawling and save the crawl along with Internal->All and Response Codes->Client Error (4xx) filters:

open "/Applications/Screaming Frog SEO Spider.app" --args --crawl https://www.example.com --headless --save-crawl --output-folder /tmp/cli --export-tabs "Internal:All,Response Codes:Client Error (4xx)"

/Applications/Screaming\ Frog\ SEO\ Spider.app/Contents/MacOS/ScreamingFrogSEOSpiderLauncher --crawl https://www.example.com --headless --save-crawl --output-folder /tmp/cli --export-tabs "Internal:All,Response Codes:Client Error (4xx)"
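Both forms take the same SEO Spider arguments; only the prefix differs. The Python sketch below (hypothetical helper names, standard library only) builds either form from one argument list. Given the behaviour described above, a script that must wait for a headless crawl to finish should use the launcher form, since the open command returns immediately.

```python
APP = "/Applications/Screaming Frog SEO Spider.app"
LAUNCHER = APP + "/Contents/MacOS/ScreamingFrogSEOSpiderLauncher"


def open_command(spider_args):
    """`open` form: returns at once, so the caller cannot wait on the crawl."""
    # Everything after --args is handed to the SEO Spider itself.
    return ["open", APP, "--args", *spider_args]


def launcher_command(spider_args):
    """Launcher form: stays attached to the terminal until the SEO Spider exits."""
    return [LAUNCHER, *spider_args]
```

Passing launcher_command(...) to subprocess.run blocks until the SEO Spider exits. As a list element, the app path needs no backslash-escaped spaces.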

See the Command Line Options section below for the full list of arguments you can supply to the SEO Spider.

Linux

The screamingfrogseospider binary is placed in your PATH during installation. To run it, open a terminal and follow the examples below.

To start normally:

screamingfrogseospider

To open a saved crawl file:

screamingfrogseospider /tmp/crawl.seospider

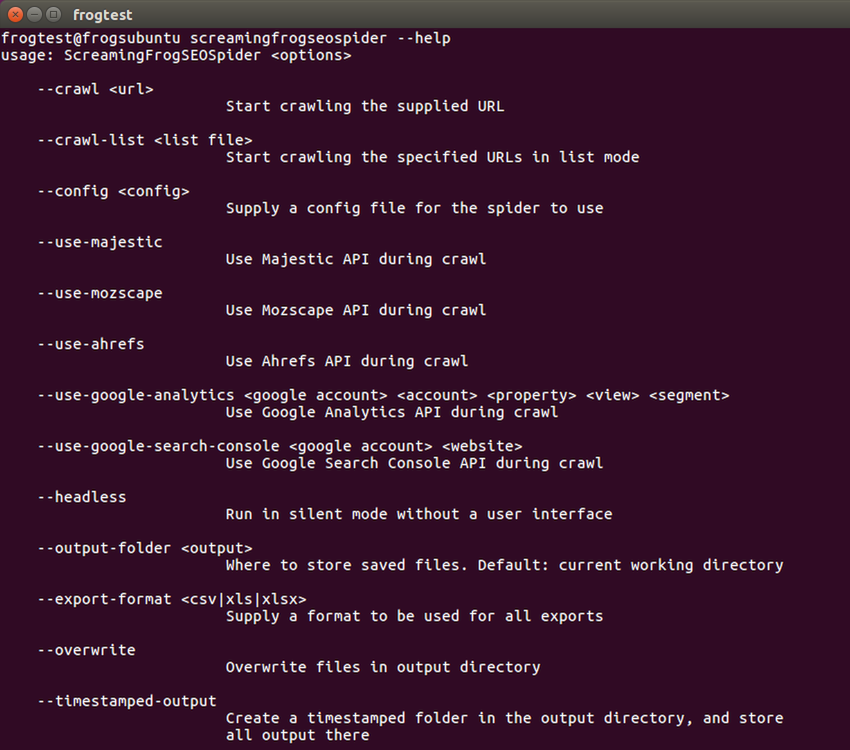

To see a full list of the command line options available:

screamingfrogseospider --help

To start the UI and immediately start crawling:

screamingfrogseospider --crawl https://www.example.com/

To start headless, immediately start crawling and save the crawl along with Internal->All and Response Codes->Client Error (4xx) filters:

screamingfrogseospider --crawl https://www.example.com --headless --save-crawl --output-folder /tmp/cli --export-tabs "Internal:All,Response Codes:Client Error (4xx)"

To load a saved crawl file and export the ‘Internal’ tab to the desktop:

screamingfrogseospider --headless --load-crawl ~/Desktop/crawl.seospider --output-folder ~/Desktop/ --export-tabs "Internal:All"

To use JavaScript rendering when running headless on Linux, a display is required. You can use a virtual framebuffer, such as Xvfb, to simulate an attached display.
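One common way to provide a virtual framebuffer is the xvfb-run wrapper script that many Linux distributions ship with Xvfb. The Python sketch below is an assumption-laden example rather than a documented setup: it assumes xvfb-run is installed, and since JavaScript rendering is not a command line option, it assumes rendering is enabled in a saved configuration file passed with --config (the path /tmp/js-rendering.seospiderconfig is hypothetical). linux_command is a hypothetical helper name.

```python
def linux_command(spider_args, virtual_display=False):
    """Build a Linux command, optionally wrapped in xvfb-run for JavaScript rendering."""
    cmd = ["screamingfrogseospider", *spider_args]
    if virtual_display:
        # xvfb-run starts a temporary virtual X server for the command's lifetime;
        # -a (--auto-servernum) picks a free display number.
        cmd = ["xvfb-run", "-a", *cmd]
    return cmd
```

The wrapped list corresponds to typing xvfb-run -a screamingfrogseospider followed by the usual arguments in a terminal.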

Please see the full list of command line options below.

Command Line Options

For the full list of command line options you can supply as arguments to the SEO Spider, use --help, or see the list below.

Supply ‘--help export-tabs’, ‘--help bulk-export’, ‘--help save-report’, or ‘--help export-custom-summary’ to see a full list of arguments available for each export type.

Start crawling the supplied URL.

--crawl https://www.example.com

Start crawling the specified URLs in list mode.

--crawl-list "list file"

Load a saved .seospider or .dbseospider crawl. Example: --load-crawl "C:\Users\Your Name\Desktop\crawl.dbseospider".

--load-crawl "crawl file"

Load a database crawl. The crawl ID is available in ‘File > Crawls’ by right-clicking a crawl and choosing ‘Copy Database ID’. Example: --load-crawl "65a6a6a5-f1b5-4c8e-99ab-00b906e306c7".

--load-crawl "database crawl ID"

Alternatively, it is possible to view database crawl IDs in the CLI.

--list-crawls
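Because --load-crawl accepts either a file path or a database crawl ID, a script that takes either can tell them apart before building the command. The example ID above has the shape of a UUID; this Python sketch assumes all database crawl IDs do, which is an inference from that one example rather than documented behaviour. load_crawl_kind is a hypothetical name.

```python
import uuid


def load_crawl_kind(value):
    """Return 'database' if value parses as a UUID, otherwise 'file'."""
    try:
        # Assumption: database crawl IDs are UUIDs, like the example above.
        uuid.UUID(value)
    except ValueError:
        return "file"
    return "database"
```

A value classified as "file" still needs to be an absolute path to a .seospider or .dbseospider file.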

Supply a saved configuration file for the SEO Spider to use. Example: --config "C:\Users\Your Name\Desktop\supercool-config.seospiderconfig".

--config "config"

Supply a saved authentication configuration file for the SEO Spider to use. Example: --auth-config "C:\Users\Your Name\Desktop\supercool-auth-config.seospiderauthconfig".

--auth-config "authconfig"

Run in silent mode without a user interface.

--headless

Save the completed crawl.

--save-crawl

Folder in which to store saved files. Default: the current working directory. Example: --output-folder "C:\Users\Your Name\Desktop".

--output-folder "output"

Overwrite existing files in the output directory.

--overwrite

Set the project name to be used for storing the crawl in database storage mode and for the folder name in Google Drive. Exports will be stored in a ‘Screaming Frog SEO Spider’ Google Drive directory by default, but using this argument will mean they are stored within ‘Screaming Frog SEO Spider > Project Name’. Example: --project-name "Awesome Project".

--project-name "Name"

Set the task name to be used for the crawl name in database storage mode. This is also used as a folder name in Google Drive, as a child of the project folder, for example ‘Screaming Frog SEO Spider > Project Name > Task Name’ when a project name is also supplied. Example: --task-name "Awesome Task".

--task-name "Name"

Create a timestamped folder in the output directory, and store all output there. This will also create a timestamped directory in Google Drive.

--timestamped-output

Supply a comma separated list of tabs and filters to export. Specify the tab and the filter name separated by a colon. Tab and filter names are as they appear in the user interface, except for filters whose thresholds are configurable via Configuration->Spider->Preferences, where X is used in place of the number, e.g. Meta Description:Over X Characters. Example: --export-tabs "Internal:All".

--export-tabs "tab:filter,..."

Supply a comma separated list of bulk exports to perform. The export names are the same as in the Bulk Export menu in the UI. To access exports in a submenu, use ‘Submenu Name:Export Name’. Example: --bulk-export "Response Codes:Internal & External:Client Error (4xx) Inlinks".

--bulk-export "submenu:export,..."

Supply a comma separated list of reports to save. The report names are the same as in the Reports menu in the UI. To access reports in a submenu, use ‘Submenu Name:Report Name’. Example: --save-report "Redirects:All Redirects".

--save-report "submenu:report,..."
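--export-tabs, --bulk-export and --save-report share one format: names joined with colons to reach a filter or submenu item, and items joined with commas. Below is a small Python sketch that builds these strings from tuples. join_items is a hypothetical helper, and because the text above doesn’t say how a comma or colon inside a name would be escaped, it rejects such names instead of guessing.

```python
def join_items(items):
    """Join (menu, ..., item) tuples into the 'a:b,c:d' format used by the export options."""
    parts = []
    for path in items:
        for name in path:
            # ',' separates items and ':' separates levels, so neither may appear in a name.
            if "," in name or ":" in name:
                raise ValueError(f"separator character in name: {name!r}")
        parts.append(":".join(path))
    return ",".join(parts)
```

For example, ["--export-tabs", join_items([("Internal", "All"), ("Response Codes", "Client Error (4xx)")]), "--save-report", join_items([("Redirects", "All Redirects")])] yields the same values as the examples above.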

Supply a comma separated list of items for the ‘Export For Data Studio’ Custom Crawl Overview report, used for automated Data Studio crawl reports.

The configurable report items for the custom crawl overview can be viewed in the CLI using ‘--help export-custom-summary’, and match the names shown in the scheduling UI when it is set to English. This option also requires your Google Drive account, supplied with --google-drive-account. Example: --export-custom-summary "Site Crawled,Date,Time" --google-drive-account "yourname@example.com".

--export-custom-summary "item 1,item 2,item 3..."

Creates a sitemap from the completed crawl.

--create-sitemap

Creates an images sitemap from the completed crawl.

--create-images-sitemap

Supply a single format to be used for all exports. For Google Sheets (gsheet), you’ll also need to supply your Google Drive account with --google-drive-account. Example: --export-format csv.

--export-format csv|xls|xlsx|gsheet

Use a Google Drive account for Google Sheets exporting. Example: --google-drive-account "yourname@example.com".

--google-drive-account "google account"
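Two options above depend on --google-drive-account: the gsheet export format and --export-custom-summary. The following Python sketch builds the export arguments and fails early when the account is missing. export_args is a hypothetical helper, and it checks only the rules stated in this list.

```python
EXPORT_FORMATS = ("csv", "xls", "xlsx", "gsheet")


def export_args(export_format="csv", custom_summary=None, google_account=None):
    """Build export arguments, enforcing the Google Drive account requirements."""
    if export_format not in EXPORT_FORMATS:
        raise ValueError(f"unsupported export format: {export_format!r}")
    needs_drive = export_format == "gsheet" or bool(custom_summary)
    if needs_drive and not google_account:
        raise ValueError("gsheet exports and --export-custom-summary need --google-drive-account")
    args = ["--export-format", export_format]
    if custom_summary:
        # Summary items are passed as one comma separated argument.
        args += ["--export-custom-summary", ",".join(custom_summary)]
    if google_account:
        args += ["--google-drive-account", google_account]
    return args
```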

Use the Google Universal Analytics API during crawl. Please remember the quotation marks as shown below. Example: --use-google-analytics "yourname@example.com" "Screaming Frog" "https://www.screamingfrog.co.uk/" "All Web Site Data" "Organic Traffic".

--use-google-analytics "google account" "account" "property" "view" "segment"

Use the Google Analytics 4 API during crawl. Please remember the quotation marks as shown below. Example: --use-google-analytics-4 "yourname@example.com" "Screaming Frog" "Screaming Frog - GA4" "All Data Streams".

--use-google-analytics-4 "google account" "account" "property" "data stream"

Use the Google Search Console API during crawl. Example: --use-google-search-console "yourname@example.com" "https://www.screamingfrog.co.uk/".

--use-google-search-console "google account" "website"
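The analytics options take several values after the flag, and each value must reach the SEO Spider as a single argument, which is why the quotation marks matter. On macOS and Linux, Python’s shlex.join produces a correctly quoted command line from a list, and shlex.split reverses it. The helper names below are hypothetical, and the values mirror the examples in this list.

```python
import shlex


def ga4_args(google_account, account, prop, data_stream):
    """Four values follow --use-google-analytics-4; each stays one argument."""
    return ["--use-google-analytics-4", google_account, account, prop, data_stream]


def gsc_args(google_account, website):
    """Two values follow --use-google-search-console."""
    return ["--use-google-search-console", google_account, website]


def shell_line(argv):
    """Quote argv for a POSIX shell (macOS, Linux); not suitable for Windows cmd."""
    return shlex.join(argv)
```

shlex.join leaves values such as yourname@example.com unquoted and wraps values containing spaces, such as Screaming Frog, in single quotes.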

Use the PageSpeed Insights API during crawl.

--use-pagespeed

Use the Majestic API during crawl.

--use-majestic

Use the Mozscape API during crawl.

--use-mozscape

Use the Ahrefs API during crawl.

--use-ahrefs

View this list of options.

--help

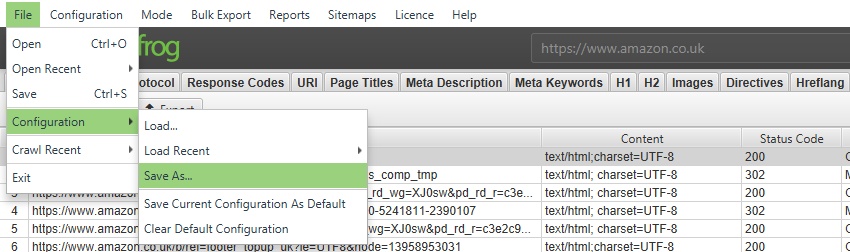

Use Saved Configurations

If a feature or configuration option isn’t available as a specific command line option above (such as the exclude configuration or JavaScript rendering), use the user interface to set the exact configuration you want, then save it as a configuration file.

You can then supply the saved configuration file when using the CLI to utilise those features.
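For example, a scheduled headless crawl that relies on settings saved from the UI passes the file with --config, and optionally an authentication configuration with --auth-config. The Python sketch below only assembles the arguments. config_crawl_args and the file paths are hypothetical, and the suffix check reflects the example file name used for --config in the options list above.

```python
from pathlib import Path


def config_crawl_args(url, config_file, output_folder, auth_config=None):
    """Headless crawl using a saved configuration (e.g. for JavaScript rendering)."""
    config = Path(config_file)
    if config.suffix != ".seospiderconfig":
        raise ValueError(f"expected a .seospiderconfig file, got {config_file!r}")
    args = [
        "--crawl", url,
        "--headless",
        "--config", str(config),
        "--output-folder", str(output_folder),
        # A fresh timestamped folder per run avoids exporting into a non-empty folder.
        "--timestamped-output",
        "--save-crawl",
    ]
    if auth_config:
        args += ["--auth-config", str(auth_config)]
    return args
```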

Troubleshooting

- If a headless crawl fails to export any results, make sure the --output-folder directory exists and is either empty, or that you are using the --timestamped-output option.

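- When quoting an argument, don’t end it with a backslash (\) before the closing quote, as the quote will be escaped. For example, use "C:\Users\Your Name\Desktop" rather than "C:\Users\Your Name\Desktop\".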
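Both checks can run before a scripted headless crawl. Below is a minimal Python sketch with hypothetical helper names. When arguments are passed to subprocess as a list, Python builds the Windows command line itself via subprocess.list2cmdline (an undocumented helper), which doubles a trailing backslash so the closing quote is not escaped. strip_trailing_backslash is only needed when you write command strings or batch files by hand.

```python
import subprocess
from pathlib import Path


def check_output_folder(folder, timestamped=False):
    """Raise unless folder exists and is empty, or timestamped output is used."""
    path = Path(folder)
    if not path.is_dir():
        raise FileNotFoundError(f"--output-folder does not exist: {folder}")
    if not timestamped and any(path.iterdir()):
        raise ValueError("--output-folder is not empty; empty it or use --timestamped-output")
    return path


def strip_trailing_backslash(arg):
    """Drop trailing backslashes that would escape a closing quote."""
    stripped = arg.rstrip("\\")
    # "C:" alone means the current folder on drive C, so keep a bare C:\ as-is.
    return arg if stripped.endswith(":") else stripped


def windows_command_line(argv):
    """Show how subprocess would quote argv on Windows."""
    return subprocess.list2cmdline(argv)
```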