How To Audit Backlinks In The SEO Spider

Dan Sharp

Posted 7 September, 2016 by Dan Sharp in Screaming Frog SEO Spider

There are plenty of reasons you may wish to audit backlinks to a website: to check the links are still live and passing link value, to confirm they’ve been removed or nofollowed after a link clean-up, or to gather more data on the links than Google Search Console provides.

Back in 2011, we wrote a guide on using the custom search feature to audit backlinks at scale, by simply searching for the presence of the link within the HTML of each linking page.

Since then, we’ve released our custom extraction feature, which refines this process and provides more detail. Rather than just checking for the link, you can now collect all links and anchor text, and analyse whether they are passing value or have been blocked by robots.txt, or nofollowed via a link attribute, meta tag or X-Robots-Tag header.

Please note, the custom extraction feature outlined below is only available to licensed users. The steps to auditing links using custom extraction are as follows.

1) Configure XPath Custom Extraction

Open up the SEO Spider. In the top level menu, click on ‘Configuration > Custom > Extraction’ and input the XPath below, replacing ‘screamingfrog.co.uk’ with the domain you’re auditing.

The XPath in text form for ease of copying and pasting into the custom extractors –

//a[contains(@href, 'screamingfrog.co.uk')]/@href

//a[contains(@href, 'screamingfrog.co.uk')]

//a[contains(@href, 'screamingfrog.co.uk')]/@rel

Together, these three expressions collect the URL, anchor text and rel attribute of every link to screamingfrog.co.uk found on the backlinks being audited. So if a backlink contains multiple links to the domain you’re auditing, the data for all of them will be collected.
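For readers who want to see what the three expressions gather, here is a minimal Python sketch using only the standard library. It is not how the SEO Spider works internally; the class and function names (`LinkCollector`, `collect_links`) are hypothetical, and the substring test simply mirrors `contains(@href, '...')`.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect href, anchor text and rel for <a> tags whose href contains a domain."""

    def __init__(self, domain):
        super().__init__()
        self.domain = domain
        self.links = []       # finished links, in document order
        self._current = None  # the matching link currently being read, if any

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href") or ""
        # Same test as contains(@href, 'domain') in the XPath above.
        if self.domain in href:
            self._current = {
                "href": href,
                "anchor": "",
                "rel": attr_map.get("rel") or "",
            }

    def handle_data(self, data):
        # Text inside a matching <a> becomes part of its anchor text.
        if self._current is not None:
            self._current["anchor"] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            # Collapse runs of whitespace in the anchor text.
            self._current["anchor"] = " ".join(self._current["anchor"].split())
            self.links.append(self._current)
            self._current = None


def collect_links(html, domain):
    """Return one dict per matching link found in the HTML string."""
    parser = LinkCollector(domain)
    parser.feed(html)
    parser.close()
    return parser.links
```

Calling `collect_links(page_html, "screamingfrog.co.uk")` returns one dictionary per matching link, which corresponds to the numbered link, anchor and attribute columns described later in this guide.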

2) Switch To List Mode

Next, change the mode to ‘list’, by clicking on ‘Mode > List’ from the top level menu.

This will allow you to upload your backlinks into the SEO Spider, but don’t do this just yet!

3) View URLs Blocked By Robots.txt

When switching to list mode, the SEO Spider assumes you want to crawl every URL in the list and automatically applies the ‘ignore robots.txt’ configuration. However, in this scenario, you may find it useful to know if a URL is blocked by robots.txt when auditing backlinks. If so, navigate to ‘Configuration > Spider > Basic tab’, un-tick ‘Ignore robots.txt’ and tick ‘Show Internal URLs Blocked by robots.txt’. In more recent versions, the ‘Ignore robots.txt’ option has moved to ‘Config > Robots.txt > Settings’.

You’ll then be able to view exactly which URLs are blocked by robots.txt in the crawl.

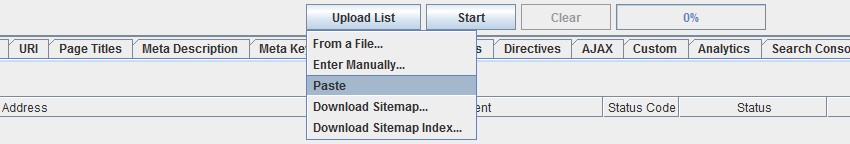
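To illustrate what ‘blocked by robots.txt’ means for a single backlink URL, here is a small sketch using Python’s standard `urllib.robotparser`. The function name `is_blocked` and the default `*` user-agent are assumptions made for the example; the SEO Spider applies its own user-agent and matching rules, so results may differ in edge cases.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text disallows the URL for the user-agent."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /private/\n"
    for candidate in (
        "https://example.com/private/page.html",
        "https://example.com/blog/post.html",
    ):
        print(candidate, "blocked" if is_blocked(robots, candidate) else "allowed")
```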

4) Upload Your Backlinks

When you have gathered the list of backlinks you wish to audit, upload them by clicking on the ‘Upload List’ button and choosing your preference for uploading the URLs. The ‘Paste’ functionality makes this super quick.

Please note, you must upload the absolute URL including protocol (http:// or https://) in list mode.

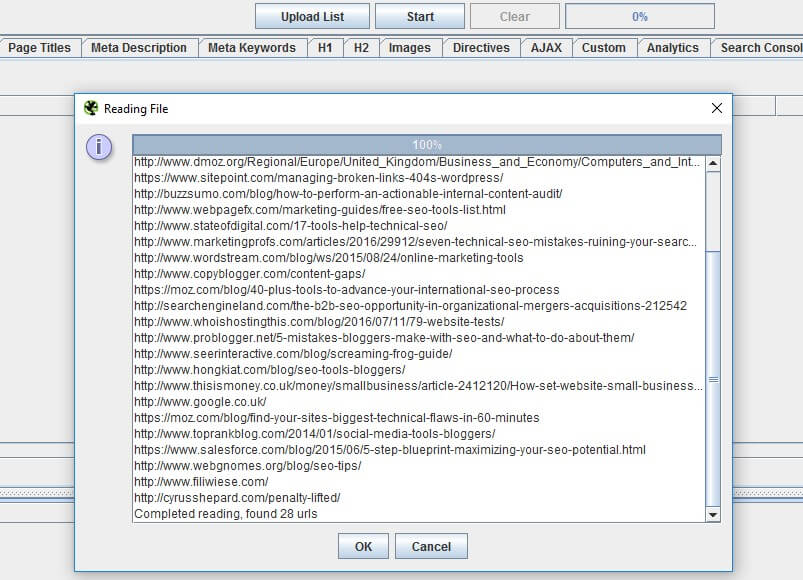
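If your backlink list comes from several sources, it can help to check it before uploading. The sketch below, with a hypothetical helper name `split_uploadable`, separates URLs that are absolute and include the protocol from those that would need fixing first. It is only a pre-check under that assumption, not part of the SEO Spider.

```python
from urllib.parse import urlsplit


def split_uploadable(urls):
    """Split URLs into (ok, rejected) based on having an http(s) scheme and a host."""
    ok, rejected = [], []
    for raw in urls:
        url = raw.strip()
        if not url:
            continue  # skip blank lines from a pasted list
        parts = urlsplit(url)
        if parts.scheme in ("http", "https") and parts.netloc:
            ok.append(url)
        else:
            rejected.append(url)
    return ok, rejected


if __name__ == "__main__":
    good, bad = split_uploadable([
        "https://example.com/page",
        "example.com/page",       # missing protocol
        "//example.net/x",        # protocol-relative
    ])
    print("upload:", good)
    print("fix first:", bad)
```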

5) Start The Crawl

When you’ve uploaded the URLs, the SEO Spider will show a reading file dialog box and confirm the number of URLs found. Next click ‘OK’, and the crawl will start immediately.

You’ll then start to see the backlinks being crawled in real-time in the ‘Internal’ tab.

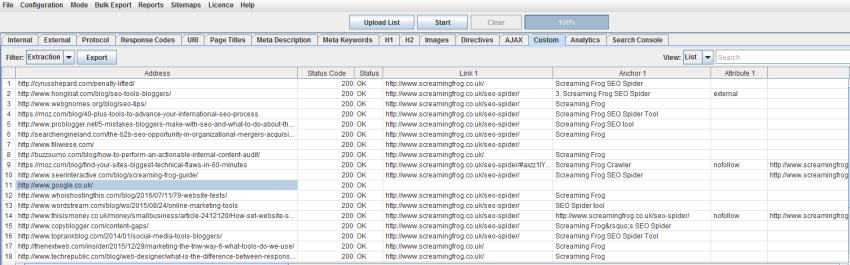

6) Review The Extracted Data

View the ‘Custom’ tab and ‘Extraction’ filter to see the data extracted from the backlinks audit.

You can drag and drop the columns to your preference. If there are multiple links to the domain you’ve audited from some external sites, then you will see multiple columns for link, anchor and attribute which are numbered, so they can be matched up.

This view shows whether the URLs exist, their status (including whether they are blocked by robots.txt), the link, anchor and the link attribute. It doesn’t show whether the URL has a ‘nofollow’ from a meta tag or HTTP Header, which can be seen under the ‘Internal’ tab, where all the custom extraction data is automatically appended for ease as well.
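As a rough illustration of the page-level signals mentioned above, the following sketch looks for ‘nofollow’ in a meta robots tag and in an X-Robots-Tag HTTP header. The names `MetaRobotsParser` and `page_level_nofollow` are hypothetical, and the sketch makes simplifying assumptions: it ignores bot-specific meta tags (such as name="googlebot") and user-agent prefixes in the header, and it treats the ‘none’ directive as implying nofollow.

```python
from html.parser import HTMLParser

# Directives that mean links on the page should not be followed.
NOFOLLOW_DIRECTIVES = {"nofollow", "none"}


def _split_directives(value):
    """Turn 'noindex, nofollow' into {'noindex', 'nofollow'}."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


class MetaRobotsParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() == "robots":
            self.directives |= _split_directives(attr_map.get("content") or "")


def page_level_nofollow(html, headers):
    """Report whether a nofollow comes from the meta tag and/or the HTTP header."""
    parser = MetaRobotsParser()
    parser.feed(html)
    parser.close()

    header_directives = set()
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            header_directives |= _split_directives(value)

    return {
        "meta_nofollow": bool(parser.directives & NOFOLLOW_DIRECTIVES),
        "header_nofollow": bool(header_directives & NOFOLLOW_DIRECTIVES),
    }
```

Here `html` is the page source as a string and `headers` is a plain dictionary of response headers, so the function can be tried on saved pages without making any requests.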

The SEO Spider wasn’t built with this purpose in mind, so it’s not a perfect solution. However, it gets used in so many different ways, and this should make the process of checking backlinks a little less tedious.

Absolutely love this. While there may be other tools you’d deem “less tedious” this is precisely the method we were looking for with one notable API integration I think has made it to the product roadmap. We’ll see :) In any event, while there are other methods out there which will poll for the presence of a link, etc. The raw power the custom extractor represents and the utility of it dwarfs the other methods IMHO. Keep on keeping on guys. You’re doing fabulously well!

Hey Gerald,

Thanks for the kind comments and very cool to hear you’re enjoying the power of the extractors :-)

Cheers.

Dan

I’m attempting to follow these steps but I am never seeing any results from step 1. There is nothing in my Custom tab, extraction filter. Any assistance is appreciated.

Hey April,

Pop through to us at support with some screenshots of what you have done, and we can help –

https://www.screamingfrog.co.uk/seo-spider/support/

Cheers.

Dan

Very nice, a significant improvement in this update.

Well,

This is a really good functionality of the tool. Thanks for the write up.

I have a question, or rather, a request for updated versions of SFSS.

When you crawl a website and click an URL you get the different tabs with information. Like inlinks and outlinks. Can those rows have an additional column in front, with the row number? This makes it easy to see the number of in- and outlinks :)

Best,

Dennis

Hey Dennis,

Apologies for the delayed reply. I see where you’re coming from, thanks for the suggestion!

Cheers.

Dan

Hi Dan,

Thanks for replying.

If this suggestion is possible to build in, great. Otherwise, still love the tool :)

Cheers

Hello,

Excellent article indeed. From next time I will use this tool for auditing backlinks. Thanks so much sharing this outstanding article.

Thanks,

Moumita Ghosh

Thanks for sharing this information. It’s really helpful for people like me :)

Screaming frog at its best.. This feature has now impressed me. Would surely try out your premium version now..

That’s an awesome feature and a really well written and detailed guide to boot. Well done guys, colour me impressed!

Thanks Dan these days we need to make sure our backlink profile is as good as it can be.

Although I found the guide really useful, I think that steps two and three should be consolidated in a single step. In my opinion, the way the guide is currently structured, makes the whole process seem a bit more complicated than it is and that may put some users off.

Hi, I followed these steps and everything is fine, but when I check with other tools I can find the nofollow links, whereas Screaming Frog doesn’t display any nofollow links… Is there any solution for that?

hi.

1. How to get all internal links from list of pages.

Example:

10.000 categories

Every have 1000 subcats

How to get all subcats? Program can’t parse this number of pages.

Good idea is get all links from this 10K pages.

Custom extraction not work:Error, too big size of cell, over 32K.

2. How to get a list of uncrawled links (remaining links)? Please can you add an export of this data?

Hi Pavel,

Thanks for the comment.

1) You don’t need to use custom extraction to get data for internal links, it will do that by default anyway. Have a look at some of the ‘bulk export’ options, like all inlinks / outlinks etc. Any specific queries, you can pop through to support here – https://www.screamingfrog.co.uk/seo-spider/support/

2) That’s a good idea, and one we are considering introducing in an update. Thanks for the feedback.

Cheers.

Dan

Is there any trial version available?

If yes, kindly share me the details.

Hi Riddhi,

There’s a limited free version available which will crawl up to 500 URLs, and you can download here – https://www.screamingfrog.co.uk/seo-spider/

Cheers.

Dan

Excellent, didn’t realise there was a free version! Thanks

This is such a great feature. Screaming Frog makes it so easy for me to analyze my site, my clients sites, and competitors sites. I’ll keep my eyes open for any and all updates!

Java crashes when crawling 2 million+ URLs. Tested on an extremely fast server PC. :(

Looks like it needs some optimization in the algorithm. Or maybe a change of engine.

It’s abnormal when the text info of a few million pages cannot be saved on terabyte disks. :) Please help.

Hi Ober,

This isn’t a surprise, the SEO Spider is optimal for crawling sub 500k URLs really.

Hence my advice is to upload the URLs in batches!

Cheers.

Dan

This is actually a great tool, especially for people who have pretty old domains and some of their backlinks have been removed or are no follow. In general it’s useful to have it for regular backlinks check and monetization, The tool is user friendly and the guide is well written and pretty explanatory. Good job.

Just love how easy it is to use the spider to check for links. I used to get things like that all the time, you start to lose your position in Google, and soon enough when you check the links you find that hundreds of links have been deleted.

Thanks for the article :)

A day without using ScreamingFrog is a day lost! Thank you for guidance on using extractions, awesome feature.

Thanks a lot for these instructions. I just downloaded Screaming Frog and this article helped me to do my first backlink analysis.

Awesome tutorial! Very helpful. I was wondering, does the tool have free trial version? Would love to try it out.

Thanks!. Awesome tutorial! Very helpful.

Very interesting article. I find it hard to manually check backlinks. Tried Seo Spider and it seems very useful. Cheers.

Thanks for the awesome tool so I can audit my backlink profile, I have 6 different files to look through and your audit tool will help save on time, and it’s free, yes i love free, keep up the good work.

Screaming frog is a very helpful tool.

As a SEO I use this software almost 80% of my time when I need to check URLs.

One of the best options.

Hi,

Is it possible to somehow evaluate the quality of the backlinks through Screamingfrog?

In other words, is it possible to integrate with Majestic or Moz?

Regards,

Pat

Hi Patrizio,

You can connect to the Majestic, Moz or Ahrefs APIs and pull in link data against URLs in a crawl.

More info here –

https://www.screamingfrog.co.uk/seo-spider/user-guide/configuration/#majestic

https://www.screamingfrog.co.uk/seo-spider/user-guide/configuration/#ahrefs

https://www.screamingfrog.co.uk/seo-spider/user-guide/configuration/#moz

Cheers.

Dan

Cool! Thanks :-)

Wow am I late to this party (years late in fact)!

I love this post and it really got me thinking, is there anything that can be done to make the XPath a bit more accurate?

The problem with XPath like:

//a[contains(@href, 'screamingfrog.co.uk')]/@href

… is that it would match to backlinks like this one:

[http/https]://mycoolalexascraper/screamingfrog.co.uk/results

You know those spammy scraper sites that just link to everyone!? And they have your domain within their own URL structure, as they list your ‘results’ for your website. As such, using ‘contains’ is a bit dangerous

Really the “screamingfrog.co.uk” part has to be very near the beginning of the href attribute value

You could do something like this:

//a[starts-with(@href, 'https://screamingfrog.co.uk')]/@href | //a[starts-with(@href, 'http://screamingfrog.co.uk')]/@href | //a[starts-with(@href, 'https://www.screamingfrog.co.uk')]/@href | //a[starts-with(@href, 'http://www.screamingfrog.co.uk')]/@href

That covers the four main possible URL permutations. Your link can start with HTTP/domain, HTTPS/domain, HTTP/WWW/domain or HTTPS/WWW/domain. Just because you have one canonical structure, that doesn’t mean that spam-hat webmasters will use it when they link to you…

The pipes make or statements (sort of, I think it’s kind of a node separator) which allow one single line of XPath to match multiple patterns which is quite handy, but I’m also pretty sure it could be written in a much more elegant way

What do you think?
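Outside of XPath, one way to get the same stricter matching is to compare the hostname of each extracted href rather than the raw string. This is only a sketch for post-processing exported data, with a hypothetical function name `links_to_domain`. Note that, unlike the four permutations above, it also accepts any other subdomain of the target domain.

```python
from urllib.parse import urlsplit


def links_to_domain(href, domain):
    """Return True if the href's hostname is the domain itself or one of its subdomains."""
    host = (urlsplit(href).hostname or "").lower()
    domain = domain.lower()
    # The leading dot stops 'notscreamingfrog.co.uk' matching 'screamingfrog.co.uk'.
    return host == domain or host.endswith("." + domain)


if __name__ == "__main__":
    for href in (
        "https://www.screamingfrog.co.uk/seo-spider/",
        "https://mycoolalexascraper.example/screamingfrog.co.uk/results",
    ):
        print(href, links_to_domain(href, "screamingfrog.co.uk"))
```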

Is this backlinks feature available only in the paid version? Can’t I get a sample backlinks report in the free version before I purchase?

The SEO Spider doesn’t crawl the whole web to provide backlink data.

You’ll need to use a backlink analyser to have your list of backlinks (Ahrefs, Moz Link Explorer, Majestic).

Thanks,

Dan

I have used many tools so far, nobody has the features like screaming frog that too in a single license/ No monthly fee. But if you add the backlink audit tool such moz, ahref, you will be the king of all SEO tools.

Screamingfrog has saved my ass.

This does not work if you are checking links that go to a page with a permanent 301 redirect. So if you have changed your URL structure, it shows the link as being missing.

Indispensable tool for our recent site move. Tried several others. None as flexible especially these features. Gets better with every version. Love it!!

Hey SF, the ‘Ignore robots.txt’ option is no longer in Config > Spider > Basic. In fact, there is no Basic tab, so what is the alternative in the latest version?

Hi Allison,

It’s under ‘Config > Robots.txt > Settings’ now :-)

Cheers,

Dan