Announcing The Screaming Frog Log File Analyser

Dan Sharp

Posted 11 April, 2016 by Dan Sharp in Screaming Frog Log File Analyser


I am delighted to announce the release of the Screaming Frog Log File Analyser 1.0, codenamed ‘Pickled Onions’.

Since releasing the SEO Spider, we have planned to build a Log File Analyser, and we finally started development last year. While crawling is a wonderful thing, analysing your log files directly from the server allows you to see exactly what the search engines have experienced over a period of time.

The Screaming Frog Log File Analyser provides an overview of key data from a log file, and allows you to dig deeper.

I don’t think I need to preach the benefits of analysing log files to most SEOs, but if you’re in any doubt or would like more insight, then I highly recommend the log file analysis guide from the team at BuiltVisible.

Unfortunately, analysing log files has always been a pain, because logs are hard to get access to, come in varied formats and can be huge. SEOs often use grep to collect raw Googlebot log lines and analyse them in a spreadsheet, or use log solutions that were not really built with SEO in mind. There’s open source log monitoring software (such as Elastic’s ELK stack), but getting clients to configure and implement it can be like trying to get blood from a stone.
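For comparison, the grep approach boils down to a substring filter over each line. The Python below is a minimal sketch of that manual workflow, not how the Log File Analyser works internally; `googlebot_lines` and `read_log` are hypothetical names, and matching the text "Googlebot" only checks what the user-agent claims, not whether the request really came from Google.

```python
import gzip
from typing import Iterable, Iterator


def googlebot_lines(lines: Iterable[str], token: str = "Googlebot") -> Iterator[str]:
    """Yield only lines containing the token, roughly `grep Googlebot access.log`."""
    for line in lines:
        if token in line:
            yield line


def read_log(path: str) -> Iterator[str]:
    """Read a plain or gzipped log file line by line as text."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
        yield from handle
```

Usage: `list(googlebot_lines(read_log("access.log.gz")))` gives the lines you would otherwise paste into a spreadsheet.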

The Screaming Frog Log File Analyser has been developed to make the process less painful and to let SEOs quickly gain insight into search bot behaviour, without requiring complicated configuration. It’s also free for importing up to 1,000 log events in a single project. If you’d like to import and analyse more events, you can purchase a licence for just £99 per year.

Drag & Drop Your Log Files

You can simply drag and drop very large log files directly into the interface, and the Log File Analyser will automatically detect the log file format and compile the data into a local database able to store millions of log events. Multiple logs or folders can be dragged in at once; they can be zipped or gzipped and can come from any server, such as Apache, IIS or NGINX.
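The post doesn't describe how format detection works. As a rough illustration of what parsing a single format involves, here is a sketch that reads one line in Apache's Combined Log Format; the regular expression and the `parse_combined` name are assumptions, and IIS (W3C) or customised NGINX formats would each need their own pattern.

```python
import re
from datetime import datetime
from typing import Optional

# Apache "combined" layout (assumed here):
# %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-Agent}i"
COMBINED = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_combined(line: str) -> Optional[dict]:
    """Parse one Combined Log Format line, or return None if it doesn't match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    event = match.groupdict()
    event["time"] = datetime.strptime(event["time"], "%d/%b/%Y:%H:%M:%S %z")
    event["status"] = int(event["status"])
    # Apache writes "-" when no response body was sent.
    event["bytes"] = 0 if event["bytes"] == "-" else int(event["bytes"])
    return event
```

A detector could try several such patterns against the first few lines of a file and keep whichever matches; that strategy is our assumption, not documented behaviour of the tool.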

At the moment, an ‘All Bots’ project compiles data for Googlebot, Bingbot, Yandex and Baidu by default; we plan to make this configurable in a later version. You can switch user-agent to view specific bots only via the dropdown in the top right, next to the date range. You can read more about setting up a new project in our user guide.
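Switching between bots amounts to grouping events by their user-agent string. A minimal sketch, assuming events are dicts with a `user_agent` key and that case-insensitive substring tokens are enough to identify each bot; the token table is illustrative, not the tool's actual configuration.

```python
from typing import List, Optional

# Hypothetical lower-case tokens for the four bots an 'All Bots' project covers.
BOT_TOKENS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "yandex": "Yandex",
    "baiduspider": "Baidu",
}


def bot_family(user_agent: str) -> Optional[str]:
    """Return the bot family a user-agent string claims to be, or None."""
    ua = user_agent.lower()
    for token, family in BOT_TOKENS.items():
        if token in ua:
            return family
    return None


def filter_by_bot(events: List[dict], family: str) -> List[dict]:
    """Keep only events whose user-agent maps to the selected bot family."""
    return [e for e in events if bot_family(e["user_agent"]) == family]
```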

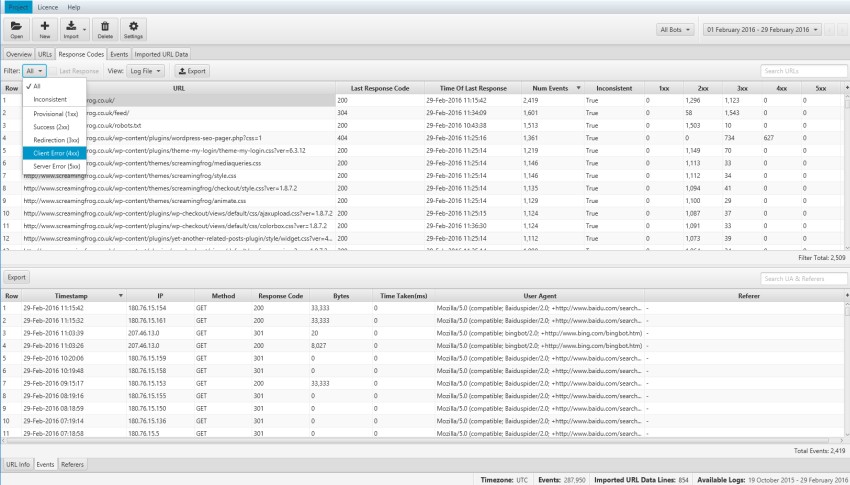

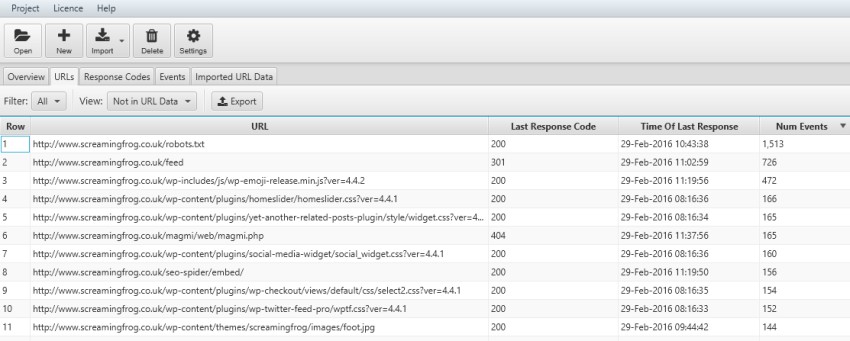

Identify Crawled URLs & Analyse Frequency

You can view exactly which URLs have been crawled by the search engines and analyse your most frequently crawled pages or directories by search bot user-agent.
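Crawl frequency is essentially a count of requests per URL, rolled up by directory. This sketch assumes events are dicts with a `url` key (already filtered to a single bot if you want per-user-agent figures) and treats the first path segment as the "directory", which may not match the tool's own grouping.

```python
from collections import Counter
from typing import Tuple
from urllib.parse import urlsplit


def top_directory(url: str) -> str:
    """First directory of a URL's path: '/blog/post-1' -> '/blog/'.

    Files at the root, and the root itself, are grouped under '/'.
    """
    path = urlsplit(url).path
    segments = [s for s in path.split("/") if s]
    if len(segments) >= 2 or (segments and path.endswith("/")):
        return f"/{segments[0]}/"
    return "/"


def crawl_frequency(events) -> Tuple[Counter, Counter]:
    """Count requests per URL and per top-level directory."""
    by_url = Counter(event["url"] for event in events)
    by_directory = Counter(top_directory(event["url"]) for event in events)
    return by_url, by_directory
```

`by_url.most_common(10)` then lists the ten most frequently crawled URLs.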

The Log File Analyser allows you to analyse, segment, filter, sort, search and export the data as well.

While data is aggregated around attributes to aid analysis, the raw log events from the log file are stored and accessible, too. The ‘events’ tab at the bottom shows all raw events for a particular URL, while the ‘Events’ tab at the top includes the raw log event lines with all attributes. For example, you can browse 10 million log events in a single list, which would break most tools.

Find Client & Server Errors

You can quickly analyse the responses the search engines have experienced over time. The Log File Analyser groups events into response code ‘buckets’ (1XX, 2XX, 3XX, 4XX, 5XX) to help spot inconsistent responses, and also provides filters for response codes. For example, you can choose whether to view URLs that returned a 4XX response in any event over the time period, or only those whose most recent response was a 4XX.
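The bucketing, and the choice between "any event" and "last response", can be sketched as follows. It assumes hypothetical event dicts with `url`, `status` and a sortable `time`; the function names are illustrative only.

```python
from collections import defaultdict
from typing import Dict, List, Set


def bucket(status: int) -> str:
    """Map an HTTP status code to its class, e.g. 404 -> '4XX'."""
    return f"{status // 100}XX"


def response_history(events) -> Dict[str, List[str]]:
    """Per-URL list of response buckets in chronological order."""
    history: Dict[str, List[str]] = defaultdict(list)
    for event in sorted(events, key=lambda e: e["time"]):
        history[event["url"]].append(bucket(event["status"]))
    return history


def urls_with_bucket(history, wanted: str, last_only: bool = False) -> Set[str]:
    """URLs that returned `wanted` in any event, or in their latest event only."""
    if last_only:
        return {url for url, codes in history.items() if codes[-1] == wanted}
    return {url for url, codes in history.items() if wanted in codes}


def inconsistent_urls(history) -> Set[str]:
    """URLs that have been served more than one response class over time."""
    return {url for url, codes in history.items() if len(set(codes)) > 1}
```

A URL that returned a 404 last week but a 200 today shows up under "any event" and as inconsistent, but not under "last response only".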

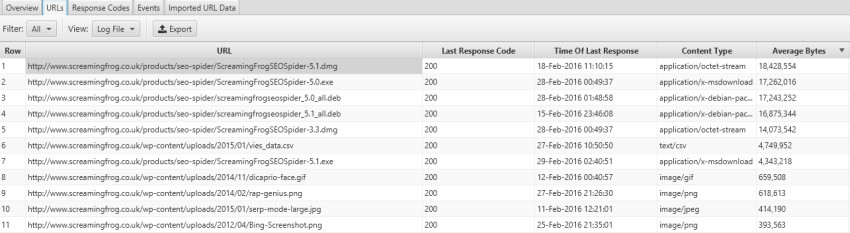

Identify Large & Slow URLs

The Log File Analyser shows you the average bytes downloaded and time taken by search bots for every URL requested, so it’s easy to identify large pages or performance issues. You can simply sort the data in the interface!
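Averages like these are a simple group-by over the events. A sketch assuming each event carries hypothetical `url`, `bytes` and `time_taken_ms` fields; not every log format records time taken, so real data may lack the last one.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def average_size_and_time(events) -> Dict[str, Tuple[float, float]]:
    """Average bytes and time taken per URL, as (avg_bytes, avg_ms)."""
    totals = defaultdict(lambda: [0, 0, 0])  # [bytes, milliseconds, requests]
    for event in events:
        running = totals[event["url"]]
        running[0] += event["bytes"]
        running[1] += event["time_taken_ms"]
        running[2] += 1
    return {url: (b / n, ms / n) for url, (b, ms, n) in totals.items()}


def slowest(averages: Dict[str, Tuple[float, float]], n: int = 10) -> List[str]:
    """URLs sorted by average time taken, slowest first."""
    ranked = sorted(averages.items(), key=lambda item: item[1][1], reverse=True)
    return [url for url, _ in ranked[:n]]
```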

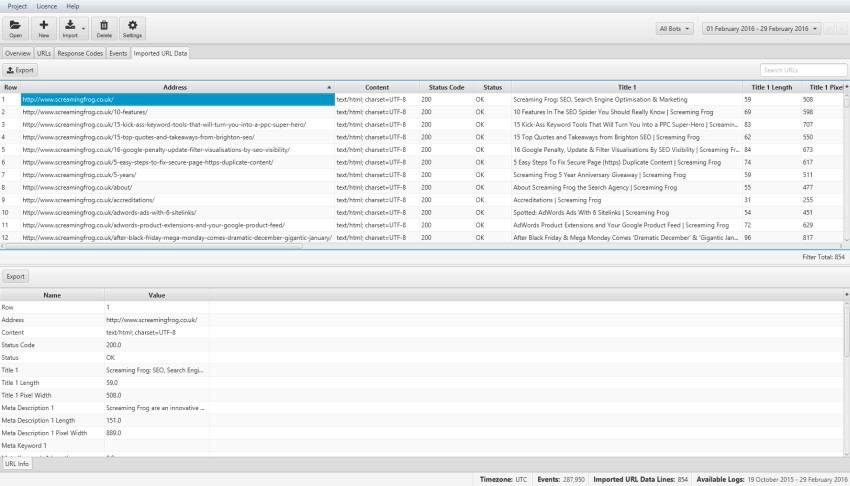

Import A Crawl or Other Data For More Insight

The ‘Imported URL Data’ tab also allows you to import crawl data into the Log File Analyser. For example, you can just drag and drop the ‘internal’ tab export from the Screaming Frog SEO Spider.

You can actually import any data you like into the database, with a ‘URLs’ heading and data next to it in columns. The Log File Analyser will automatically match up the data to URLs. So if you wanted to import multiple files, say a crawl and a ‘top pages’ report from Majestic or OSE, then this tab will work like VLOOKUP and match them up for you.
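The VLOOKUP-style matching can be approximated with a dictionary keyed on URL. This sketch assumes CSV exports that share a URL column (named `URLs` here, following the heading described above) and lets later files overwrite same-named columns; `merge_on_url` is a hypothetical helper, not part of any Screaming Frog API.

```python
import csv
import io
from collections import defaultdict
from typing import Dict


def merge_on_url(*csv_texts: str, key: str = "URLs") -> Dict[str, Dict[str, str]]:
    """Combine several CSV exports into one row per URL, like chained VLOOKUPs."""
    merged: Dict[str, Dict[str, str]] = defaultdict(dict)
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            url = row.pop(key)
            merged[url].update(row)  # later files win on duplicate column names
    return dict(merged)
```

Unlike VLOOKUP, URLs that appear in only one file are kept rather than returning an error.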

You don’t actually need any log files to use this feature and it’s completely unlimited. So if you’re a bit rubbish in Excel and want to match up data with common URLs, simply drag and drop multiple files into this window and then export the combined data.

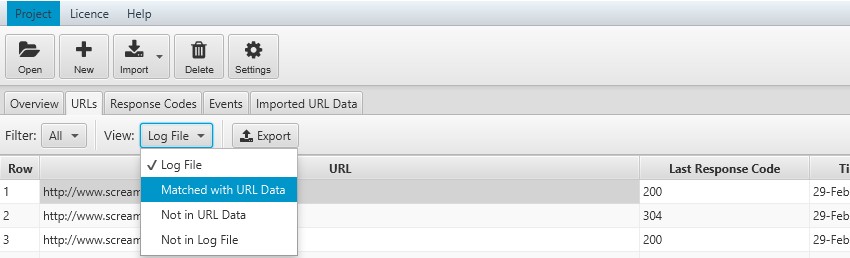

Once you have imported the data, ‘view’ filters then become available on the ‘URLs’ and ‘Response Codes’ tabs, which allow you to see log file data with crawl data for more powerful insight.

The ‘Not In URL Data’ and ‘Not In Log File’ filters help you discover orphan URLs (URLs that are not in a crawl but have been requested by search bots), or URLs that exist in a crawl but haven’t actually been crawled by the search engines.
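Both filters reduce to set differences once log and crawl URLs are in the same form. Log files usually record paths, so this sketch assumes you first join them to a site URL; `absolutise` and `compare_sources` are hypothetical names.

```python
from typing import Iterable, List, Set, Tuple
from urllib.parse import urljoin


def absolutise(paths: Iterable[str], site_url: str) -> Set[str]:
    """Turn logged request paths into absolute URLs for a given site."""
    return {urljoin(site_url, path) for path in paths}


def compare_sources(
    log_urls: Iterable[str], crawl_urls: Iterable[str]
) -> Tuple[List[str], List[str]]:
    """Return (not_in_url_data, not_in_log_file), each sorted."""
    logged, crawled = set(log_urls), set(crawl_urls)
    # Requested by bots but absent from the crawl: candidate orphan URLs.
    not_in_url_data = sorted(logged - crawled)
    # Present in the crawl but never requested by the bots in these logs.
    not_in_log_file = sorted(crawled - logged)
    return not_in_url_data, not_in_log_file
```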

This feature has so much potential, as you could combine any data for more insight, such as analytics, search query, and external link data for example. We plan on including more sophisticated search functions to help analyse within the tool.

Lots More To Come

We’re really excited by the potential of the tool. It’s in its infancy at the moment, but there’s already lots in the development queue, including configurable user-agents, automatic verification of Googlebot and much more.
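The post lists automatic Googlebot verification as planned without saying how it will work. The method Google itself documents is a reverse DNS lookup on the requesting IP, a check that the hostname is under googlebot.com or google.com, and a forward lookup confirming that hostname resolves back to the same IP. A minimal sketch of that check, assuming IPv4 addresses (`socket.gethostbyname_ex` only returns IPv4) and injectable resolvers so it can be tested without network access; `is_verified_googlebot` is a hypothetical name.

```python
import socket
from typing import Callable, List, Sequence, Tuple

Resolver = Callable[[str], Tuple[str, List[str], List[str]]]


def is_verified_googlebot(
    ip: str,
    reverse: Resolver = socket.gethostbyaddr,
    forward: Resolver = socket.gethostbyname_ex,
    domains: Sequence[str] = ("googlebot.com", "google.com"),
) -> bool:
    """Reverse-then-forward DNS check that an IPv4 address belongs to Google."""
    try:
        hostname = reverse(ip)[0].lower()  # e.g. 'crawl-66-249-66-1.googlebot.com'
    except OSError:  # socket.herror and socket.gaierror subclass OSError
        return False
    # The hostname must be one of the domains or a subdomain of one.
    if not any(hostname == d or hostname.endswith("." + d) for d in domains):
        return False
    try:
        # The forward lookup must resolve back to the original IP.
        return ip in forward(hostname)[2]
    except OSError:
        return False
```

The forward step matters: anyone can point a PTR record for their own IP at a googlebot.com name, but they cannot make Google's DNS resolve that name back to their address.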

As it’s a new tool, there will be some bugs, so please get in touch via our support if you have any issues or if there are any logs that won’t upload.

We would also love to hear your feedback and suggestions for the Log File Analyser. We plan on releasing regular updates, in a similar way to our SEO Spider tool. If you’d like to read more about the Log File Analyser, please see our user guide and FAQ, which will be updated over time.

Thanks to everyone in the SEO community for all your support, and in particular our awesome beta testers who provided such amazing feedback. We hope the tool proves useful!

Download & Trial

You can download the Screaming Frog Log File Analyser now and import up to 1k log events for free at a time.

We even provide a demo log file for you to play with if you don’t have one to hand. Enjoy!

Small Update – Version 1.1 Released 13th April 2016

We have just pushed a small update to version 1.1 of the Screaming Frog Log File Analyser. This release mainly includes bug fixes. The full list includes –

- Fixed a bug when importing log files that require a site URL in non-English locales.

- Fixed a bug with auto discovering fields on import.

- Fixed a bug where quitting during project creation led to corrupt projects.

- The last open project is not reset if the app crashes.

Small Update – Version 1.2 Released 20th April 2016

We have just released another small update to version 1.2 of the Log File Analyser. This release includes –

- Improved auto discovery of log file format on import.

- Fixed crashes when opening a project, on log file import, when mistakenly importing a trace file, and when cancelling project creation on Windows.

- Fix for drag and drop from WinRAR causing blank filenames.

- Fix for issue with parsing of W3C log files that are delimited on tabs instead of spaces.
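Tab versus space delimiting stops mattering if each data line is split on any run of whitespace and matched against the most recent `#Fields:` directive. A sketch under the assumption that no field value contains unescaped whitespace (IIS usually writes spaces in user-agents as `+`, but the 1.8 notes below mention IIS URIs with spaces, which this would not handle); lines whose value count doesn't match the field list are skipped. Re-reading `#Fields:` whenever it appears also copes with mid-file format changes. `parse_w3c` is a hypothetical name.

```python
from typing import Iterable, Iterator, List


def parse_w3c(lines: Iterable[str]) -> Iterator[dict]:
    """Parse W3C extended log lines into dicts keyed by the #Fields names."""
    fields: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("#Fields:"):
            # A new directive replaces the current field list.
            fields = line[len("#Fields:"):].split()
        elif line.startswith("#") or not line.strip():
            continue  # other directives (#Software, #Date, ...) and blank lines
        elif fields:
            values = line.split()  # any run of tabs or spaces
            if len(values) == len(fields):
                yield dict(zip(fields, values))
```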

Small Update – Version 1.3 Released 3rd May 2016

We have just released another small update to version 1.3 of the Log File Analyser. This release includes –

- Support for Amazon CloudFront double percent-encoded user agent fields.

- Fix for response code 499 causing log files not to be read.

- Fix for importing Apache logs with IPv6 hostnames.

- Fix for importing Apache logs with a duplicate field REQUEST_LINE.

- Support for log files in reverse chronological order.

- Fixed crash in progress dialog.
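Double percent-encoding means the encoding was applied twice, so a space appears as `%2520` rather than `%20`. A sketch of undoing it with the standard library's `urllib.parse.unquote`, assuming at most two rounds of encoding; the function name is hypothetical, and a user-agent that legitimately contains `%25` would be over-decoded.

```python
from urllib.parse import unquote


def decode_cloudfront_user_agent(value: str, max_rounds: int = 2) -> str:
    """Undo (possibly double) percent-encoding of a user-agent field.

    Stops early once a round of decoding no longer changes the string.
    """
    for _ in range(max_rounds):
        decoded = unquote(value)
        if decoded == value:
            break
        value = decoded
    return value
```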

Small Update – Version 1.4 Released 16th May 2016

We have just released another small update to version 1.4 of the Log File Analyser. This release includes –

- Fix for file in use by another process warning on Windows, that when importing the same file again incorrectly detected as a valid domain.

- Fix for user-agent detection failing to recognise Googlebot-Image/1.0.

Small Update – Version 1.5 Released 25th July 2016

We have just released another small update to version 1.5 of the Log File Analyser. This release includes –

- Fix for Windows users unable to launch with Java 8 update 101.

- Reduced the number of mandatory fields required to import a log file.

- Improved performance of ‘Not In Log File / Not In URL Data’ filters.

- Fixed several crashes when importing invalid log files.

Small Update – Version 1.6 Released 17th August 2016

We have just released another small update to version 1.6 of the Log File Analyser. This release includes –

- Fix for out of memory error when importing large Excel files.

- The Linux version can now be run with the openjdk and openjfx packages instead of Oracle’s JRE.

- Tar files can now be extracted; previously this had to be done outside of the LFA.

- Individual request line components for Apache log files now supported.

- IIS Advanced Logging Module W3C format now supported.

- W3C mid-file format changes now supported.

- W3C x-host-header and cs-protocol fields now supported, which allows multiple site URLs to be read from W3C files if these are present.

Small Update – Version 1.7 Released 8th November 2016

We have just released another small update to version 1.7 of the Log File Analyser. This release includes –

- Fixed issue with graphs displaying without colour.

- Recently used projects list shown when no project is open.

- Minimise the number of fields required to import a log file.

- Support for Nginx request_time field.

- Support for W3C cs-uri and cs-host fields.

- Improved import performance.

- Improved performance when comparing with URL data.

- Handling of mid-file format changes.

- Fixes for several crashes when faced with corrupt or invalid log files.

- Fixed issue with not being able to import URL Data files with data type changes.

Small Update – Version 1.8 Released 20th December 2016

We have just released another small update to version 1.8 of the Log File Analyser. This release includes –

- Support for W3C date-local and time-local fields.

- Prevent the window restoring to an off-screen position.

- Better detection of file types.

- Fix: Corrupt project when importing URL Data.

- Fix: Unable to import Apache log with response code but no response bytes field.

- Fix: Unable to parse IIS URIs with spaces.

- Fix: OS X launcher gives hard to understand message about updating Java.

- Fix: Progress dialog appears over site url dialog preventing import.

- Fix: Crash on importing bad zip file.

Great news! Now, we have to test it and play with it ;) Thanks!

Thanks Miguel, hope you find it useful.

Priceless tool for professional use. One of the best tools for SEO Specialists.

Is there a max file size for the app?

Feature Request!

Ability to sftp to server to view log files.

Cheers B.

No max file size, but the larger the file, the longer it takes to import and to generate the data and views. If you have any issues, ping the details through to support.

Thanks for the feature request as well, we have that in our development queue to consider.

Dan

Great Job Dan and Team Screaming Frog. It was about time we had a tool like Screaming Frog to play with this data. Who else could do it better than the folks who built Screaming Frog? Now that you guys have done it, it feels so natural and obvious. Kudos to you guys. Downloading it now to play with it.

Thanks Nakul!

Really really good.

Super-Feature Request!

ability to connect and synchronize directly to the server AND

ability to extract the URL/directory crawl-rate in a configurable period of time AND

ability to set automatic alerts (via email) when the crawl-rate of a URL/directory varies by a configurable percentage

Hi Piersante,

Three superb suggestions, thank you.

We had the first couple on the list already and I’ll add the other :-)

Cheers.

Dan

Cool, I can’t wait to do my first analysis :)

Hope you like it Steven!

This is a really awesome new tool, very impressed! Love the clean user interface and ease of use compared to some of the other tools I was using before.

Cheers Chris!

VERY handy tool! I have a blog post coming soon with some case studies on how you can use this to improve your organic performance :)

Awesome! Now no more Excel and formulas to get insights from log files.

Great app! Definitely an essential app nowadays for professionals!

The software provides data involves seeing, thank you very much!

Great Tool,

as a technical SEO specialist, I will integrate it into our processes. It lets us easily check which sites Googlebot crawls.

regards,

John

Lots of really awesome improvements going on! Good job!

This is wicked! I imagine a lot of blog posts are on the way…

I love this tool.

Thank you, team Screaming Frog

Awesome tool and great news! Thanks for the work. I love Screaming Frog :)

Wow, this is fantastic. We’re putting so much more effort into crawl budget and efficiency than even 12 months ago. For me, the hardest part has been measuring these trends with anything truly worthwhile.

Your SEO Spider is easily the best £99 we ever spent and this is likely the next purchase on the list – time to download the trial!

I appreciate your efforts. For me, ScreamingFrog is a very useful tool.

Thanks to the developers!

I love this tool.

Thank you team Screaming Frog!

Great tool! I found it very useful! Thanks for the information ;)

I really like all the fantastic improvements you’re developing.

Congratulations!

Your tools work great for me – especially the spider.

What I’d like to see in the log file analyser is a chart (like the ones in the overview panel) that shows me the events over time for a single URL, so I wouldn’t have to export the data to Excel to draw it there. Or did I just miss this?

Very useful tool for business and eCommerce sites. With Screaming Frog it’s easy to analyze meta tags, images, heading tags and page status for every site.

All of Screaming Frog’s tools are my favorites, especially the SEO Spider. I use it daily for website analytics.

We find this tool really helpful for our everyday use. We also love the support that we get with the product. We’ll definitely be long-term users of this product.

A super tool that cannot be missing from our repository