Authentication

Posted 12 December, 2016 by Dan Sharp in Authentication

Configuration > Authentication

The SEO Spider supports two forms of authentication: standards-based authentication, which covers basic and digest authentication, and web forms-based authentication.

Check out our video guide on how to crawl behind a login, or carry on reading below.

Basic & Digest Authentication

There is no set-up required for basic and digest authentication. It is detected automatically when the crawl reaches a page that requires a login. If you visit the website and your browser gives you a pop-up requesting a username and password, that is basic or digest authentication. If the login screen is contained in the page itself, it is web form authentication, which is discussed in the next section.
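As a rough illustration of what "detected automatically" means at the HTTP level: a server protected by basic or digest authentication answers with status 401 and a `WWW-Authenticate` header naming the scheme. The sketch below classifies such a challenge. The function name `auth_scheme` is hypothetical, and this is not a description of the SEO Spider's internal logic.

```python
from typing import Optional


def auth_scheme(status: int, www_authenticate: Optional[str]) -> Optional[str]:
    """Return 'basic' or 'digest' for a 401 challenge, otherwise None.

    Hypothetical helper for illustration only.
    """
    if status != 401 or not www_authenticate:
        return None
    parts = www_authenticate.split(None, 1)
    if not parts:
        return None
    # The scheme is the first token of the header and is case-insensitive.
    scheme = parts[0].lower()
    return scheme if scheme in {"basic", "digest"} else None


print(auth_scheme(401, 'Basic realm="staging"'))
print(auth_scheme(401, 'Digest realm="staging", nonce="abc123"'))
```

A login form embedded in the page would normally return a 200 or a redirect rather than a 401 challenge, which is why it needs the separate web form workflow.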

Sites in development are often blocked via robots.txt as well, so make sure this is not the case or use the ‘ignore robots.txt’ configuration. Then simply insert the staging site URL and start the crawl, and a pop-up box will appear, just like it does in a web browser, asking for a username and password.
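To check up front whether a staging site's robots.txt would block the crawl, a quick test with Python's standard `urllib.robotparser` can help. This is a minimal sketch: the robots.txt content and the staging URL are made-up examples, and the user-agent token is an assumption that should be replaced with whatever user agent you have configured.

```python
from urllib.robotparser import RobotFileParser


def is_blocked(robots_txt: str, url: str,
               user_agent: str = "Screaming Frog SEO Spider") -> bool:
    """Return True if the given robots.txt text disallows `url` for `user_agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Typical staging set-up: everything disallowed for every crawler.
STAGING_ROBOTS = "User-agent: *\nDisallow: /\n"

print(is_blocked(STAGING_ROBOTS, "https://staging.example.com/page"))  # True
print(is_blocked("", "https://staging.example.com/page"))              # False
```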

Enter your credentials and the crawl will continue as normal.

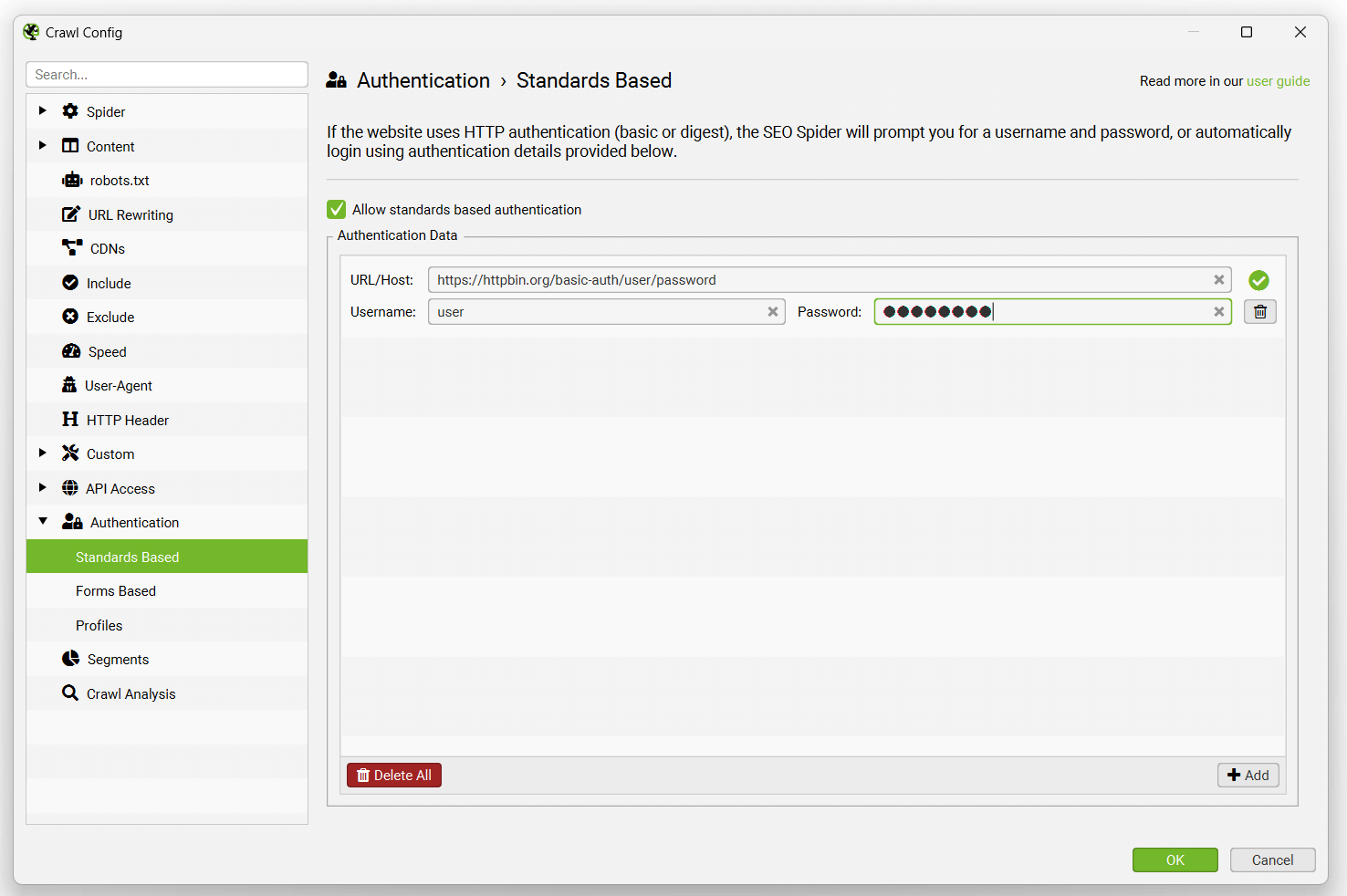

Alternatively, you can pre-enter login credentials via ‘Config > Authentication’ by clicking ‘Add’ on the Standards Based tab.

Then input the URL, username and password.

Credentials entered in the authentication config are remembered until they are deleted.

This feature does not require a licence key. Try the following pages to see how authentication works in your browser, or in the SEO Spider.

- Basic Authentication Username: user Password: password

- Digest Authentication Username: user Password: password
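For reference, this is roughly what a client sends once the test credentials above are accepted for basic authentication: the username and password are joined with a colon, base64-encoded, and placed in an `Authorization` header. The helper name `basic_auth_header` is hypothetical. Digest authentication works differently, sending a hashed response to a server challenge instead of the encoded password, and is not shown here.

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of the Authorization header for basic authentication."""
    raw = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return f"Basic {token}"


print(basic_auth_header("user", "password"))  # Basic dXNlcjpwYXNzd29yZA==
```

Because base64 is an encoding rather than encryption, basic authentication credentials are only protected in transit when the site is served over HTTPS.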

Web Form Authentication

Other sites and areas sit behind a web form login and use cookies for authentication, so you must log in before you can view or crawl them. The SEO Spider allows users to log in to these web forms within its built-in Chromium browser, and then crawl the site. This feature requires a licence.

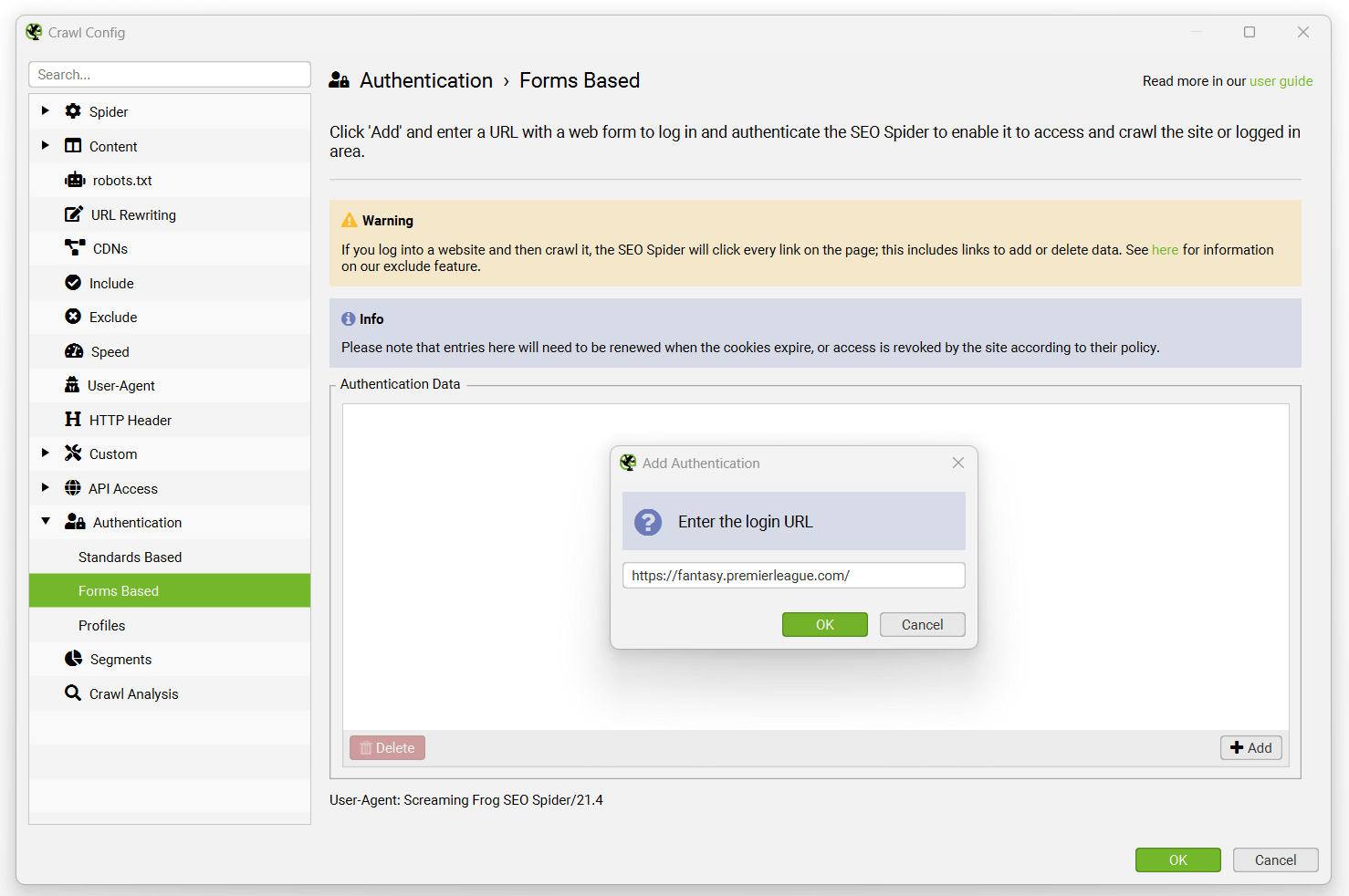

To log in, navigate to ‘Configuration > Authentication’ then switch to the ‘Forms Based’ tab, click the ‘Add’ button, enter the URL for the site you want to crawl, and a browser will pop up allowing you to log in.

Please read our guide on crawling web form password protected sites in our user guide before using this feature. Some websites may also require JavaScript rendering to be enabled when logged in before they can be crawled.

Please note – This is a very powerful feature, and should therefore be used responsibly. The SEO Spider clicks every link on a page; when you’re logged in that may include links to log you out, create posts, install plugins, or even delete data.

Authentication Profiles

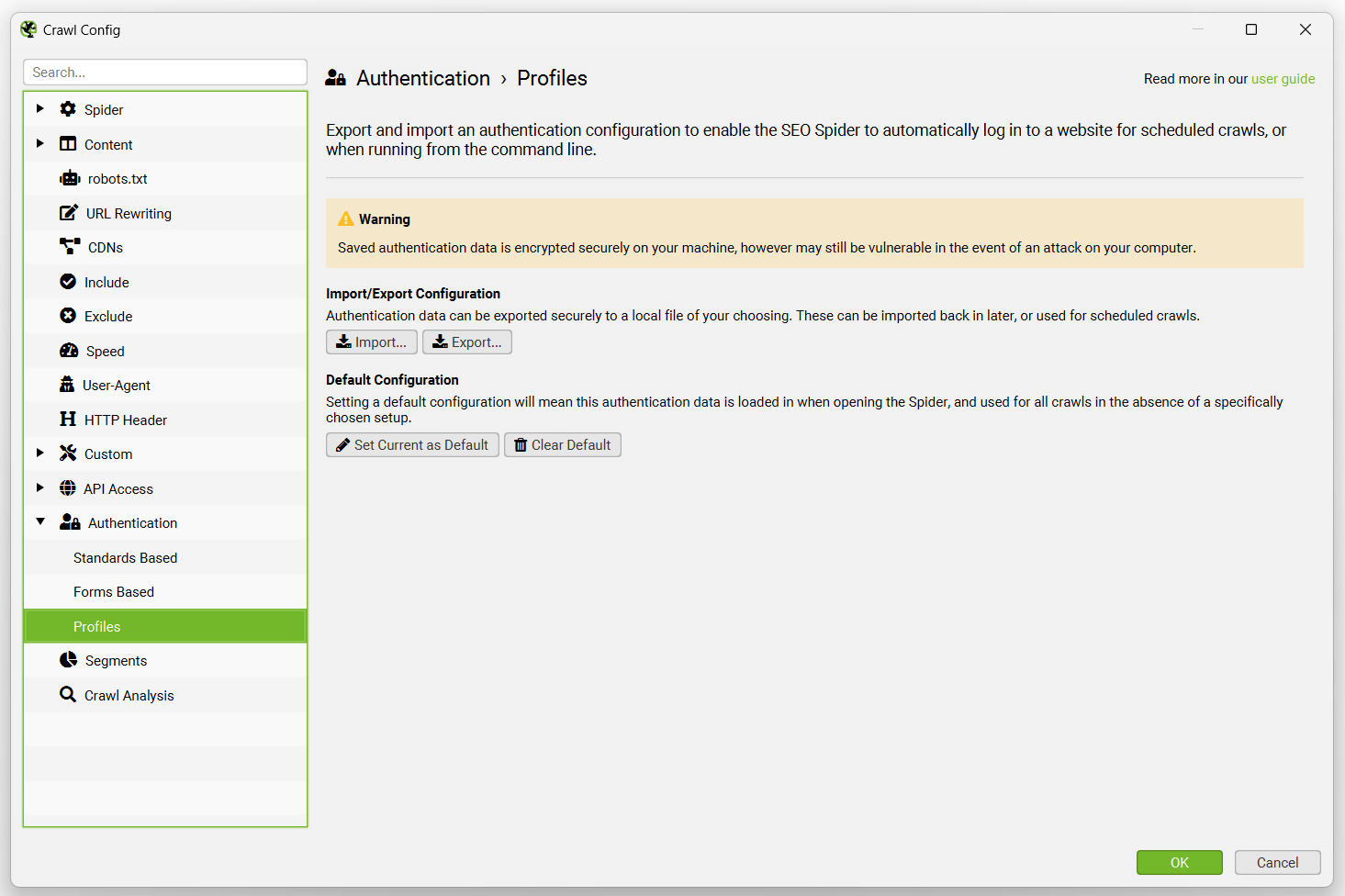

The authentication profiles tab allows you to export an authentication configuration to be used with scheduling, or the command line.

This means it’s possible for the SEO Spider to log in to standards and web forms based authentication for automated crawls.

When you have authenticated via standards based or web forms authentication in the user interface, you can visit the ‘Profiles’ tab, and export an .seospiderauthconfig file.

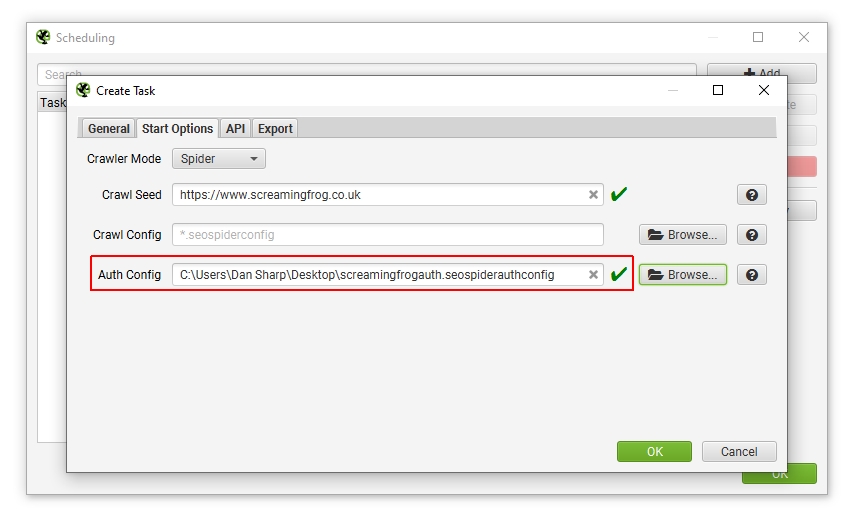

This can be supplied in scheduling via the ‘start options’ tab, or using the ‘auth-config’ argument for the command line as outlined in the CLI options.
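As a hedged sketch of how an exported profile might be passed on the command line from a script: the example below only builds the argument list and prints it. The binary name `screamingfrogseospider`, the `--crawl` and `--headless` flags, the `--auth-config` spelling of the ‘auth-config’ argument, the URL, and the file path are all assumptions for illustration; check the CLI options for the exact syntax on your platform.

```python
import shlex
from typing import List


def build_crawl_command(url: str, auth_config_path: str,
                        binary: str = "screamingfrogseospider") -> List[str]:
    """Assemble a headless crawl command that supplies an auth profile.

    The binary name and flag spellings are assumptions; verify them
    against the CLI options before use.
    """
    return [
        binary,
        "--crawl", url,
        "--headless",
        "--auth-config", auth_config_path,
    ]


cmd = build_crawl_command(
    "https://staging.example.com/",           # hypothetical site
    "/home/user/staging.seospiderauthconfig",  # hypothetical exported profile
)
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Passing the arguments as a list rather than a single string avoids quoting problems when the profile path contains spaces.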

Please note – We can’t guarantee that automated web forms authentication will always work, as some websites expire login tokens or require two-factor authentication (2FA). Exporting or saving a default authentication profile will store an encrypted version of your authentication credentials on disk using AES-256 Galois/Counter Mode.

Troubleshooting

- Forms based authentication uses the configured User Agent. If you are unable to log in, try setting the User Agent to Chrome or another browser.