Screaming Frog SEO Spider Update – Version 23.0

Dan Sharp

Posted 20 October, 2025 by Dan Sharp in Screaming Frog SEO Spider

We’re quite pleased to announce Screaming Frog SEO Spider version 23.0, codenamed internally as ‘Rush Hour’.

The SEO Spider has a number of integrations, and the core of this release is keeping these integrations updated for users to avoid breaking changes, as well as smaller feature updates.

So, let’s take a look at what’s new.

1) Lighthouse & PSI Updated to Insight Audits

Lighthouse and PSI are being updated with the latest improvements to PageSpeed advice, which has now been reflected in the SEO Spider.

This comes as part of the evolution of Lighthouse performance audits and DevTools performance panel insights into consolidated and consistent audits across tooling.

In Lighthouse 13 some audits have been retired, while others have been consolidated or renamed.

The updates are fairly large and include changes to audits and naming in Lighthouse that users have long been familiar with, including breaking changes to the API. For example, previously separate insights around images have been consolidated into a single ‘Improve Image Delivery’ audit.

There are pros and cons to these changes, but after the initial frustration with having to re-learn some processes, the changes do mostly make sense.

The groupings make providing some recommendations more efficient, and it’s still largely possible to get the granular detail required in bulk from consolidated audits.

To get ahead of the (breaking) changes, we have updated our PSI integration to match those across Lighthouse and PSI for consistency.

The changes to PageSpeed Issues are listed below.

7 New Issues –

- Document Request Latency

- Improve Image Delivery

- LCP Request Discovery

- Forced Reflow

- Avoid Enormous Network Payloads

- Network Dependency Tree

- Duplicated JavaScript

11 Removed or Consolidated Issues –

- Defer Offscreen Images

- Preload Key Requests

- Efficiently Encode Images (now part of ‘Improve Image Delivery’)

- Properly Size Images (now part of ‘Improve Image Delivery’)

- Serve Images in Next-Gen Formats (now part of ‘Improve Image Delivery’)

- Use Video Formats for Animated Content (now part of ‘Improve Image Delivery’)

- Enable Text Compression (now part of ‘Document Request Latency’)

- Reduce Server Response Times (TTFB) (now part of ‘Document Request Latency’)

- Avoid Multiple Page Redirects (now part of ‘Document Request Latency’)

- Preconnect to Required Origins (now part of ‘Network Dependency Tree’)

- Image Elements Do Not Have Explicit Width & Height (now part of ‘Layout Shift Culprits’)

6 Renamed Issues –

- Eliminate Render-Blocking Resources (now ‘Render Blocking Requests’)

- Avoid Excessive DOM Size (now ‘Optimize DOM Size’)

- Serve Static Assets with an Efficient Cache Policy (now ‘Use Efficient Cache Lifetimes’)

- Ensure Text Remains Visible During Webfont Load (now ‘Font Display’)

- Avoid Large Layout Shifts (now ‘Layout Shift Culprits’)

- Avoid Serving Legacy JavaScript to Modern Browsers (now ‘Legacy JavaScript’)
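
If you maintain your own reports on top of PageSpeed data, the lists above can be captured as a simple lookup. The following is a minimal Python sketch, not anything shipped in the SEO Spider: the names `REMOVED_OR_CONSOLIDATED`, `RENAMED` and `migrate_issue_name` are hypothetical, and the mapping is transcribed from the lists above.

```python
from typing import Optional

# Old issue name -> consolidated issue name, transcribed from the lists above.
# None marks an issue that was removed with no named replacement.
REMOVED_OR_CONSOLIDATED = {
    "Defer Offscreen Images": None,
    "Preload Key Requests": None,
    "Efficiently Encode Images": "Improve Image Delivery",
    "Properly Size Images": "Improve Image Delivery",
    "Serve Images in Next-Gen Formats": "Improve Image Delivery",
    "Use Video Formats for Animated Content": "Improve Image Delivery",
    "Enable Text Compression": "Document Request Latency",
    "Reduce Server Response Times (TTFB)": "Document Request Latency",
    "Avoid Multiple Page Redirects": "Document Request Latency",
    "Preconnect to Required Origins": "Network Dependency Tree",
    "Image Elements Do Not Have Explicit Width & Height": "Layout Shift Culprits",
}

# Old issue name -> new issue name for straight renames.
RENAMED = {
    "Eliminate Render-Blocking Resources": "Render Blocking Requests",
    "Avoid Excessive DOM Size": "Optimize DOM Size",
    "Serve Static Assets with an Efficient Cache Policy": "Use Efficient Cache Lifetimes",
    "Ensure Text Remains Visible During Webfont Load": "Font Display",
    "Avoid Large Layout Shifts": "Layout Shift Culprits",
    "Avoid Serving Legacy JavaScript to Modern Browsers": "Legacy JavaScript",
}


def migrate_issue_name(old_name: str) -> Optional[str]:
    """Return the post-update issue name, None if the issue was removed,
    or the input unchanged if it is not in either list."""
    if old_name in RENAMED:
        return RENAMED[old_name]
    if old_name in REMOVED_OR_CONSOLIDATED:
        return REMOVED_OR_CONSOLIDATED[old_name]
    return old_name


if __name__ == "__main__":
    for name in ("Properly Size Images", "Avoid Excessive DOM Size", "Defer Offscreen Images"):
        print(name, "->", migrate_issue_name(name))
```

A lookup like this could be used to relabel columns in an existing spreadsheet or dashboard, though consolidated audits will not map one-to-one onto the old data.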

Older metrics, such as first meaningful paint, have also been removed.

If you’re running automated crawl reports in Looker Studio and have selected PageSpeed data, then you will find that some columns will not populate after updating.

The next time you ‘edit’ the scheduled crawl task, you will also be required to make some updates due to these breaking changes.

The SEO Spider provides an in-app warning, and advice in our Looker Studio Export Breaking Changes FAQ on how to solve it quickly.

2) Ahrefs v3 API Update

The SEO Spider has been updated to integrate v3 of the Ahrefs API after they announced plans to retire v2 of the API and introduced Ahrefs Connect for apps.

This allows users on any paid plan (not just enterprise) to access data from their latest API via our integration. The format is similar to the previous integration; however, users will be required to re-authenticate using the new OAuth flow introduced by Ahrefs.

You’re able to pull metrics around backlinks, referring domains, URL rating, domain rating, organic traffic, keywords, cost and more.

Metrics have been added, removed and renamed where appropriate. Read more on the Ahrefs integration in our user guide.

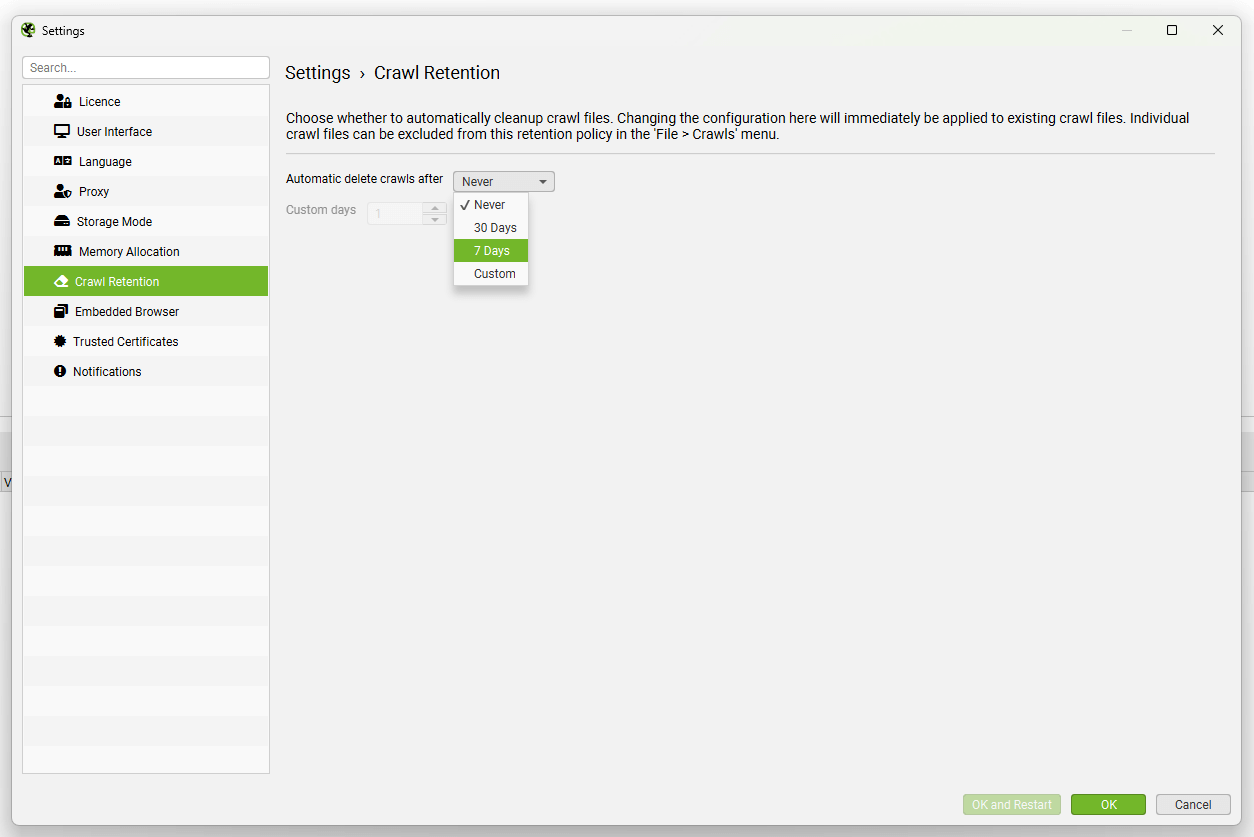

3) Auto-Deleting Crawls (Crawl Retention)

Crawls are automatically saved and available to be opened or deleted via the ‘File > Crawls’ menu in default database storage mode. To date, the only way to delete database crawls was to delete them manually via this dialog.

However, users are now able to automate deleting crawls via new ‘Crawl Retention’ settings available in ‘File > Settings > Crawl Retention’.

You do not need to worry about disappearing crawls, as by default the crawl retention settings are set to ‘Never’ automatically delete crawls.

The crawl retention functionality allows users to automatically delete crawls after a period of time, which can be useful for anyone who doesn’t want to keep crawls but does want to take advantage of the scale that database storage mode offers (over memory storage).

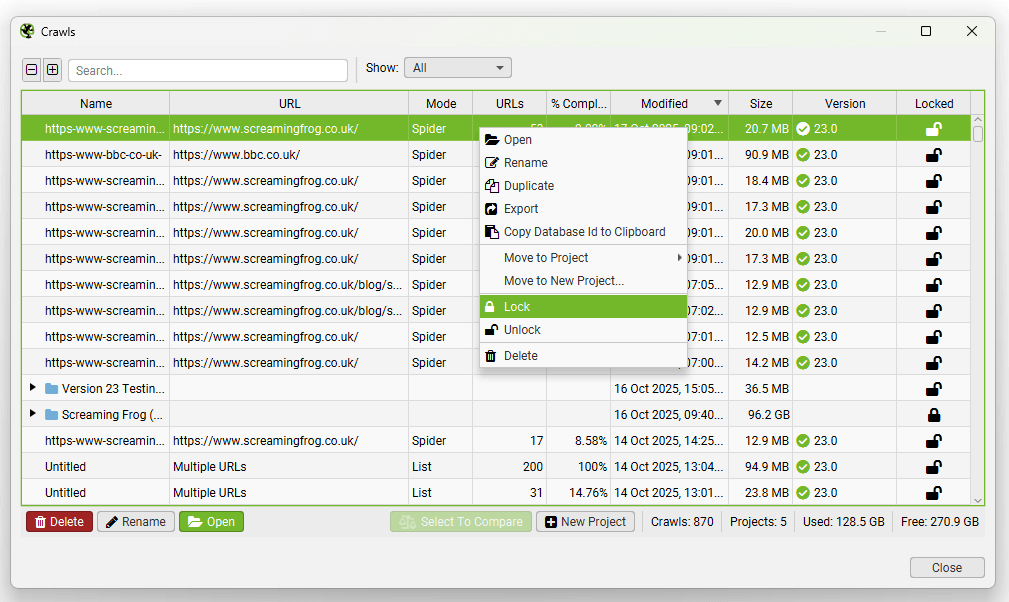

As part of this feature, we also introduced the ability to ‘Lock’ projects or specific crawls in the ‘File > Crawls’ menu to prevent them from being deleted. If you wish to lock a single crawl or all crawls in a project, just right click and select ‘Lock’.

For project folders, this will lock all existing and future crawl files, including scheduled crawls, from being automatically deleted via the retention policy settings.
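
To make the interaction between retention and locking concrete, here is a minimal Python sketch of the selection logic as described above. It is an illustration under assumptions, not the SEO Spider's implementation: the `Crawl` class, its fields and the `crawls_to_delete` function are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Set


@dataclass
class Crawl:
    # Hypothetical record for a stored crawl.
    name: str
    project: str
    finished: datetime
    locked: bool = False


def crawls_to_delete(
    crawls: List[Crawl],
    retention: Optional[timedelta],
    locked_projects: Set[str],
    now: datetime,
) -> List[Crawl]:
    """Select crawls older than the retention period that are not protected.

    retention=None models the default 'Never' setting: nothing is deleted.
    A crawl is protected if it is locked itself or sits in a locked project.
    """
    if retention is None:
        return []
    cutoff = now - retention
    return [
        crawl
        for crawl in crawls
        if crawl.finished < cutoff
        and not crawl.locked
        and crawl.project not in locked_projects
    ]


if __name__ == "__main__":
    now = datetime(2025, 10, 20)
    crawls = [
        Crawl("old", "client-a", datetime(2025, 1, 1)),
        Crawl("old-locked", "client-a", datetime(2025, 1, 1), locked=True),
        Crawl("old-in-locked-project", "client-b", datetime(2025, 1, 1)),
        Crawl("recent", "client-a", datetime(2025, 10, 1)),
    ]
    for crawl in crawls_to_delete(crawls, timedelta(days=90), {"client-b"}, now):
        print("would delete:", crawl.name)
```

Checking the project lock at selection time, rather than copying a flag onto each crawl, is one way to model a lock that also covers future crawls in the project.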

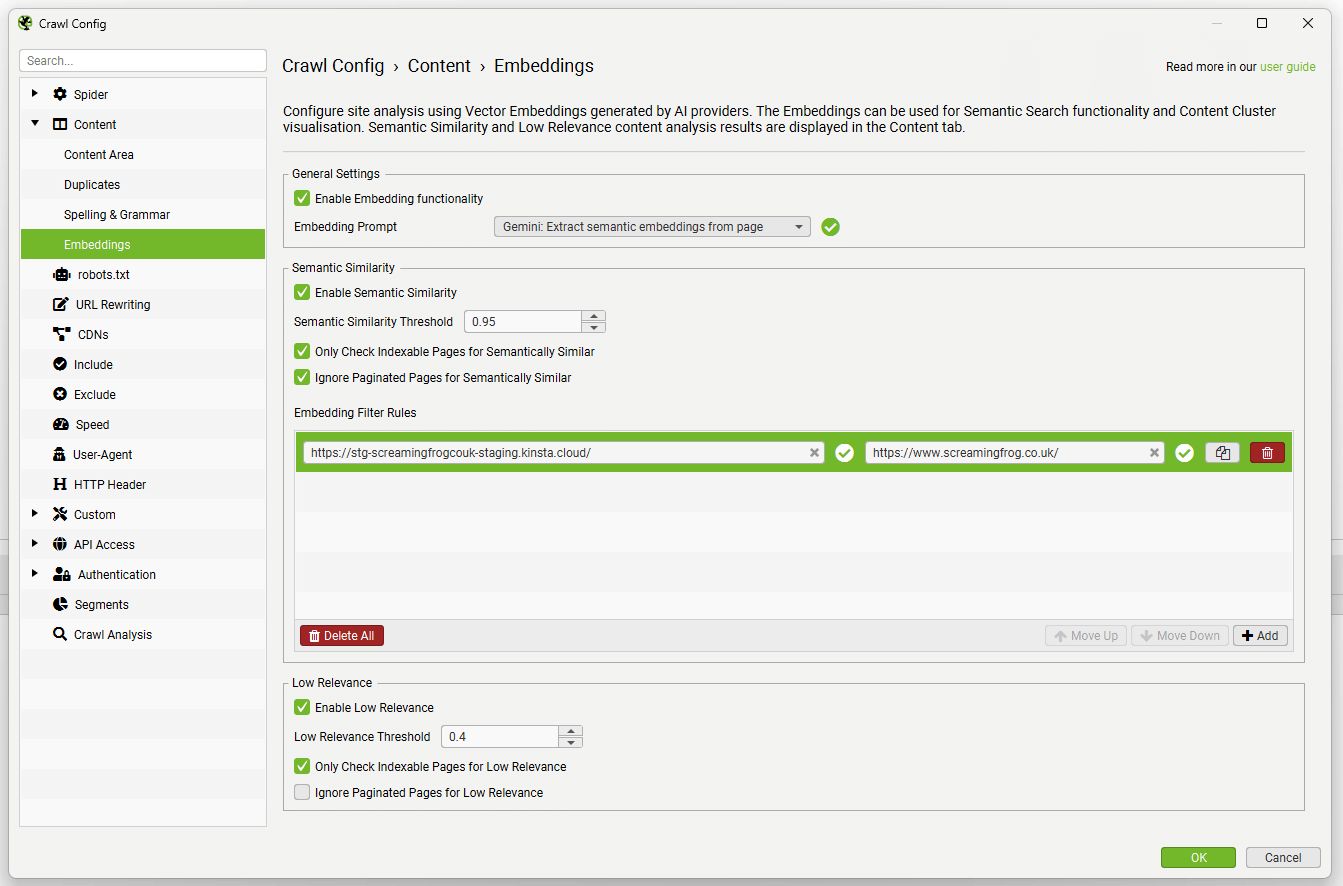

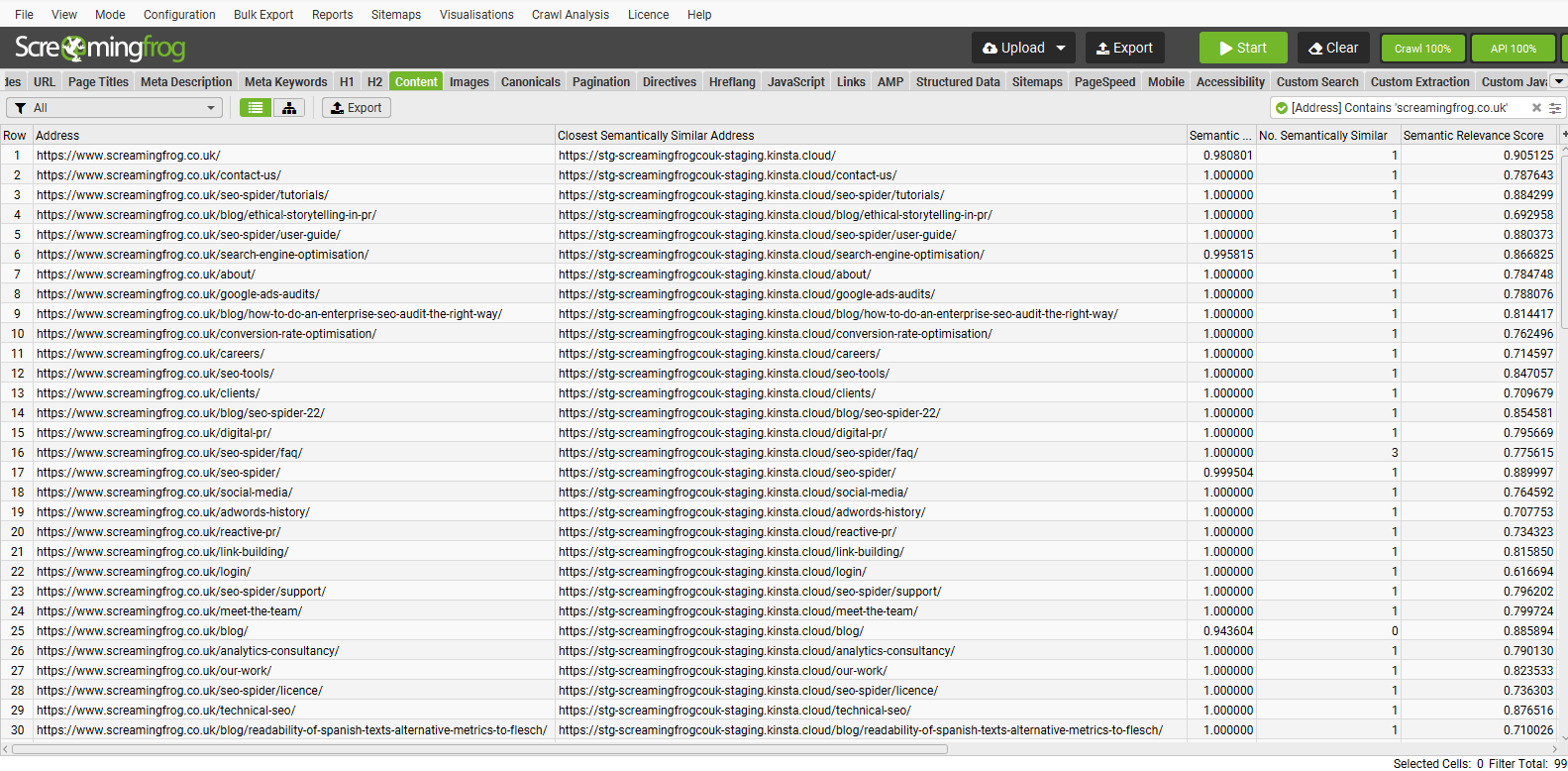

4) Semantic Similarity Embedding Rules

You can now set embedding rules via ‘Config > Content > Embeddings’, which allows you to define URL patterns for semantic similarity analysis.

This means if you’re using vector embeddings for redirect mapping, as an example, you can add a rule to only find semantic matches for the staging site on the live website (so pages from the staging website itself are not considered as well).

In the above, the closest semantically similar address can only be the staging site for the live site.

This can also be used in a variety of other ways, such as if you wanted to see the closest matches between two specific areas of a website, or between a page and multiple external pages.
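
The idea of restricting matches by URL pattern can be sketched in a few lines of Python. This is only an illustration of the concept, assuming each URL already has an embedding vector: the function names, the regular-expression rule format, the example hostnames and the toy two-dimensional vectors are all hypothetical and do not reflect how the SEO Spider stores or evaluates its rules.

```python
import math
import re
from typing import Dict, List, Optional, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def closest_match(
    url: str,
    embeddings: Dict[str, List[float]],
    source_pattern: str,
    target_pattern: str,
) -> Optional[Tuple[str, float]]:
    """Find the most similar URL, considering only candidates that match
    target_pattern. Returns None if url is outside source_pattern or no
    candidate qualifies."""
    if not re.search(source_pattern, url):
        return None
    candidates = [
        (cosine(embeddings[url], vector), other)
        for other, vector in embeddings.items()
        if other != url and re.search(target_pattern, other)
    ]
    if not candidates:
        return None
    score, best = max(candidates)
    return best, score


if __name__ == "__main__":
    # Toy vectors for illustration only.
    embeddings = {
        "https://staging.example.com/shoes": [1.0, 0.0],
        "https://staging.example.com/boots": [0.9, 0.1],
        "https://www.example.com/footwear": [0.8, 0.2],
        "https://www.example.com/about": [0.0, 1.0],
    }
    print(closest_match(
        "https://staging.example.com/shoes",
        embeddings,
        source_pattern=r"^https://staging\.example\.com/",
        target_pattern=r"^https://www\.example\.com/",
    ))
```

Without the target pattern, the nearest neighbour of the staging page in this toy data would be another staging page; with it, only live URLs are considered.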

5) Display All Links in Visualisations

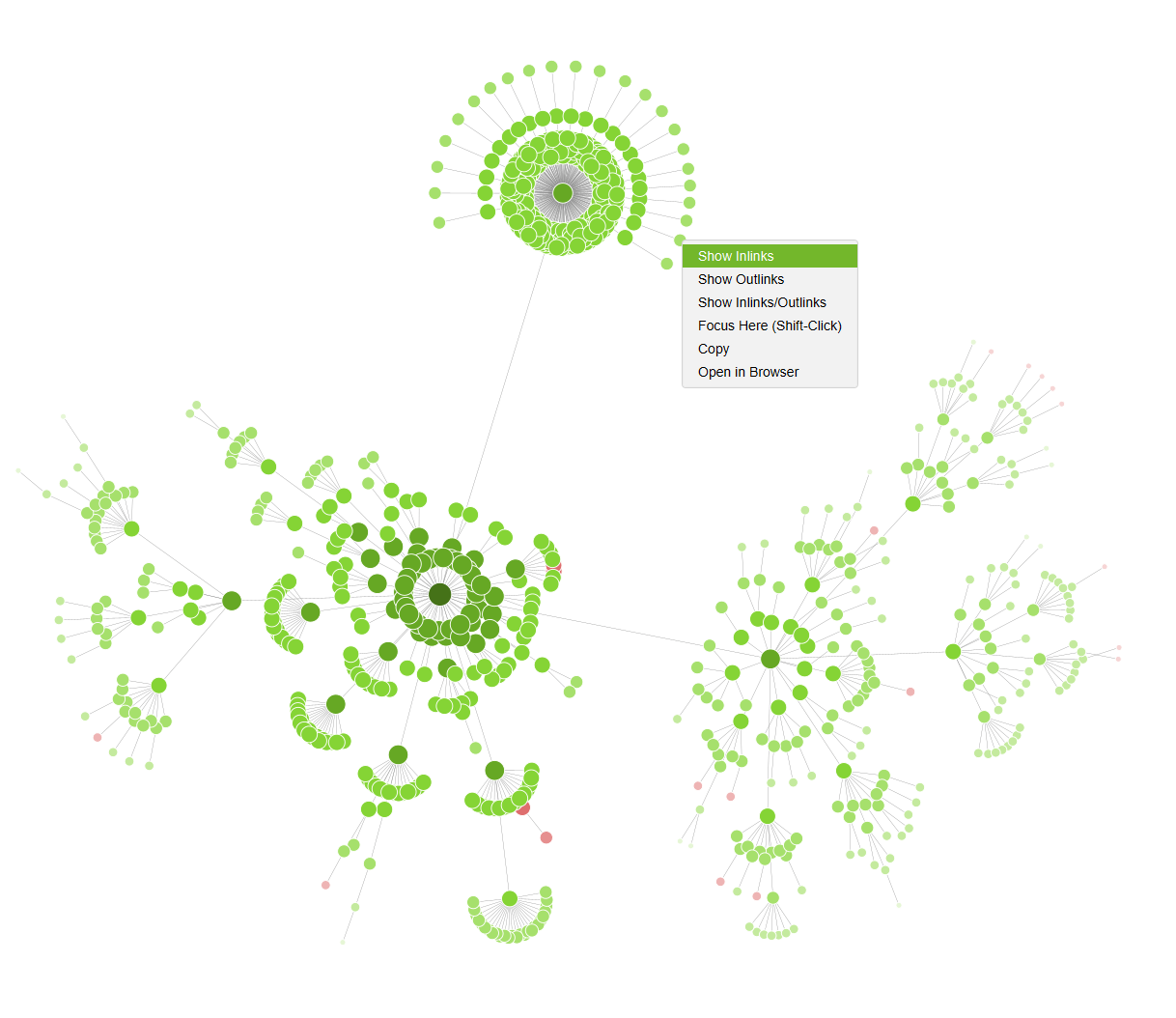

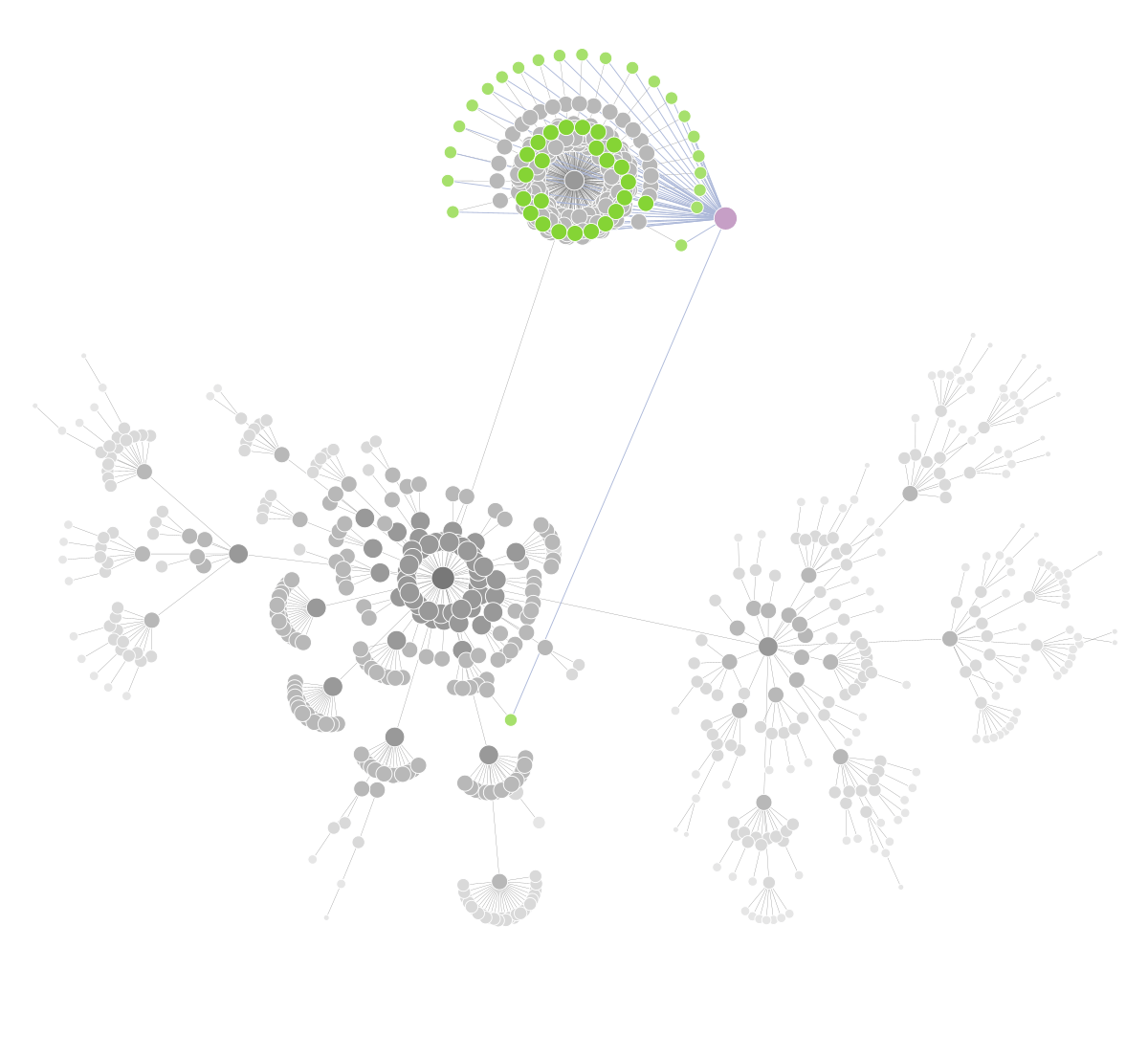

It’s now possible to see all inlink and outlink relationships in our site visualisations.

You can right-click on a node and select to ‘Show Inlinks’, ‘Show Inlinks to Children’ of a node, or perform the same for ‘Outlinks’.

This will update the visualisation to show all incoming links, which can be useful when analysing internal linking to a page or a section of a website.

Linking nodes are highlighted in green, while other nodes are faded to grey.

This option is available across all force-directed diagrams, including the 3D visualisations.

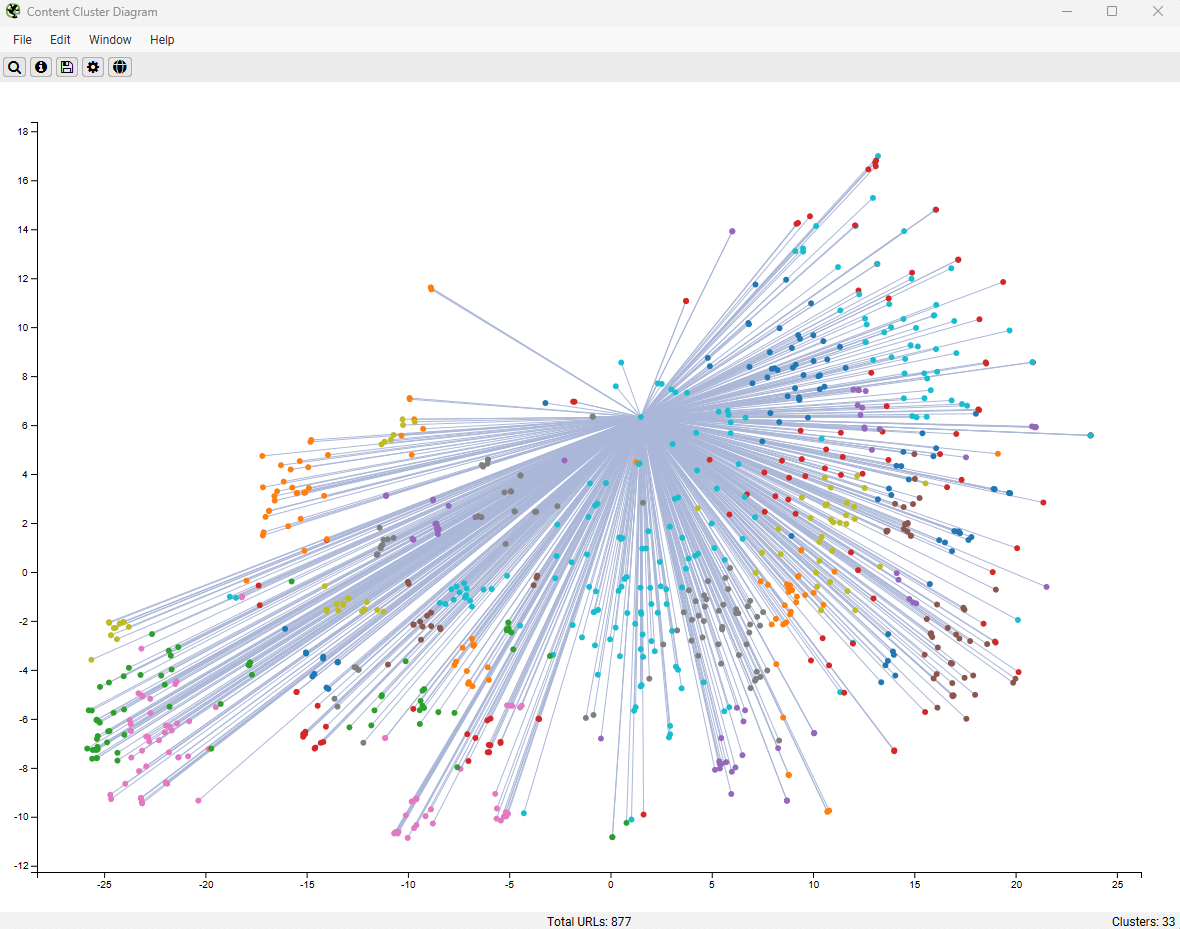

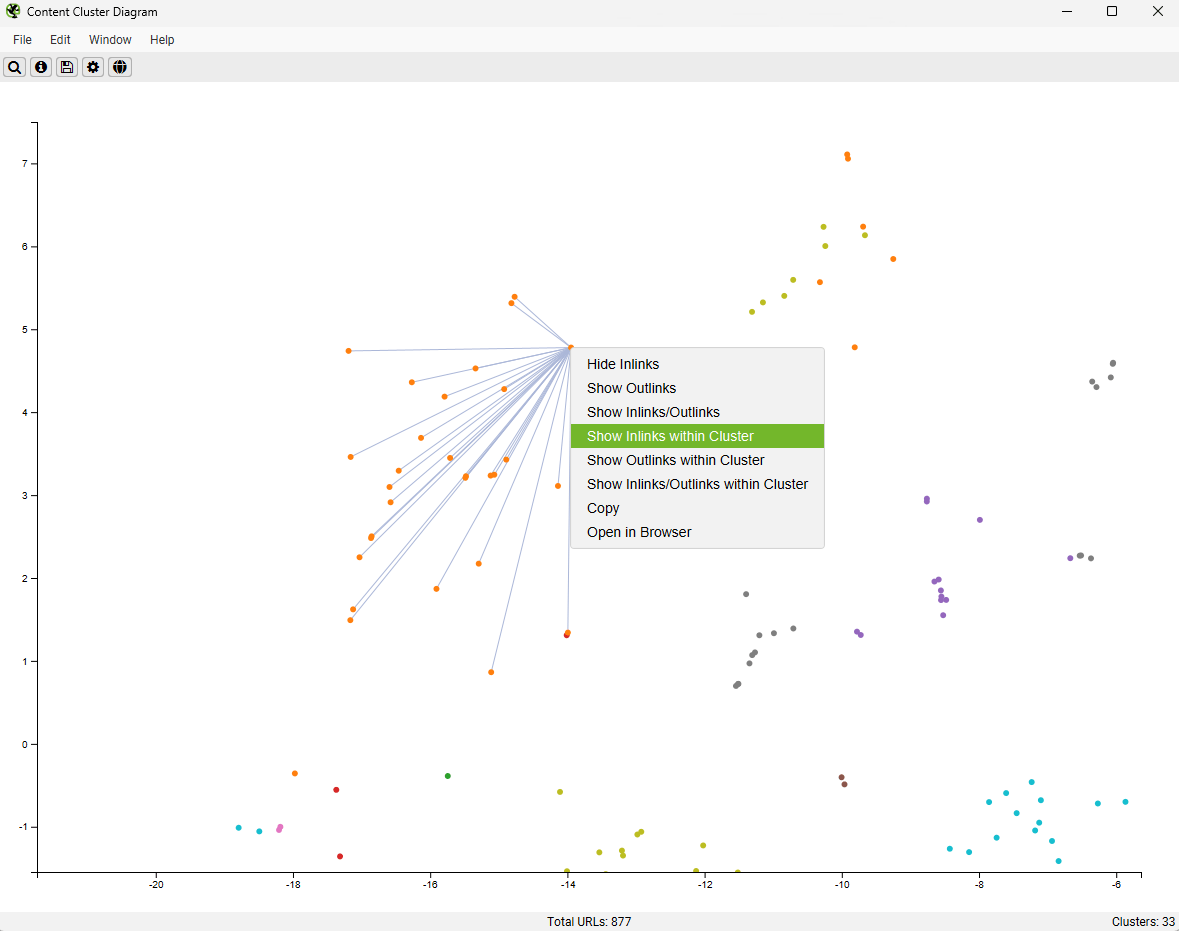

6) Display Links in Semantic Content Cluster Diagram

Similar to site visualisations, you’re now also able to right-click and ‘Show Inlinks’ or outlinks within the semantic Content Cluster Diagram.

As well as viewing all internal links to a page, you can also select to ‘Show Inlinks Within Cluster’, to see if a page is benefiting from links from semantically similar pages.

This can be a useful way to visually identify internal link opportunities or gaps in internal linking based upon semantics.

Other Updates

Version 23.0 also includes a number of smaller updates and bug fixes.

- Limit Crawl Total Per Subdomain – Under ‘Config > Spider > Limits’ you can limit the number of URLs crawled per subdomain. This can be useful in a variety of scenarios, such as crawling a sample number of URLs from X number of domains in list mode.

- Improved Heading Counts – The ‘Occurrences’ count in the h1 and h2 tabs was limited to ‘2’, as only two headings are extracted by default. While only the first two h1 and h2 headings will continue to be extracted, the occurrence number will show the total number of each on a page.

- Move Up & Down Buttons for Custom Search, Extraction & JS for Ordering – The ordering (top to bottom) impacts how they are displayed in columns in tabs (from left to right), so this can now be adjusted without having to delete and add.

- Configurable Percent Encoding of URLs – Percent encoding of URLs is uppercase by default, but a small number of servers redirect to lowercase-only URLs and then error. The encoding case is therefore configurable in ‘Config > URL Rewriting’.

- Irish Language Spelling & Grammar Support – Just for the craic!

- Updated AI Models for System Prompts & JS Snippets – OpenAI system prompts have been updated to use ‘gpt-5-mini’, Gemini to ‘gemini-2.5-flash’ and Anthropic to ‘claude-sonnet-4-5’. As always, we recommend reviewing these models and their costs prior to use.

- New Exports & Reports – There are new ‘All Error Inlinks’ bulk exports under ‘Bulk Export > Response Codes > Internal/External’, which combine no response, 4XX and 5XX errors. There is also a new ‘Redirects to Error’ report under ‘Reports > Redirects’, which includes any redirects that end in a blocked, no response, 4XX or 5XX error.

- Redirection (HTTP Refresh) Filter – While these were reported and followed, a new filter has been introduced to better report these.
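
To illustrate the percent-encoding case difference mentioned in the list above: Python's `urllib.parse.quote` emits uppercase hex digits, and a small helper can switch the case of the escape sequences without touching the rest of the URL. This is a minimal sketch only; `set_escape_case` is a hypothetical helper and says nothing about how the SEO Spider's own setting is implemented.

```python
import re
from urllib.parse import quote

# Matches one percent-encoded byte, such as %C3 or %c3.
_ESCAPE = re.compile(r"%[0-9A-Fa-f]{2}")


def set_escape_case(url: str, upper: bool = True) -> str:
    """Rewrite only the percent-escape sequences in url to upper or lower case.

    Letters outside escape sequences (for example in the path) are untouched.
    """
    def convert(match: "re.Match[str]") -> str:
        escape = match.group(0)
        return escape.upper() if upper else escape.lower()

    return _ESCAPE.sub(convert, url)


if __name__ == "__main__":
    encoded = quote("/Menu/café")  # quote() produces uppercase escapes
    print(encoded)
    print(set_escape_case(encoded, upper=False))
```

The two forms identify the same resource under RFC 3986, which recommends uppercase, so the option only matters for servers that treat them differently.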

That’s everything for version 23.0!

Thanks to everyone for their continued support, feature requests and feedback. Please let us know if you experience any issues with this latest update via our support.

Small Update – Version 23.1 Released 18 November 2025

We have just released a small update to version 23.1 of the SEO Spider. This release is mainly bug fixes and small improvements –

- Add Shopify web bot auth header names to presets in HTTP Header config.

- Add ‘Indexability’ columns to ‘Directives’ tab.

- Mark Google rich result feature ‘Practice Problems’ as deprecated.

- Remove deprecation warning for ‘Book Actions’ Google rich result feature.

- Updated Gemini image generation default model.

- Rev to Java 21.0.9.

- Fixed issue not showing srcset images in lower ‘Image Details’ tab, when ‘Extract Images from srcset Attribute’ not enabled.

- Fixed ‘Reset Columns for All Tables’ not working in certain scenarios.

- Fixed issue with Multi-Export hanging when segments are configured.

- Fixed issue with old configs defaulting to percent encoding mode ‘none’, rather than uppercase.

- Fixed right-click Inlinks/Outlinks etc not working in some visualisations.

- Fixed the content cluster diagram axis in dark mode.

- Fixed spell check happening on partial words.

- Fixed bug with GA/GSC accounts from another instance affecting each other.

- Fixed ‘Blocked by Robots.txt’ URLs also appearing as ‘No Response’ filter.

- Fixed various unique crashes.

Small Update – Version 23.2 Released 16 December 2025

We have just released a small update to version 23.2 of the SEO Spider. This release is mainly bug fixes and small improvements –

- Re-introduce the PageSpeed Opportunities Summary report. Unfortunately, not all data is available for all PageSpeed opportunities.

- Updated trusted certificate discovery, so the site visited is now configurable.

- Updated the AlsoAsked Custom JS Snippet.

- Updated log4j to version 2.25.2.

- Fixed cells with green text being invisible when highlighted.

- Fixed --load-crawl using the wrong config to validate exports.

- Fixed issue with showing too many Google Chrome Console errors.

- Fixed issue with ‘Low Memory’ warning not showing in some low memory situations.

- Fixed issue with crawls sometimes freezing on macOS.

- Fixed issue with ‘Archive Website’ causing ‘undefined’ image URL to be loaded.

- Fixed issue with crawl comparison forgetting config.

- Fixed issue with Ahrefs failing for values outside of 32bit signed range.

- Fixed issue with visual custom extraction using the saved user agent rather than from the current editing config.

- Fixed issue with missing argument for option: crawl-google-sheet in Scheduled Task.

- Fixed various unique crashes.

Small Update – Version 23.3 Released 18 February 2026

We have just released a small update to version 23.3 of the SEO Spider. This release is mainly bug fixes and small improvements –

- With Google’s update on Googlebot’s file size limits actually being 2MB rather than the previously communicated 15MB, we have updated our SEO issues in the tool to ‘HTML Document Over 2MB’ and ‘Resource Over 2mb’ and made the limits configurable in ‘Config > Spider > Preferences’.

- Removed deprecated ‘Gemini text-embedding-004’ and replaced with ‘gemini-embedding-001’ for default Gemini embedding prompts.

- Created a ‘Non-Indexable Page Inlinks Only’ bulk export, available via ‘Bulk Export > Links’.

- Rev Java to 21.0.10.

- Updated Log4j to 2.25.3 to fix TLS hostname issue.

- Fixed issue with only account name being used for command line GSC & GA4 crawls and account properties being ignored.

- Fixed issue with Content Cluster Diagram segment names not being escaped.

- Fixed issue with Cluster 0 showing in Content Cluster Diagram.

- Fixed various unique crashes.
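
As a rough illustration of the configurable size limit mentioned in the list above, the check itself amounts to comparing a byte count with a threshold. This is a minimal Python sketch under stated assumptions: `over_size_limit` is a hypothetical helper, and reading ‘2MB’ as 2 × 1024 × 1024 bytes is an assumption, not something confirmed by the release notes.

```python
# Assumption: '2MB' is interpreted here as 2 * 1024 * 1024 bytes.
DEFAULT_LIMIT_BYTES = 2 * 1024 * 1024


def over_size_limit(body: bytes, limit_bytes: int = DEFAULT_LIMIT_BYTES) -> bool:
    """Return True if the response body is strictly larger than the limit."""
    return len(body) > limit_bytes


if __name__ == "__main__":
    html = b"<html>" + b"x" * DEFAULT_LIMIT_BYTES + b"</html>"
    print(over_size_limit(html))                              # default limit
    print(over_size_limit(html, limit_bytes=15 * 1024 * 1024))  # custom limit
```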

These are great additions, especially the visualization updates.

However, I’d really like to be able to apply custom filters in GA4 to view specific session sources.

For example, I want to see sessions where the source contains a specific word (like Bing or ChatGPT)

It would be great if you could add this functionality as well. Thanks!

Hot dang, the heading count is finally more than 2! I’m sure it’s been like that since I first started using the tool almost a decade ago. Everything else is great and all, but finally being able to see how many headings there are is really nice.

What a Monday surprise. Great work from the SF team!

The crawl retention settings are exactly what I needed for quarterly site reviews—auto-delete old crawls.

#Irish Language Spelling & Grammar Support – Just for the craic!

When does Dunglish come in? ;)

Yeah! Really cool new ScreamingFrog Crawling features! Thanks, can’t wait to try the new visualisation features. That is really helpful to convince stakeholders which parts of the website should get more attention or which should be consolidated.

I noticed that in the latest version (V23), URLs disallowed in robots.txt are appearing under the “Response Codes: Internal Blocked by Robots.txt” category, which is correct. However, the exact same list is also showing up under the “Response Codes: Internal No Response” category, which wasn’t the case in any of the previous versions.

Is this a bug or intentional?

Hi Lorin, thanks for the comment – it’s a bug! If you drop an email to support@, we’ll send you a beta with a fix. Cheers!

Hi Lorin,

Thanks again for reporting this issue.

This has been fixed in version 23.1, which is available now.

Cheers.

Dan

Never questioned my Screaming Frog annual fee. You’ve rocked since the beginning! Thanks to the entire team.

Just wanted to give a big shoutout to Dan Sharp and the whole Screaming Frog crew. Year after year, you keep surprising us with amazing updates. This tool is pure gold. Thanks for everything you do!

Anyone else having issues with the scheduling, and pushing to Google Sheet / Looker Studio? Keeps crashing SF for me (just shuts down when I try to add or edit an existing scheduled crawl).

Emailed support@ – hopefully fixed soon?

Hey Steve,

I understand we replied earlier to you with a fix, shout if you have any further issues at all.

Cheers.

Dan

Great job

Amazing update.

Prediction: won’t be long before the legends at Screaming Frog build visualizations for defined inlink relationships– semantic edges between pages / nodes. AI-enabled, knowledge-graph enabled. Imagining an option in configuration either to set for Anchor text or the far better method, supervised-learning, contextually aware tag (from a defined ontology) labelling the relationship pages/nodes. As we all know anchors are often randomly applied by content creators, web ops folks etc. and “click here” and “learn more” ain’t helping retrieval systems or even the user very much.

A visualization of the link graph at a semantic level will drive a lot of value for those who have moved their orgs towards strategic structured knowledge.

Link equity can be defined as contextual boost rather than a “link juice” boost.

Great update! I’ve been using Screaming Frog for technical audits, and version 23.0 feels faster and more stable. The new crawl summary and UX improvements make it even better for on-page SEO analysis. Thanks for keeping this tool evolving!

Great update! Really excited to see all these new features, especially the JavaScript rendering improvements and the enhanced redirect chain analysis.

Screaming Frog continues to be an essential tool in my SEO toolkit. As a freelance SEO consultant, I’m constantly impressed by how the tool keeps evolving with new features that actually solve real-world crawling and auditing challenges.

Thanks to the team for the continued development and innovation!

Valentin

For me the Crawl retention feature update was much needed, knowing now I can lock them.

Love this release – keeping Lighthouse/PSI and the Ahrefs integration fully up to date plus adding crawl retention and richer link visualisations are exactly the kind of quality-of-life updates that matter in real audits. Thanks to the whole team for continuously polishing a tool many of us use every day.

The crawl retention feature is a great addition! And it is well timed. I was just working on deleting old crawls the other day. It would help a ton though if this could be controlled via the CLI as well!

Great Job!

Thanks

Please add Matomo APIs, thank you!

Hi SF Team, any plan to integrate the Semrush API as you did for Ahrefs?

Hi David,

Yeah, we do have this on the ‘todo’ list as well.

Cheers for the suggestion!

Dan

Great update, Dan. The Semantic Similarity Embedding Rules are a massive time-saver for large-scale migrations. I used to do the staging vs. live matching via Python scripts externally, so having this native capability to filter matches specifically for redirect mapping is a game-changer. Also, the update to the Ahrefs API v3 was much needed. Cheers from Argentina

Love this release — the “Insight Audits” update for Lighthouse/PSI is a big one, and I really appreciate how clearly you’ve mapped what’s new/removed/renamed. The Ahrefs v3 OAuth update is also a lifesaver (anything that prevents surprise breaking changes in reporting gets a big YES from me.)

I really like that all the projects are now saved automatically. Well done guys!

Well done, thanks a lot!

Nice to see this update addressing real-world issues rather than just adding features. The correction to Googlebot’s 2MB limits is especially important, that change could easily catch site owners off guard. Also great to see improvements to exports and stability fixes, plus the Java and Log4j updates for security and compatibility. Small release, but very practical