So You’ve Found JavaScript Issues in Your Site Crawl. What Now?

Amanda King

Posted 1 September, 2023 by Amanda King in Screaming Frog SEO Spider


Vue. React. Angular. There are more JavaScript frameworks and libraries than there have ever been — though don’t quote me on that — and there are new ones coming up every day.

As much as SEO professionals may sometimes hope the Internet entirely abandons JavaScript, it’s here to stay, and we need to be able to debug it in a way that is useful to the developers we’re working with: a way that helps them update their code in a targeted manner, rather than a blanket, unhelpful statement from their SEO that they should “avoid large layout shifts” or “reduce unused JavaScript.”

This guide will cover a sampling of some of the more common JavaScript issues, how to go a step further in your debug process to share more nuance with your developers about the source of the problem, and how to use your own judgement to gauge the degree of the problem, if it is in fact one.

This article is a guest contribution from Amanda King of FLOQ.

A Configuration Disclaimer

Before we dive into this, if you’re using the Screaming Frog SEO Spider for your initial site crawl, you will need to make sure your configuration is set up in a way that allows this kind of JavaScript analysis. What that means is:

- Storing both HTML and rendered HTML (Spider > Extraction > HTML)

- Rendering in JavaScript with at least the timeout default of 5 seconds (Spider > Rendering)

- Connecting to the PageSpeed API (Configuration > API Access > PageSpeed Insights)

The JavaScript Report

In my decade of experience in SEO, I’ve found very few websites that actually change things like the page title, meta description, canonical or other page-level directives “on the fly” with JavaScript. As we can see in the screenshot above for my crawl of theupside.com for the purposes of this article, that holds true here as well.

One of the most important pieces of information I look at when evaluating the relative importance of JavaScript issues is the “% of total” column. If an issue impacts 0.28% of all pages (here, only one page), it will likely go to the bottom of my list of fixes. The only way that would change is if the issue affected the homepage or one of the site’s high-revenue pages, and even then it would still sit lower on the list than issues affecting more of the website.
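As a rough illustration of that prioritisation, here is a minimal sketch in Python. The total of 357 crawled pages and the issue names are assumptions, chosen only so the percentages line up with the figures quoted above; the `Issue` class and `prioritise` function are hypothetical helpers, not part of the SEO Spider.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    affected_pages: int          # number of URLs flagged for this issue
    hits_key_page: bool = False  # e.g. the homepage or a high-revenue page


def percent_of_total(issue: Issue, total_pages: int) -> float:
    """Share of crawled pages affected, as a percentage."""
    return round(100 * issue.affected_pages / total_pages, 2)


def prioritise(issues: list, total_pages: int) -> list:
    """Widest-reaching issues first; key pages only break ties."""
    return sorted(
        issues,
        key=lambda i: (percent_of_total(i, total_pages), i.hits_key_page),
        reverse=True,
    )


# Hypothetical crawl size and issue list, for illustration only.
TOTAL_PAGES = 357
issues = [
    Issue("Hypothetical one-page issue", 1, hits_key_page=True),
    Issue("Contains JavaScript content", 356),
]

for issue in prioritise(issues, TOTAL_PAGES):
    print(f"{percent_of_total(issue, TOTAL_PAGES):6.2f}%  {issue.name}")
```

Sorting on reach first and key pages second mirrors the reasoning above: a one-page issue on the homepage still ranks below an issue that touches almost every URL.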

As we can see, though, there’s a lot of changing around of the page content with JavaScript for this site, as “Contains JavaScript content” affects 99.72% of pages. So how do we debug this further?

This is where storing both the HTML and the rendered HTML in your Screaming Frog crawl comes in handy for a technical SEO deep-dive.

In Screaming Frog, when you choose a page, and click the “View Source” tab in the page-level menu, you’re able to see the differences between the initial HTML and the client-side rendered HTML. When you choose to “Show Differences” the HTML is highlighted in red and green where there are removals or additions from the original source code.
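If you export the stored HTML and want to reproduce a similar comparison outside the tool, a minimal sketch with Python’s standard `difflib` module could look like this. It is not how the SEO Spider builds its view, and the sample markup and class names are made up for illustration.

```python
import difflib


def html_changes(raw_html: str, rendered_html: str) -> list:
    """Return only the added (+) and removed (-) lines between two HTML strings."""
    diff = difflib.unified_diff(
        raw_html.splitlines(),
        rendered_html.splitlines(),
        fromfile="raw",
        tofile="rendered",
        lineterm="",
    )
    return [
        line
        for line in diff
        # Keep additions/removals, skip the "+++"/"---" file headers.
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


# Hypothetical snippets: the filter list only exists after rendering.
raw = '<div id="filters"></div>\n<h1>Leggings</h1>'
rendered = (
    '<div id="filters">\n'
    '<ul class="facet-list"><li>Size</li></ul>\n'
    '</div>\n'
    '<h1>Leggings</h1>'
)

changes = html_changes(raw, rendered)
for line in changes:
    print(line)
```

Lines starting with `+` exist only in the rendered HTML, which is usually where the JavaScript-generated content shows up.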

In this example, we can see by looking at the source code, the additions, and the names of classes, that it looks like the filtering used to display products on this page is generated by JavaScript. Taking that a step further, we know this website is built using BigCommerce, and the filtering functionality may be managed by a plugin.

Knowing that, we can go to the developers with a specific question about the filtering on the page, and ask what the scope and effort would be if we wanted (or felt we needed) to change how it was built.

You can also use this report to determine the library or framework being used, as it will usually be referenced in the .js file names in the <head>, the naming conventions of the classes, or the annotations in the HTML. If that doesn’t give you a strong enough indication, there are a number of online tools and extensions that can provide stronger hints, like Wappalyzer.
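The same kind of eyeballing can be sketched in code. The snippet below is a rough heuristic under stated assumptions: it looks for framework names in script file names and for a few attributes these frameworks commonly leave in rendered HTML (`ng-version`, `data-reactroot`, `data-v-…`). The `HINTS` table is my own shortlist, not an authoritative fingerprint database, and it will produce both misses and false positives.

```python
from html.parser import HTMLParser

# Assumed hints: (substring to look for in script src, attribute name prefixes).
HINTS = {
    "Angular": ("angular", ("ng-version",)),
    "React": ("react", ("data-reactroot",)),
    "Vue": ("vue", ("data-v-",)),
}


class _Collector(HTMLParser):
    """Collects script URLs and every attribute name seen in the document."""

    def __init__(self):
        super().__init__()
        self.script_srcs = []
        self.attr_names = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            self.attr_names.add(name)
            if tag == "script" and name == "src" and value:
                self.script_srcs.append(value.lower())


def guess_frameworks(html: str) -> list:
    collector = _Collector()
    collector.feed(html)
    found = set()
    for framework, (src_hint, attr_prefixes) in HINTS.items():
        if any(src_hint in src for src in collector.script_srcs):
            found.add(framework)
        if any(name.startswith(attr_prefixes) for name in collector.attr_names):
            found.add(framework)
    return sorted(found)


# Hypothetical rendered HTML, for illustration only.
sample = (
    '<head><script src="/static/vue.runtime.min.js"></script></head>'
    '<body><div ng-version="16.2.0"></div></body>'
)
print(guess_frameworks(sample))
```

Treat the result as a prompt for a question to the developers rather than a definitive answer.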

The PageSpeed Insights Report

The PageSpeed Insights report in the SEO Spider is a goldmine for details on the standard opportunities reported by the web version at pagespeed.web.dev. Again we see one of the major advantages of the SEO Spider win out: the ability to aggregate issues across the website and see the scale of the impact of a particular issue.

Reduce Unused JavaScript

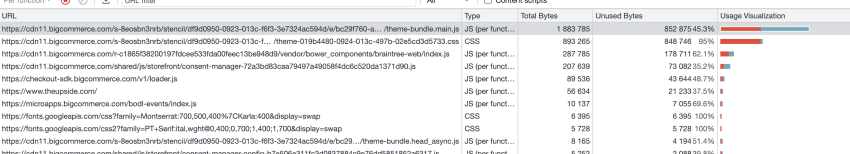

The mandate to “reduce unused JavaScript” is one of the more common ones I’ve seen in the PageSpeed Insights report. But what recommendation does that actually translate to? In the example above, we see a few things:

- The biggest potential reduction comes from a BigCommerce file, the JavaScript associated with the main theme (as deduced by the full file name, not shown in the screenshot above).

- Most of the rest of the opportunities come from third-party apps, including Facebook, Vimeo and Hotjar. The best we may be able to do with these scripts is make sure they’re up-to-date and are only installed on the pages they’re needed on.
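To make that first-party versus third-party split easier to see, the opportunities can be grouped by host. This is a minimal sketch: the URLs, byte savings and the `first_party_hosts` set are all hypothetical placeholders, and in practice you would fill them from your own PageSpeed export.

```python
from collections import defaultdict
from urllib.parse import urlparse


def wasted_bytes_by_host(opportunities, first_party_hosts):
    """Sum potential savings per host and label each host by ownership."""
    totals = defaultdict(int)
    for script_url, wasted_bytes in opportunities:
        host = urlparse(script_url).hostname or ""
        totals[host] += wasted_bytes
    rows = [
        ("first-party" if host in first_party_hosts else "third-party", host, total)
        for host, total in totals.items()
    ]
    # Biggest potential saving first.
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Hypothetical figures; not taken from the crawl discussed in this article.
opportunities = [
    ("https://cdn.example-platform.com/stencil/theme-bundle.main.js", 180_000),
    ("https://connect.facebook.net/en_US/fbevents.js", 40_000),
    ("https://player.vimeo.com/api/player.js", 35_000),
    ("https://static.hotjar.com/c/hotjar-000000.js", 25_000),
    ("https://connect.facebook.net/signals/config/000000", 15_000),
]

result = wasted_bytes_by_host(opportunities, {"cdn.example-platform.com"})
for party, host, total in result:
    print(f"{party:12} {host:28} {total / 1000:.0f} KB")
```

First-party rows are the ones the developers can change directly; third-party rows are mostly a question of whether the script needs to load on that page at all.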

If we want to go further to understand what the unused JavaScript within that BigCommerce file is, we can turn to the coverage report in the browser’s developer tools.

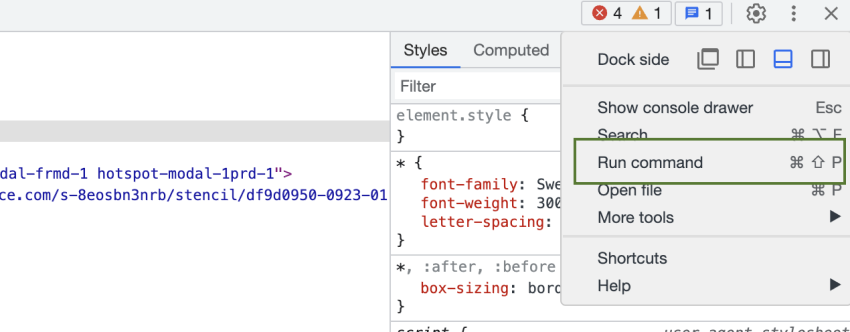

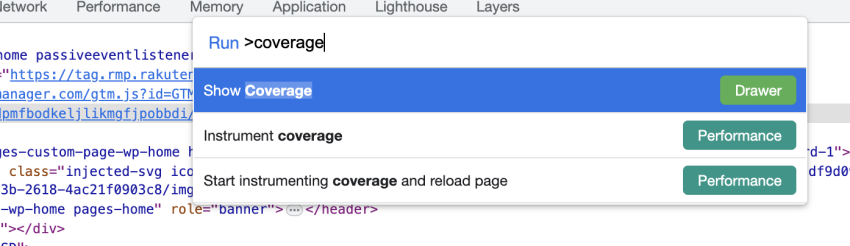

To get there, open the page in your browser and open the developer tools. Then you’ll want to run a command. Rather than trying to remember the shortcut, I go to the three-dot menu and click “Run command”.

From there, I type coverage, and click “Show Coverage”.

It will return a screen like the below, where you can see how much of the JavaScript is actually used.

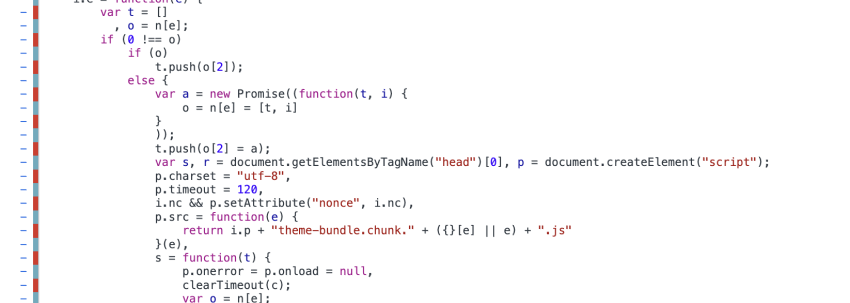

When you choose the script you want to analyse, you should see detail of what lines or functions within that JavaScript file are used or unused, as indicated by the teal and red next to the code line number.
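If you want numbers to share with a developer, the coverage data can also be exported and summarised. The sketch below assumes the export is a JSON list of entries with `url`, `text` and a `ranges` list of used, non-overlapping `start`/`end` offsets; check that against your own export before relying on it. The sample entries are invented.

```python
def coverage_summary(entries):
    """Return (url, total_chars, unused_percent) rows, most unused first."""
    rows = []
    for entry in entries:
        total = len(entry["text"])
        # Each range marks a span of the file that was actually executed.
        used = sum(r["end"] - r["start"] for r in entry["ranges"])
        unused_pct = round(100 * (total - used) / total, 1) if total else 0.0
        rows.append((entry["url"], total, unused_pct))
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Hypothetical entries standing in for a parsed export, e.g.
# entries = json.load(open("coverage.json"))  # file name is an assumption
entries = [
    {
        "url": "https://cdn.example.com/widget.js",
        "text": "w" * 100,
        "ranges": [{"start": 0, "end": 90}],
    },
    {
        "url": "https://cdn.example.com/theme-bundle.js",
        "text": "t" * 200,
        "ranges": [{"start": 0, "end": 30}, {"start": 100, "end": 120}],
    },
]

summary = coverage_summary(entries)
for url, total, unused_pct in summary:
    print(f"{unused_pct:5.1f}% unused of {total} chars  {url}")
```

Coverage only reflects what ran during that one page load, so code that looks unused here may still be needed after a click, on another template, or in another region.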

If I were the SEO for The Upside, I would take this to the developer and ask what they think is happening here; I can’t discern any particular pattern in this coverage report detail. At best, I’d guess it may be some sort of file compression error.

Sometimes, though, these reports are pretty clear cut. For example, I had a client where the “unused” JavaScript was actually the part of the script that, after checking the region, displayed the correct version of translated content. Obviously, we didn’t want to remove the scripts that managed that element of internationalisation. It did, however, open up a conversation amongst the developers about how that could be done without relying so heavily on JavaScript, or how it could be done more selectively, rather than loading every version of the translated content up front and keeping it waiting in the wings.

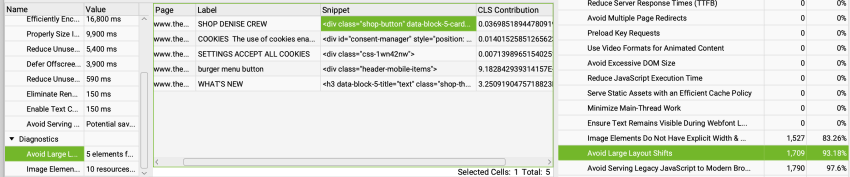

Avoid Large Layout Shifts

Large layout shifts are another common JavaScript issue on many websites. Screaming Frog SEO Spider reports will give you the tools to know exactly which elements are causing the layout shifts. You can see this report if you go to the page-level menu tab PageSpeed Details > Diagnostics > Avoid Large Layout Shifts. Alternatively, you can click on Avoid Large Layout Shifts in the flyout menu under PageSpeed to see the affected pages.

For many of us, though, it’s easier to make a business case for addressing large layout shifts when we can visualise them. Some tools to help with that include:

- Webpagetest.org

- Layoutshiftgifgenerator

- The Performance Report in Chrome (as described in the Core Web Vitals audit article)

Two major culprits I find for large layout shifts are externally-hosted fonts (usually Google fonts) and images. Cookie consent or sale notification banners are also becoming common causes of layout shifts.

A few options to address the layout shift caused by non-web-safe fonts that load after the page has started rendering include:

- Using rel=preload to load the font earlier

- Hosting the font locally rather than calling it externally
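For the preload option, the tag itself is short. Here is a minimal sketch that builds it for a locally hosted font; the font path is hypothetical and `font_preload_tag` is just an illustrative helper. The `crossorigin` attribute is included because browsers fetch fonts in CORS mode, so a font preload without it is typically not reused.

```python
from html import escape


def font_preload_tag(href: str, mime_type: str = "font/woff2") -> str:
    """Build a <link rel="preload"> tag for a web font."""
    return (
        f'<link rel="preload" href="{escape(href, quote=True)}" '
        f'as="font" type="{escape(mime_type, quote=True)}" crossorigin>'
    )


# Hypothetical path to a self-hosted font file.
tag = font_preload_tag("/fonts/brand-regular.woff2")
print(tag)
```

The tag belongs in the page’s <head>, and it is worth preloading only the one or two font files used above the fold.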

For images, a few options are:

- Checking that height and width are set on the images and, if they aren’t, seeing whether the CMS allows for it (not all do; HubSpot, for example, doesn’t at the time of writing).

- Using an image placeholder library, like holder.js or placeholder.js
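The first check in that list is easy to script against the rendered HTML. This is a minimal sketch using Python’s standard HTML parser; the sample markup is made up, and the check only looks at `width` and `height` attributes, so images sized purely through CSS will also be flagged.

```python
from html.parser import HTMLParser


class ImageDimensionAudit(HTMLParser):
    """Collects the src of every <img> missing a width or height attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if not attributes.get("width") or not attributes.get("height"):
            self.missing.append(attributes.get("src") or "(no src)")


def images_missing_dimensions(html: str) -> list:
    audit = ImageDimensionAudit()
    audit.feed(html)
    return audit.missing


# Hypothetical markup, for illustration only.
sample = (
    '<img src="/img/hero.jpg" width="1200" height="600">'
    '<img src="/img/promo.jpg">'
    '<img src="/img/logo.svg" width="120">'
)
missing = images_missing_dimensions(sample)
print(missing)
```

A list like this gives the developers specific templates or components to look at, rather than a general request to “set image dimensions”.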

Often when I make a business case for fixing something like large layout shifts, I speak to perceived load time and the reduction in conversion rate that usually comes with a longer page load time, rather than “SEO benefits.”

We all know that, yes, it will benefit SEO. Many times, though, getting it done requires working with and advocating for other departments and their goals as well. As long as the job gets done, as SEOs, we should be content.

Conclusion

Having a clear and valuable conversation with developers about what specifically in the JavaScript on the website is giving you the biggest headache, and giving them a direction to look in, is absolutely something to aspire to. As technical SEOs, we should be doing everything in our power to make our development team’s job easy, and using the Screaming Frog SEO Spider to debug the JavaScript is a fantastic step towards a stronger relationship with developers, and a stronger website.
